Apple exploring motion-based 3D user interface for iPhone

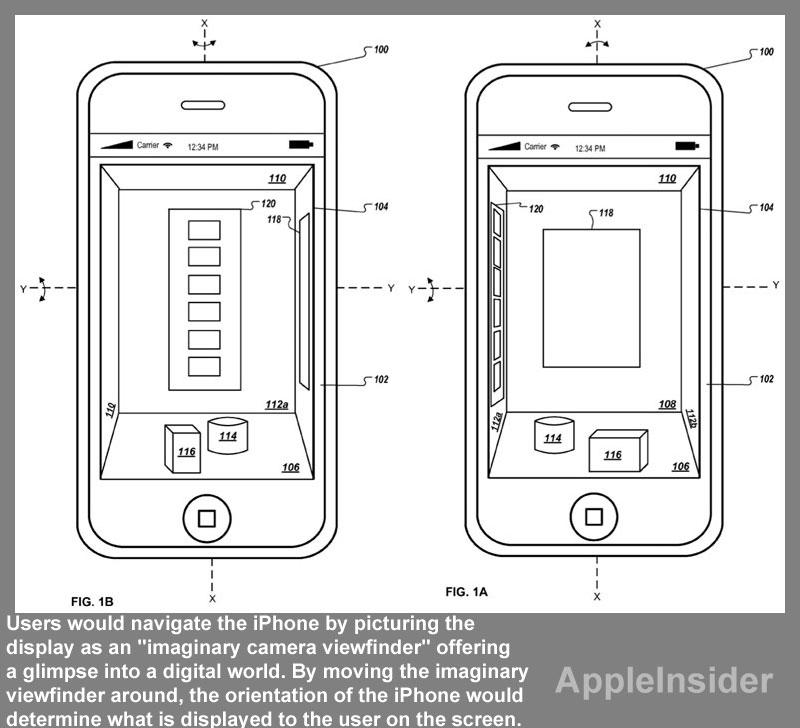

The concept was revealed this week in a new patent application discovered by AppleInsider, titled "Sensor Based Display Environment." It describes a three-dimensional display environment that uses orientation data from onboard sensors, such as a gyroscope and compass, to navigate the system.

In the application, Apple notes that problems can occur when using a 3D user interface with a touchscreen. The application uses "3D" to mean polygons rendered on the screen of a traditional iPhone, rather than the "illusion" of 3D created by sending a different image to each eye with a new, special display.

"Due to the limited size of the typical display on a mobile device, a 3D GUI can be difficult to navigate using conventional means, such as a finger or stylus," the filing reads. "For example, to view different perspectives of the 3D GUI, two hands are often needed: one hand to hold the mobile device and the other hand to manipulate the GUI into a new 3D perspective."

Apple could remove the need to touch the screen entirely by using orientation data from onboard sensors to determine a "perspective projection of the 3D display environment." Examples of this kind of interaction can be seen with augmented reality applications, or using the gyroscope to view a location with Google Street View in the iOS Maps application.

But Apple's concept would take the idea much further, potentially offering users the ability to navigate the device using motion. In one illustration, the iPhone is shown with a home screen featuring a floor, back wall, ceiling and side walls.

Users would navigate the iPhone by picturing the display as an "imaginary camera viewfinder" offering a glimpse into a digital world. By moving the imaginary viewfinder around, the orientation of the iPhone would determine what is displayed to the user on the screen.
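The "viewfinder" idea can be illustrated with a minimal sketch. It is not taken from the filing: the function names, the yaw and pitch convention, and the axis layout below are all assumptions made for illustration. Given a device orientation, it computes the direction an imaginary camera is pointing and reports which surface of the virtual room would fill the screen.

```python
import math

def view_direction(yaw_deg, pitch_deg):
    """Return the unit vector the imaginary camera looks along.

    Assumed convention (hypothetical, not from the patent filing):
    yaw 0 / pitch 0 faces the back wall (+z), positive yaw turns
    toward the right wall (+x), positive pitch tilts up (+y).
    """
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    x = math.cos(pitch) * math.sin(yaw)
    y = math.sin(pitch)
    z = math.cos(pitch) * math.cos(yaw)
    return (x, y, z)

def facing_surface(yaw_deg, pitch_deg):
    """Name the room surface the camera is mostly pointed at."""
    x, y, z = view_direction(yaw_deg, pitch_deg)
    # Score each surface by how strongly the view vector points at it.
    scores = {
        "right wall": x,
        "left wall": -x,
        "ceiling": y,
        "floor": -y,
        "back wall": z,
        "behind the user": -z,
    }
    return max(scores, key=scores.get)

# Holding the phone level and turning a quarter turn to the right:
print(facing_surface(90, 0))
```

A real implementation would feed the orientation into a full perspective projection rather than picking a single surface, but the mapping from sensor angles to a camera direction is the same first step.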

As users look about their virtual room, a number of objects can be placed on the floor, walls, ceiling and even behind them. These objects could be selected and would allow users to navigate the device.

The filing also makes note of a "snap to" feature that could make navigation quicker. By performing a preset action, such as shaking the iPhone, the user could make the view automatically "snap to" a predetermined camera view of the 3D user interface.
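One way such a "snap to" trigger might work is sketched below. The filing does not describe a detection method, so the threshold, the peak count, and every name here are hypothetical: a shake is assumed to be several accelerometer samples whose magnitude exceeds a limit, and a detected shake replaces the current view with a preset one.

```python
import math

SHAKE_THRESHOLD_G = 2.0   # hypothetical limit, in units of g
PRESET_VIEW = (0.0, 0.0)  # hypothetical preset (yaw, pitch) in degrees

def is_shake(samples, threshold=SHAKE_THRESHOLD_G, min_peaks=3):
    """Treat a window of (ax, ay, az) readings as a shake when at
    least `min_peaks` of them exceed `threshold` in magnitude."""
    peaks = sum(
        1 for ax, ay, az in samples
        if math.sqrt(ax * ax + ay * ay + az * az) > threshold
    )
    return peaks >= min_peaks

def next_view(current_view, samples, preset=PRESET_VIEW):
    """Snap to the preset view on a shake; otherwise keep the view."""
    return preset if is_shake(samples) else current_view

# A phone at rest reads about 1 g, so the view is left alone.
at_rest = [(0.0, 0.0, 1.0)] * 10
print(next_view((45.0, 10.0), at_rest))
```

Requiring several strong samples rather than one is a simple guard against a single bump being mistaken for a deliberate shake.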

The proposed invention, made public this week by the U.S. Patent and Trademark Office, was first filed in July of 2010. It is credited to one inventor: Patrick Piedmonte.

Neil Hughes
