Why did Apple spend $400M to acquire Shazam?

Rumors that Apple was seeking to acquire UK firm Shazam Entertainment Ltd. first floated back in December. Following an EU approval process, Apple officially announced it had finalized the purchase on Monday, ostensibly to "provide users even more great ways to discover, experience and enjoy music." That appears to be a major understatement.

Shazam is one of the oldest apps in the store

It might be hard to get excited about Apple acquiring one of the first apps to ever appear in the iPhone App Store way back in 2008. But Shazam isn't just as old as the iOS App Store; it's actually about as old as Mac OS X itself, having been founded in 1999 and first released in 2002 as a dial-up service for identifying songs.

Sixteen years ago, UK users could mash vertical digits 2580 on their Nokia to dial up the service, which would listen for 30 seconds, then disconnect, process the results, and text the user back with the song title and artist. The service later launched in the U.S. in 2004, costing about $1 per identification. When it launched as a free iPhone app in 2008, it presented identified songs in iTunes, harvesting an affiliate cut of suggested download purchases.

Across the last decade, Apple has regularly promoted and partnered with Shazam, noting it was one of the top ten apps for iOS back in 2013 and integrating it with Siri in 2014. The next year it showed off Shazam working on the Apple Watch, presenting lyrics of the song in real time.

Shazam remains wildly popular today across a variety of platforms including the Mac. However, music identification is not an exclusive feature Apple can buy given competition in the space, nor something that has a clear potential to excite users to the point of keeping them from defecting or causing them to switch.

So while Shazam integrated itself with Snapchat a couple years ago, that hasn't stopped Facebook's Instagram Stories from ransacking Snapchat's users and popularity. Similarly, Google developed its own Shazam-like service for identifying ambient songs automatically on Pixel 2 phones, but few in Android-land cared enough about that — or any of Pixel's other features — to pay a premium to buy the device.

Why buy Shazam?

On top of the dubious value of basic music identification, Apple also isn't known for liberally buying up scores of acquisitions willy-nilly just to blow money. Note that's in contrast to Google and Microsoft, each of which incinerated $15 billion on a pair of outlays that were later written off in the manner of a millionaire playboy totaling his new Lamborghini before walking away with just a casual smirk.

Virtually every one of Apple's recent acquisitions can be directly linked to the launch of serious, significant new features or to embellishing core initiatives designed to help sell its hardware, including Face ID (Faceshift, Emotient, and Perceptio); Siri (VocalIQ); Photos and CoreML (Turi, Tuplejump, Lattice Data, Regaind); Maps (Coherent Navigation, Mapsense, and Indoor.io); wireless charging (PowerbyProxi) and so on.

Further, the reported $400 million price tag on the Shazam acquisition puts it in a rare category of large purchases that Apple has made which involved revolutionary changes to its platforms. Only Anobit (affordable flash storage), AuthenTec (Touch ID), PrimeSense (TrueDepth imaging) and NeXT itself are in the same ballpark apart from Beats— Apple's solitary, incomparably larger $3 billion purchase that delivered both the core of Apple Music and an already profitable audio products subsidiary paired with a popular, global brand.

What about Shazam's "ways to discover, experience and enjoy music" could possibly be worth the amount of money Apple spent to acquire it?

Shazam's Flow-FireFly: a visual onramp to Augmented Reality

Rather than just music discovery, it's much more likely related to a key initiative at Shazam that's distinct from the song identification feature it's most famous for, as Mike Wuerthele first noted for AppleInsider in December.

Back in 2015, Shazam announced an effort to move beyond audio identification using a microphone— largely provided as a music identifying service to users— to using smartphone cameras to visually identify items. However, rather than simply identifying objects, Shazam's visual recognition platform was developed as a platform for marketers seeking to engage with audiences.

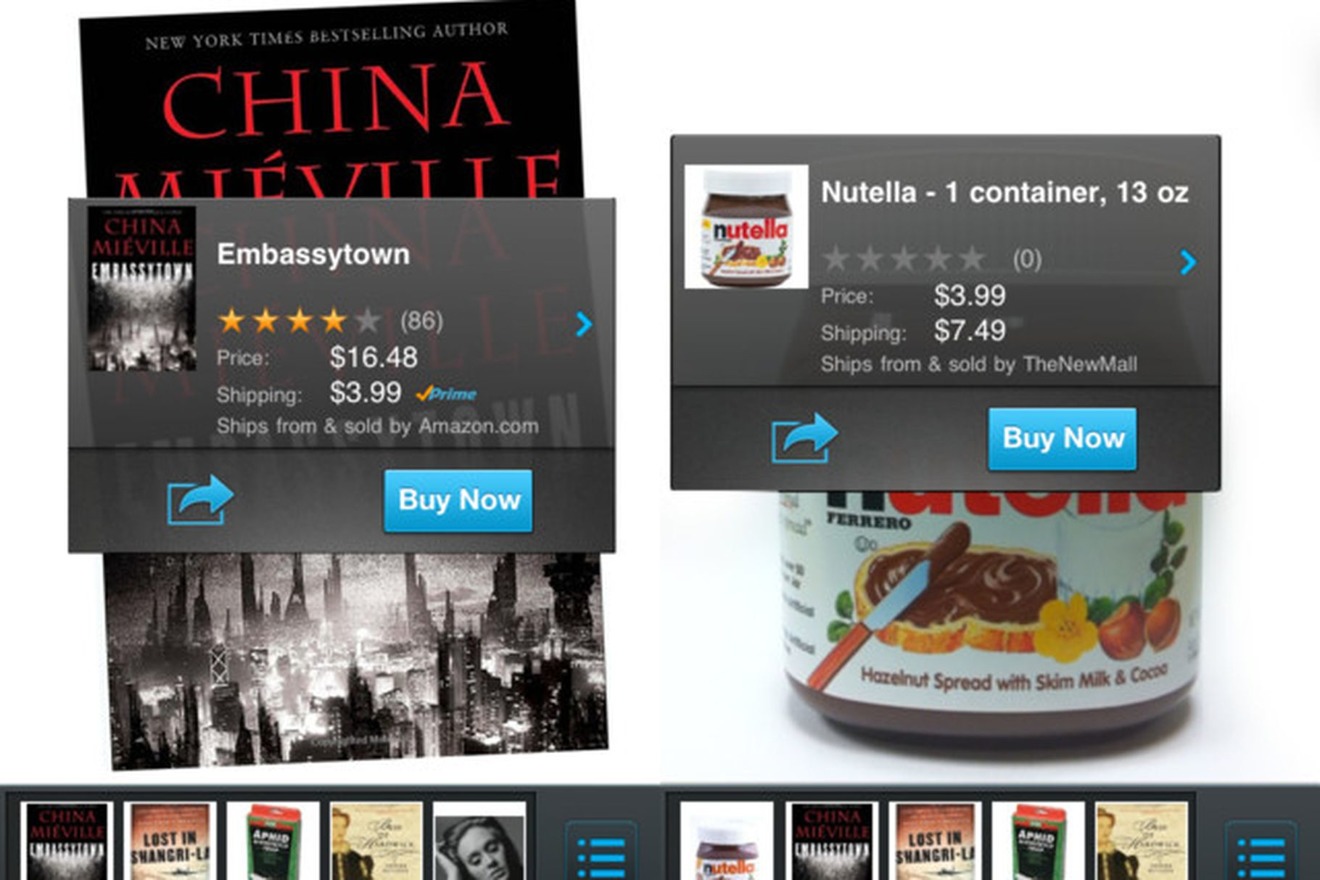

A year earlier, Amazon debuted its FireFly service to perform a similar sort of "visual Shazam," except that Amazon's aim was to use FireFly as a way to sell its then-new Fire Phone.

FireFly was based on Flow, an iPhone app originally launched by Amazon's A9 search subsidiary back in 2011. Flow was designed to recognize millions of products by scanning their barcodes.

Years later, Amazon presented "FireFly" as a way for Fire Phone users to similarly point their camera at a product (in the demo, the same jar of Nutella that Flow was promoted as recognizing) to identify it and subsequently order it from Amazon. FireFly was also designed to recognize other data such as phone numbers on a business card.

Amazon's camera-based FireFly was nearly identical in concept to Shazam's use of a microphone to identify a song and then taking the user to iTunes to purchase it. But while Shazam had been wildly popular as a free app to identify songs, the Fire Phone ended up (for several reasons) a huge flop as a somewhat-expensive new phone aimed primarily at just making it easier to shop on Amazon.

When Shazam introduced its own visual recognition service the following year, rather than tying it into a store to suggest referral purchases it launched the service with a series of marketing partners looking for new ways to get audiences to interact with their brands. From Disney and Warner Bros. to Target to a variety of book and periodical publishers to a series of other product brands, Shazam enabled interactive campaigns that involved a "Shazam tag."

Once a user identified a code with the Shazam app (or recognized an image such as a book or album cover), it worked like a simple URL to open up a marketing website, or take them to a movie preview or even get them shopping in Target's online store. So far, Shazam had little more than a proprietary QR Code embellished with some visual recognition features.

Even so, in 2016, Shazam's new foray into marketing was compelling enough to raise $30 million in new funding from investors, giving the company a unicorn valuation of $1 billion. The fact that Apple subsequently paid "only" $400 million for it makes the deal sound like a bargain.

Last year, Shazam took an additional step, embracing Augmented Reality. Now, rather than just taking users to a standard website, it could use its Shazam Codes (or visual recognition of products or posters) to launch an engaging experience right in the camera, layering what the camera sees with "augmented" graphics synced to the movement of the user's device.

Now users could identify a bottle of Bombay Sapphire gin (below) and see it animate botanicals in front of them, while also suggesting cocktail recipes.

Another Shazam campaign in Australia, for Disney's "Guardians of the Galaxy 2," delivered a Spotify playlist "mixtape" along with presenting the movie trailer and an opportunity to buy tickets. A campaign in Spain let users animate Fanta billboards in AR using their phones. And a Hornitos tequila app used a mini-game, rendered in ARKit, to award discounts on purchases.

Given Apple's interest in building traction for ARKit, which launched last fall as the world's largest AR platform, it seems pretty clear that Apple bought Shazam, not really for any particular technology as Apple has already developed its own core visual recognition engine for iOS, but because Shazam has developed significant relationships with global brands to make use of AR as a way to engage with audiences.

Apple's iAd, part two

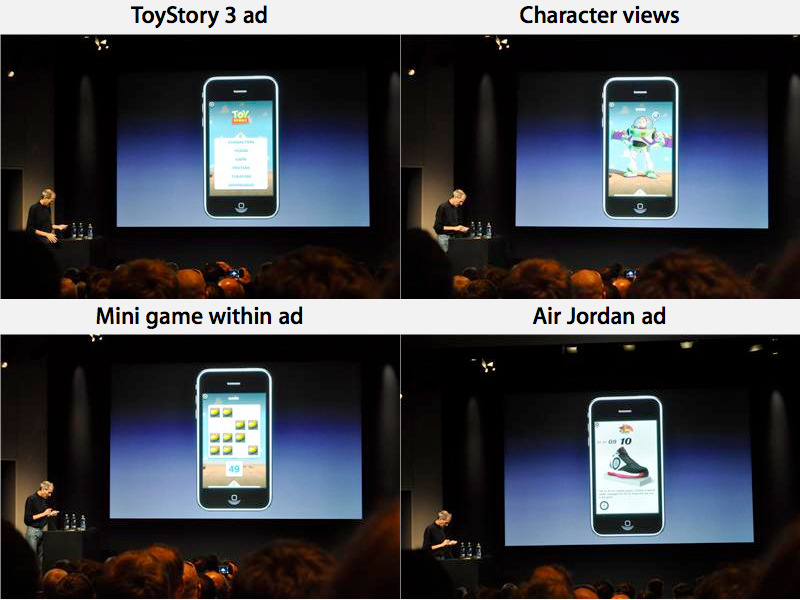

Back in 2010, Steve Jobs introduced iAd as an initiative to help iOS developers to monetize free apps. Rather than just putting up conventional banner ads— which could only pull users out of their apps and throw them into the browser to display a marketing website— iAd was intended to launch a mini-world of marketing content users could explore, then leave to return to the app they were using.

Apple hoped to build a legitimate ad business that didn't rely on tracking users, while giving developers a better way to monetize their apps and end users a better experience with advertising that respected their privacy while keeping navigation straightforward.

Within an iAd experience hosted as a secured HTML5 container running in parallel to the app, users could explore 3D graphical images, customize a product, and even engage in mini-games.

Apple's iAd was a novel idea, but advertisers hated the fact that Apple erected barriers to stop marketers from tracking users and collecting data on them the way Google and other ad networks allowed. This eventually killed iAd, after a brutal series of bashing articles were presented by ad world figures disparaging iAd as being too expensive, then too inexpensive, all as a distraction from the real reason that ad agencies hated iAd so much: it reined in their ability to spy on users, which had already become the standard for setting ad rates and charging advertisers.

Apple didn't leave the advertising business entirely — it still was in the business of merchandizing content in its own iTunes and the App Store, and it later added search advertising to its storefronts. However, it no longer had a way to closely interact with brands outside of giving them a development platform to build their own marketing apps.

With the development of ARKit, Apple has now created a new "mixed reality" world of app experiences that mesh right into the real world. At its last two WWDC events, Apple has introduced various games as primary examples of using ARKit. However, Shazam has already developed marketing campaigns that take advantage of ARKit to build engaging experiences— very similar to the core concept of iAd many years ago.

Using Shazam's AR as an iAd-like brand engagement tool

This starts to explain why Apple paid out a significant sum to own Shazam: it's perhaps one of the most valuable applications of Apple's ARKit technology outside of games. The company's business is already established across a series of major brands, ranging from Coca-Cola and Pepsi to studios such as Disney and Fox.

With Shazam inside Apple, iOS can further expand upon integrating visual recognition features into the camera, something that it has already started doing.

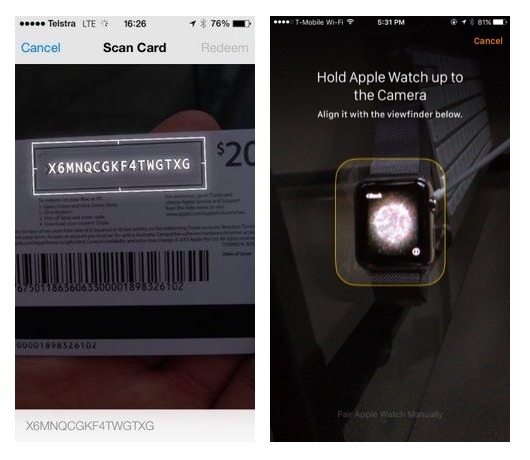

In 2017, Apple introduced intelligent support for QR Codes: simply open the camera and point it at one, and iOS will interpret the link to provide a button to open a website, or even to configure something such as the password for a local Wi-Fi network.

Apple also already makes internal use of Machine Learning-based visual recognition techniques to stay focused on a moving subject when recording video, to read the characters on a redeemed iTunes gift card, to pair an Apple Watch, or migrate to a new iPhone. It has also opened up core ML capabilities to third party developers to make use of in their own apps.

With or without Shazam, Apple could already build more sophisticated applications of visual recognition right into its iOS camera — potentially in the future, even in the background — allowing iOS devices to "see" not just QR Codes but recognized posters, billboards, products and other objects, and suggest interactions with them, without manually launching the camera. But Shazam gives Apple a way to demonstrate the value of its AR and visual ML technologies, and to apply these in partnerships with global brands.

Amazon has received incredible attention for taking the concept of Siri's voice assistant and enhancing it into a smarter, always-listening background service with Alexa, something that Apple is now addressing with Hey Siri and HomePod.

But the 50 million simple Alexa and Google Assistant devices out there lack something that most iOS devices already have: a camera capable of providing visual, not just audio, based assistance.

For Amazon and Google, that will require up-selling their Wi-Fi microphone buyers to more expensive devices outfitted with a camera. Apple already has over a billion users whose iPhone or iPad can do this today, and most of these devices can launch ARKit experiences based on what they see.

Good luck trying to build a minimally functional AR experience on the global installed base of Android phones, most of which can barely browse the web and run a few apps, let alone aspire to run Google's ARCore platform, which only works on a very limited number of recent, high-end Android models.

Additionally, as the developer of iOS, Apple can also integrate location-based geofencing and use "Hey Siri" or Siri Shortcuts to invoke not just opportunities to begin an ARKit session for Shazam-style marketing purposes, or to launch a chapter of a location based game, but even to launch work applications in enterprise settings— pointing a camera at a broken device to begin evaluating what's required to fix it, for example, or visually registering a person's invitation to check them into an event.

From that perspective, it's pretty obvious why Apple bought Shazam. It wants to own a key component supporting AR experiences, as well as flesh out applications of visual recognition. This also explains why Apple is investing so much into the core A12 Bionic silicon used to interpret what camera sensors see, as well as the technologies supporting AR— combining motion sensing and visual recognition to create a model for graphics augmented into the raw camera view.

Today, this is building captivating games, educational tools, marketing experiences and even business tools that use mobile devices to integrate reality with AR content. In the future this will likely move from a hand held display to a vehicle windshield or even wearables that augment what we see with digital information layered on top.

This all started with one of the first interesting iPhone apps.

Daniel Eran Dilger
