Siri whistleblower says Apple should face investigations over grading controversy

Nearly a year after he revealed that Apple contractors were listening in on Siri recordings, the whistleblower has gone public and feels that Apple wasn't sufficiently punished for its actions.

In a letter to European data protection regulation agencies, Thomas le Bonniec has revealed himself as the whistleblower who declared in July 2019 that Apple was mishandling consumer Siri queries.

"It is worrying that Apple (and undoubtedly not just Apple) keeps ignoring and violating fundamental rights and continues their massive collection of data," Le Bonniec wrote, according to The Guardian on Wednesday morning. "I am extremely concerned that big tech companies are basically wiretapping entire populations despite European citizens being told the EU has one of the strongest data protection laws in the world. Passing a law is not good enough: it needs to be enforced upon privacy offenders."

Le Bonniec repeated many of the claims he made in 2019, and says that Apple should be "urgently investigated" by data protection authorities and privacy watchdogs. He adds that "Apple has not been subject to any kind of investigation to the best of my knowledge."

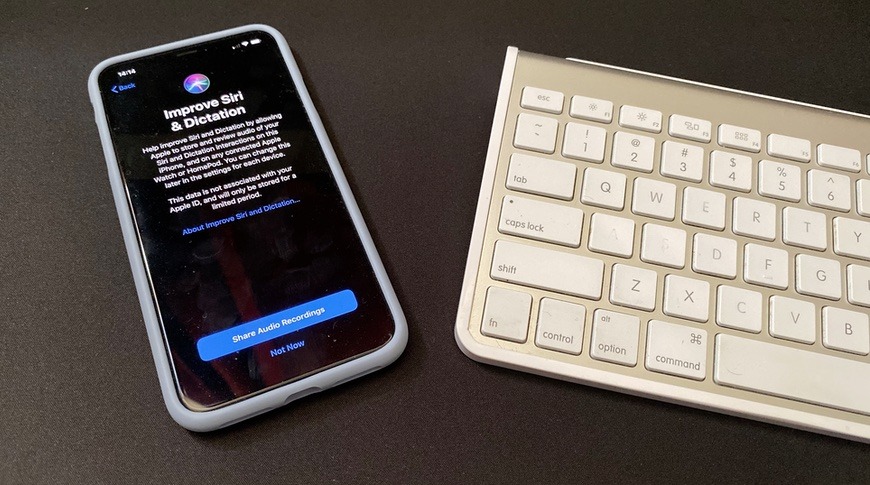

Shortly after the allegations surfaced, Apple suspended the grading program while it performed a program review. It also added an opt-in or opt-out element for the program in iOS 13.2.

The Siri grading controversy

At the time, Apple did not explicitly disclose to consumers that recordings were passed along to contractors. However, Apple has told users in its terms of service documents, since the release of the service, that some queries are manually reviewed. Despite the information having been public-facing for at least six iterations of iOS, le Bonniec was concerned over the lack of disclosure, especially considering that some recordings contained "extremely sensitive personal information."

The nature of the information, sometimes unintentional and not part of the query, is wide-ranging, the whistleblower said in July 2019.

"You can definitely hear a doctor and patient, talking about the medical history of the patient," said le Bonniec at the time. "Or you'd hear someone, maybe with car engine background noise - you can't say definitely, but it's a drug deal. You can definitely hear it happening," he said.

Le Bonniec went on to state there were many recordings "featuring private discussions between doctors and patients, business deals, seemingly criminal dealings, sexual encounters and so on. These recordings are accompanied by user data showing location, contact details, and app data."

Allegedly, there wasn't a procedure in place to deal with sensitive recordings, with the whistleblower stepping forward over the suggestion the data could be easily misused. Citing a lack of vetting for employees and the broad amount of data provided, Le Bonniec suggested that "it wouldn't be difficult to identify the person you're listening to, especially with accidental triggers" like names and addresses, especially for "someone with nefarious intentions."

Apple initially confirmed "A small portion of Siri requests are analyzed to improve Siri and dictation," but added it was kept as secure as possible.

"User requests are not associated with the user's Apple ID," the company continued. "Siri responses are analyzed in secure facilities and all reviewers are under the obligation to adhere to Apple's strict confidentiality requirements."

Apple added that a random subset of less than 1% of daily Siri activations is used for grading, with recordings typically only a few seconds in length.

At the end of August, Apple completed its review and confirmed changes would be made, including the option to opt in for audio sample analysis, and that only Apple employees — and not contractors — would be allowed to listen to the samples. Apple would also no longer retain audio recordings of Siri interactions in favor of using computer-generated transcripts to improve Siri, and would also "work to delete" any recording which is determined to be an inadvertent trigger of Siri.

The report followed a year of similar privacy-related stories about Google Assistant and Amazon's Alexa, where teams had access to some customer data logs with recordings, for similar review purposes.

In the Amazon case, the captured voice data was associated with user accounts. In Google's case, a researcher provided a voice snippet that Google had retained for analysis to the reporter who made the request, though Google says that the samples aren't identifiable by user information. How the researcher identified the user isn't clear.


Mike Wuerthele
