Future iPhone may pre-process video on the image sensor to cut size & reduce power demand

Apple is looking at ways of having the image sensor itself reduce how much processing an iPhone or other device has to do in order to produce high-quality video, with the aim of cutting both file size and battery drain.

Currently cameras in iPhones, iPads, or any other device, have an image sensor which registers all light it receives. That video data is then passed to a processor which creates the image. But that processing on the main device CPU can be intensive and make the device's battery run down quickly.
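
"Generating static images with an event camera," a newly revealed US patent application, describes a sensor that reports only the pixels that have changed, rather than every pixel in every frame.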

"Traditional cameras use clocked image sensors to acquire visual information from a scene," says the application. "Each frame of data that is recorded is typically post-processed in some manner [and in] many traditional cameras, each frame of data carries information from all pixels."

"Carrying information from all pixels often leads to redundancy, and the redundancy typically increases as the amount of dynamic content in a scene increases," it continues. "As image sensors utilize higher spatial and/or temporal resolution, the amount of data included in a frame will most likely increase."

In other words, as iPhone cameras get higher resolution, and as people use them to record longer videos, the sheer volume of data being processed rises.
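To give a rough sense of how that volume scales, here is a minimal back-of-the-envelope sketch. The function name and the resolution, frame rate, and bytes-per-pixel values are hypothetical illustrations, not figures from Apple's application.

```python
def raw_bytes_per_second(width, height, bytes_per_pixel, fps):
    """Uncompressed data rate when every pixel is reported in every frame."""
    return width * height * bytes_per_pixel * fps

# Hypothetical settings chosen only for illustration.
hd = raw_bytes_per_second(1920, 1080, 1, 30)   # 1080p at 30 frames per second
uhd = raw_bytes_per_second(3840, 2160, 1, 60)  # 4K at 60 frames per second

print(hd)        # bytes per second at the lower setting
print(uhd / hd)  # how many times more data the higher setting produces
```

Under these assumed settings, doubling the width, height, and frame rate multiplies the raw data by eight, before any compression is applied.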

"Frames with more data typically require additional hardware with increased capacitance, which tends to increase complexity, cost, and/or power consumption," says the application. "When the post-processing is performed at a battery-powered mobile device, post-processing frames with significantly more data usually drains the battery of the mobile device much faster."

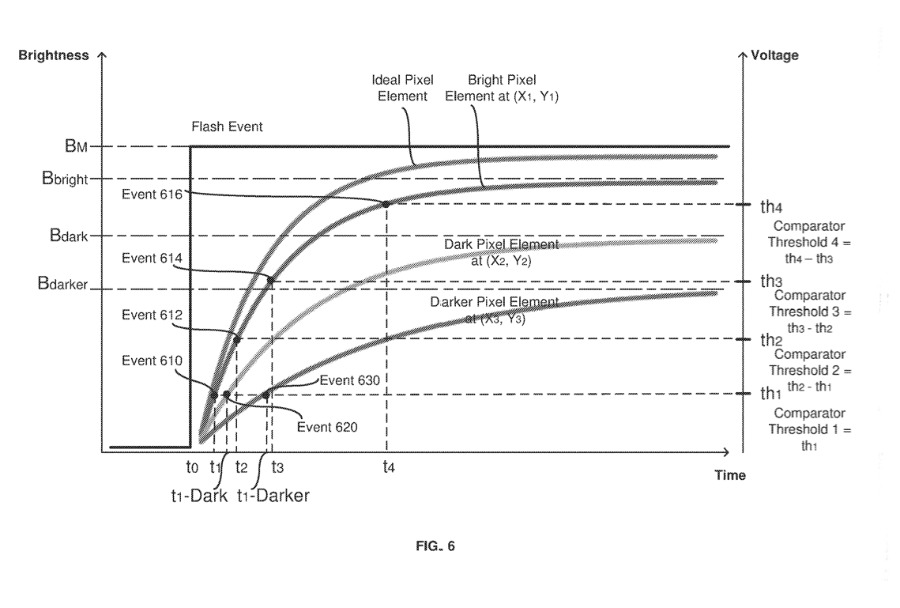

The application then describes methods where "an event camera indicates changes in individual pixels." Instead of capturing everything, the "camera outputs data in response to detecting a change in its field of view."

Typically, when video is compressed, data for pixels that have not changed between frames is discarded so that the file size can be made smaller. In Apple's proposal, those pixels would not even reach the processor.

"[Pixel] event sensors that detect changes in individual pixels [can thereby allow] individual pixels to operate autonomously," it continues. "Pixels that do not register changes in their intensity produce no data. As a result, pixels that correspond to static objects (e.g., objects that are not moving) do not trigger an output by the pixel event sensor, whereas pixels that correspond to variable objects (e.g., objects that are moving) trigger an output by the pixel event sensor."

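The idea of pixels reporting only when they change can be sketched in a few lines. This is a simplified software simulation under stated assumptions, not the method in Apple's application: the names `pixel_events` and `apply_events`, the fixed `threshold`, and the choice to report the new intensity value are all hypothetical, and real event sensors do this in hardware and may report only the direction of a change.

```python
from typing import List, Tuple

Frame = List[List[int]]        # rows of pixel intensities
Event = Tuple[int, int, int]   # (row, column, new intensity)

def pixel_events(previous: Frame, current: Frame, threshold: int = 10) -> List[Event]:
    """Emit an event only for pixels whose intensity changed by more than threshold."""
    events = []
    for r, (prev_row, cur_row) in enumerate(zip(previous, current)):
        for c, (old, new) in enumerate(zip(prev_row, cur_row)):
            if abs(new - old) > threshold:
                events.append((r, c, new))
    return events

def apply_events(image: Frame, events: List[Event]) -> Frame:
    """Update a copy of a stored static image with the reported changes."""
    updated = [row[:] for row in image]
    for r, c, value in events:
        updated[r][c] = value
    return updated

# A static scene in which a single pixel changes between two moments.
previous = [[50, 50, 50],
            [50, 50, 50]]
current = [[50, 50, 50],
           [50, 200, 50]]

events = pixel_events(previous, current)
print(events)                                      # only the changed pixel is reported
print(apply_events(previous, events) == current)   # the static image can be rebuilt
```

In this toy scene, five of the six pixels produce no data at all, which is the saving the application is after: the static parts of the image never have to be sent on for processing.
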
The patent application is credited to Aleksandr M. Movshovich and Arthur Yasheng Zhang. The former has multiple prior patents in television imaging.

William Gallagher

Mike Wuerthele

Andrew Orr

Marko Zivkovic