Apple working on software for dynamic 'Apple Glass' audio and AR image blending

Apple AR glasses or VR headsets may include a switchable audio system offering different listening modes, as well as a system that could quickly and smoothly blend a real-world view with virtual imagery.

Devices like the often-rumored Apple Glass smartglasses, or an augmented reality or virtual reality headset, have to provide users with convincing visuals and sufficient audio for full immersion. Each element, however, poses its own problems that makers of head-mounted displays have to overcome.

Headset audio

For audio, the needs of AR headsets and smart glasses differ greatly from those of VR. Users of the former may want limited audio so they can still hear their surroundings, while VR typically relies on blocking out external sounds, leaving only the audio of the virtual world.

Current headsets can offer this choice if users supply their own headphones, but those with built-in audio systems typically don't offer as much flexibility as a user may want.

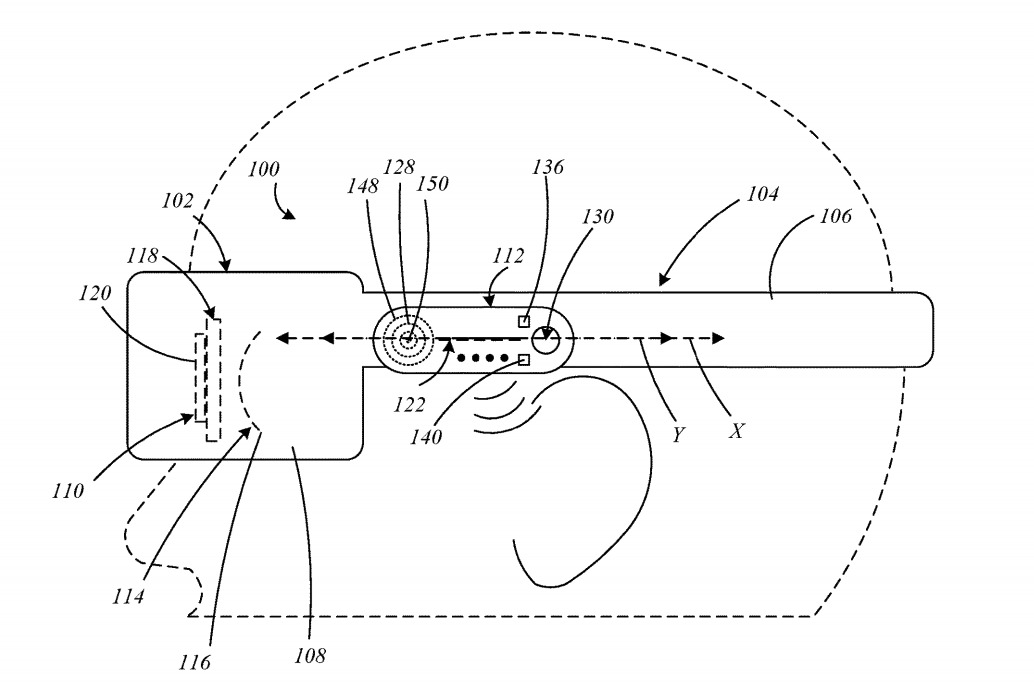

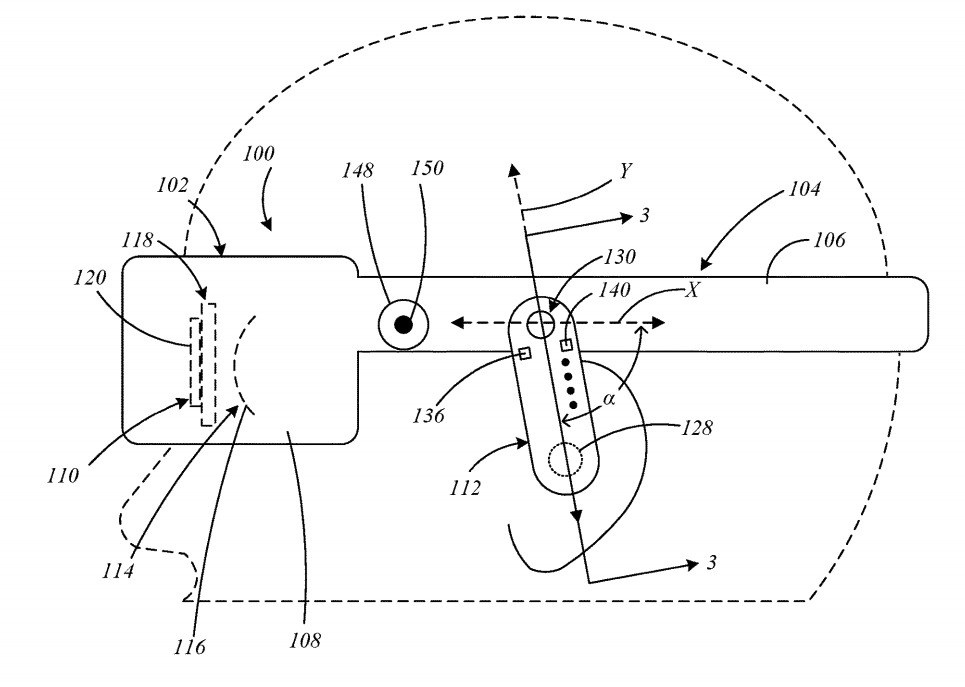

In a patent titled "Display devices with multimodal audio," granted by the US Patent and Trademark Office on Tuesday, Apple describes head-mounted vision systems with an audio component that can move between positions on the headset's support structure.

Apple proposes that the audio system could switch between an extra-aural mode, where the speakers of a movable earphone system are held a small distance away from the ears, and an intra-aural mode that places the speakers in the ear canal. The extra-aural mode would let external sounds be heard more clearly, while the intra-aural mode would do more to block environmental sounds.

To switch between the modes, Apple suggests using a single driver in each earphone that can project sound through different ports to suit each position. This includes adjusting the woofer's power level depending on whether it is close to the ear, and even a switchable physical element that could alter how the sound travels.

The sound-generating module would use a short arm on a pivot, with the end furthest from the pivot containing the elements that sit in the ear. Depending on how it is pivoted, the unit either feeds audio directly into the ear or plays it extra-aurally, switching between the two modes automatically.

As well as pivoting, Apple suggests the audio element may also be able to tilt towards and away from the ear for easier placement, and may include a telescoping section.

The patent was originally filed on April 11, 2019, and lists its inventors as Robert D. Silfvast, James W. VanDyle, Jeremy C. Franklin, Neal D. Evans, and Christopher T. Eubank.

Blended vision

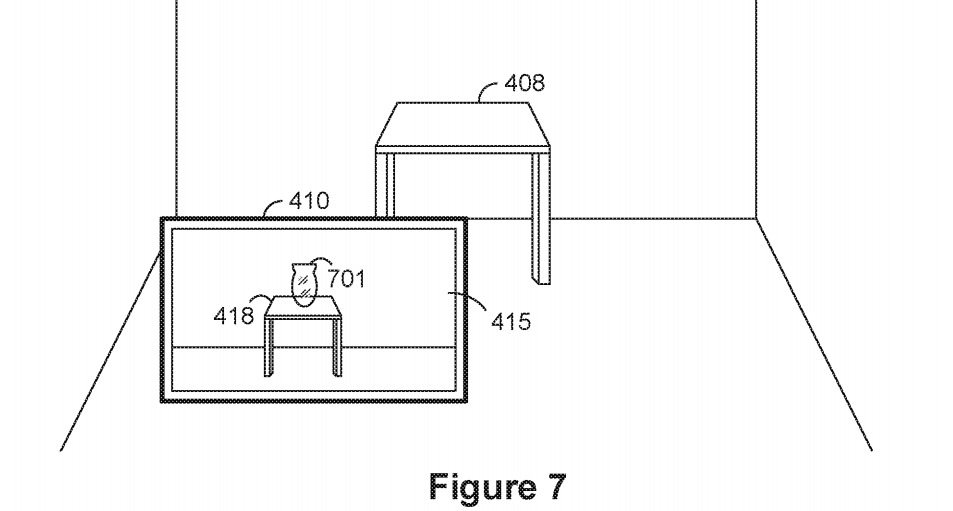

In the second patent, Apple tackles how an AR headset overlays virtual objects on a real-world video feed, combining camera data with digitally produced imagery on the fly. Because this has to be done both accurately and quickly, doing it to a high standard could require considerable computational resources.

In the patent titled "Method and device for combining real and virtual images," Apple suggests a way a system could compute the blended image using as few resources as possible.

The patent describes how a camera feed produces values for "real pixels," each of which has a virtual counterpart in the form of a "virtual pixel." Each has its own pixel value, and the two are used to calculate the final pixel value displayed to the user.

The two pixel values can be combined into a single value, which can be based on a weighted average of the real and virtual pixel values. Apple reckons the weighting can be determined in part by the alpha, or transparency, values of either the real or virtual pixels.
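As a rough illustration of that weighted average, the Python sketch below blends one real and one virtual pixel value. It assumes a single alpha between 0 and 1 that acts as the weight of the virtual pixel; the function name `blend_pixel` is hypothetical, and the patent may derive the weight from the alpha values of both pixels rather than just one.

```python
def blend_pixel(real: float, virtual: float, alpha: float) -> float:
    """Weighted average of a real (camera) pixel and a virtual pixel.

    Hypothetical sketch: `alpha` is assumed to be the weight of the
    virtual pixel, so 1.0 shows only virtual content and 0.0 shows
    only the camera image.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return alpha * virtual + (1.0 - alpha) * real
```

For example, `blend_pixel(100, 200, 0.25)` gives 125.0, a result that is three-quarters camera and one-quarter virtual.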

The alpha value for the real pixel can be produced by downsampling the full camera image, creating a stencil based on the camera image, and producing a matting parameter matrix from both the downsampled image and the stencil. This matting parameter matrix can then be upsampled to produce the alpha for the pixel value.
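The math behind the matting parameter matrix isn't spelled out here. One well-known technique with the same shape is the fast guided filter, which computes linear coefficients on a downsampled image, upsamples them, and applies them at full resolution. The sketch below uses that technique as a stand-in, not as Apple's method; the brightness-threshold stencil, the function names, and the parameters `s`, `r`, `eps`, and `threshold` are all hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image, values in [0, 1]

def downsample(img: Image, s: int) -> Image:
    """Average non-overlapping s x s blocks (dimensions assumed divisible by s)."""
    h, w = len(img), len(img[0])
    return [[sum(img[y * s + dy][x * s + dx] for dy in range(s) for dx in range(s)) / (s * s)
             for x in range(w // s)] for y in range(h // s)]

def box_mean(img: Image, r: int) -> Image:
    """Mean over a (2r+1) x (2r+1) window, clipped at the image borders."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - r), min(h, y + r + 1))
                    for i in range(max(0, x - r), min(w, x + r + 1))]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out

def elementwise(f, *imgs: Image) -> Image:
    """Apply f pixel by pixel across same-sized images."""
    return [[f(*px) for px in zip(*rows)] for rows in zip(*imgs)]

def matting_parameters(guide: Image, stencil: Image, r: int, eps: float) -> Tuple[Image, Image]:
    """Guided-filter coefficients (a, b) so that alpha ~= a * guide + b locally."""
    mean_i = box_mean(guide, r)
    mean_p = box_mean(stencil, r)
    mean_ip = box_mean(elementwise(lambda i, p: i * p, guide, stencil), r)
    mean_ii = box_mean(elementwise(lambda i: i * i, guide), r)
    # a = cov(I, p) / (var(I) + eps); b = mean(p) - a * mean(I)
    a = elementwise(lambda mip, mi, mp, mii: (mip - mi * mp) / (mii - mi * mi + eps),
                    mean_ip, mean_i, mean_p, mean_ii)
    b = elementwise(lambda mp, aa, mi: mp - aa * mi, mean_p, a, mean_i)
    return box_mean(a, r), box_mean(b, r)

def upsample(img: Image, s: int) -> Image:
    """Nearest-neighbour upsampling by an integer factor s."""
    return [[img[y // s][x // s] for x in range(len(img[0]) * s)]
            for y in range(len(img) * s)]

def real_pixel_alpha(camera: Image, s: int = 2, r: int = 1,
                     eps: float = 1e-3, threshold: float = 0.5) -> Image:
    # Hypothetical stencil: pixels brighter than `threshold` count as foreground.
    stencil = elementwise(lambda v: 1.0 if v > threshold else 0.0, camera)
    small_cam, small_stencil = downsample(camera, s), downsample(stencil, s)
    # The costly filtering runs only on the downsampled images.
    a, b = matting_parameters(small_cam, small_stencil, r, eps)
    a_full, b_full = upsample(a, s), upsample(b, s)
    # Apply the upsampled coefficients to the full-resolution image, clamped to [0, 1].
    return elementwise(lambda aa, bb, i: min(1.0, max(0.0, aa * i + bb)),
                       a_full, b_full, camera)
```

On a 4x4 image whose left half is dark and right half bright, the resulting alpha is close to 0 on the left and close to 1 on the right. The saving comes from doing the windowed statistics on the smaller image; only the final `a * I + b` step runs at full resolution.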

This alpha value can also be used to create color component pixel values for a particular pixel, enabling individual colors to be mixed rather than the entire pixel value.
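Extending the weighted average to individual color components, the hypothetical `blend_rgb` function below gives the red, green, and blue channels their own alpha values, so one channel can favor the virtual image while another keeps the camera value. Treating each channel's alpha as the weight of the virtual pixel is an assumption made for illustration.

```python
from typing import Tuple

RGB = Tuple[float, float, float]

def blend_rgb(real: RGB, virtual: RGB, alpha: RGB) -> RGB:
    """Blend each color channel with its own alpha.

    Hypothetical sketch: alpha[c] is assumed to be the weight of the
    virtual pixel's channel c; 1.0 takes the virtual value, 0.0 keeps
    the camera value.
    """
    r, g, b = (a * v + (1.0 - a) * c for c, v, a in zip(real, virtual, alpha))
    return (r, g, b)
```

With per-channel alpha values of (0.5, 1.0, 0.0), the red channel is an even mix, green comes entirely from the virtual pixel, and blue comes entirely from the camera.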

With this technique, it is thought that the bandwidth required to transmit the image to the display could be "less than one-tenth" of the data generated by the camera alone.

The patent lists its inventors as Pedro Manuel Da Silva Quelhas and Stefan Auer, and was filed on August 14, 2018.

Apple files numerous patent applications every week. While a patent indicates an area of interest for the company's research and development efforts, it doesn't guarantee that the concept will appear in a future product or service.

Previous work

Apple has made numerous filings relating to AR and VR headsets in the past, including a system to stop VR users from bumping into objects, offloading graphics processing to wireless base stations, and using an iPhone rather than the headset's onboard sensors to map a room for AR, among other ideas.

Malcolm Owen
