Apple may use sound to identify people or objects, and where they are

New research shows Apple is investigating how HomePod, or other devices, can use audio to estimate the distance to a speaking user, and also to identify the sounds around it.

Apple's HomePod is already very good at hearing your voice, even when it is playing music loudly. Two separate new patent applications show that Apple wants to improve on this, and to take devices' ability to listen to a new level.

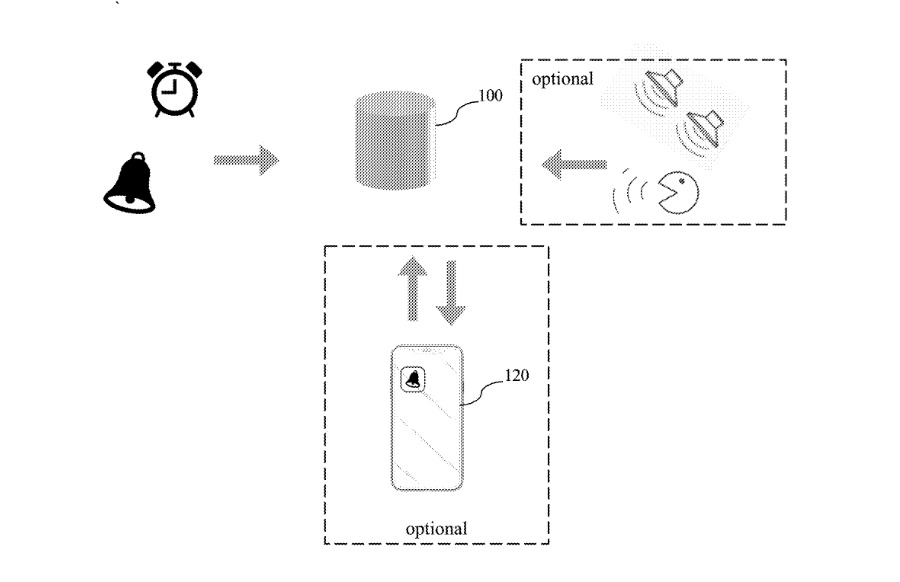

One of them, "Learning-Based Distance Estimation," is concerned with using audio not just to recognize a user, but to figure out where they are.

"It is often desirable for the device to estimate the distance from the device to the user using [its] compact microphone array," says the patent application. "For example, the device may adjust the playback volume or the response from a smart assistance device based on the estimated distance of the user from the device."

"[So] if the user is very close to the device, music or speech will not be played at a high volume," it continues. "Alternatively, if the user is far away, media playback or the response from a smart assistant device may be adjusted to a louder volume."
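As a rough illustration of that volume adjustment, the sketch below maps an estimated distance to a playback level by linear interpolation. The function name, the distance range, and the volume limits are all assumptions made for this example; the patent application does not specify a mapping.

```python
def playback_volume(distance_m, near_m=0.5, far_m=6.0, min_vol=0.2, max_vol=0.9):
    """Map an estimated user distance (metres) to a volume level.

    All default values are hypothetical and chosen only for illustration.
    """
    if far_m <= near_m:
        raise ValueError("far_m must be greater than near_m")
    # Clamp the estimate into the supported range.
    d = min(max(distance_m, near_m), far_m)
    # Interpolate linearly: close users get min_vol, distant users get max_vol.
    fraction = (d - near_m) / (far_m - near_m)
    return min_vol + fraction * (max_vol - min_vol)


if __name__ == "__main__":
    for d in (0.3, 2.0, 8.0):
        print(f"{d} m -> volume {playback_volume(d):.2f}")
```

A real device would presumably smooth the estimate over time so the volume does not jump as the user moves, but that is beyond this sketch.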

This same idea is also meant to feed into Apple's currently impressive but somewhat flawed system in which all your devices try to determine which one you said "Hey, Siri" to.

"In applications where there are multiple devices," says Apple, "the devices may coordinate or arbitrate among themselves to decide which one or more devices should reply to a query based on the distance from each device to the user."
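One simple way to picture that arbitration is to let the device with the smallest distance estimate answer. This is only a sketch under that assumption: the device names and the `choose_responder` helper are hypothetical, and the application leaves room for more than one device to reply.

```python
def choose_responder(estimates):
    """Pick the device closest to the user.

    estimates maps a device name to its estimated distance in metres,
    or None if that device did not hear the query.
    """
    heard = {name: d for name, d in estimates.items() if d is not None}
    if not heard:
        return None  # no device heard the user
    # The device reporting the smallest distance replies.
    return min(heard, key=heard.get)


if __name__ == "__main__":
    print(choose_responder({"HomePod": 3.2, "iPhone": 0.4, "Apple Watch": None}))
```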

Apple proposes both simple and more complex solutions, which may all be used in concert. The simpler one is having the device do what the HomePod does and map out its environment first.

Then it effectively has a "grid of measured or simulated points in an acoustic environment." In that case, a sound can be compared to this "grid" to approximate where the person speaking is.
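A comparison against such a grid could, for instance, be a nearest-neighbour lookup. The sketch below assumes each grid point stores a small vector of acoustic features; the feature values and the `nearest_grid_point` helper are invented for illustration and are not taken from the patent application.

```python
import math


def nearest_grid_point(grid, observed):
    """Return the grid position whose stored features best match the observation.

    grid is a list of (position, feature_vector) pairs measured or simulated
    in advance; observed is the feature vector of the incoming sound.
    """
    best_pos, best_dist = None, math.inf
    for pos, features in grid:
        # Euclidean distance between stored and observed features.
        dist = math.dist(features, observed)
        if dist < best_dist:
            best_pos, best_dist = pos, dist
    return best_pos


if __name__ == "__main__":
    # Hypothetical grid: positions in metres, two made-up features per point.
    grid = [((0, 0), [1.0, 0.2]), ((1, 0), [0.6, 0.5]), ((2, 0), [0.3, 0.9])]
    print(nearest_grid_point(grid, [0.55, 0.5]))
```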

However, Apple doesn't think this is good enough on its own, and also says that it's of least use with Siri. That's because a person may be moving as they speak, and because they expect a fast response, which this approach isn't suited to.

Alternatively, "if at least two microphone arrays are available," then "the distance of the voice source may be estimated using a triangulation method."
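The application does not spell out which triangulation method it has in mind. One common form, assumed here, has each array report the direction the voice came from, and the speaker is placed where the two bearings cross. The positions, angles, and the `triangulate` helper below are hypothetical.

```python
import math


def triangulate(pos_a, bearing_a, pos_b, bearing_b):
    """Locate a source from two bearings (radians) measured at known 2D positions.

    Returns (source_position, distance_from_a, distance_from_b).
    """
    dir_a = (math.cos(bearing_a), math.sin(bearing_a))
    dir_b = (math.cos(bearing_b), math.sin(bearing_b))
    # 2D cross product of the two directions; zero means parallel bearings.
    denom = dir_a[0] * dir_b[1] - dir_a[1] * dir_b[0]
    if abs(denom) < 1e-9:
        raise ValueError("bearings are parallel; no unique intersection")
    dx, dy = pos_b[0] - pos_a[0], pos_b[1] - pos_a[1]
    # Distance along array A's bearing to the intersection point.
    t = (dx * dir_b[1] - dy * dir_b[0]) / denom
    source = (pos_a[0] + t * dir_a[0], pos_a[1] + t * dir_a[1])
    return source, math.dist(pos_a, source), math.dist(pos_b, source)


if __name__ == "__main__":
    # Two arrays 4 m apart, hearing the voice at 45 and 135 degrees.
    source, dist_a, dist_b = triangulate(
        (0.0, 0.0), math.radians(45), (4.0, 0.0), math.radians(135)
    )
    print(source, dist_a, dist_b)
```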

The key part there, though, is the need for at least two devices with microphones. Consequently, Apple has another, more involved solution that uses a "learning based system such as a deep neural network (DNN)," and doesn't need multiple devices.

"The deep learning system may estimate the distance of the speech source at each time frame based on speech signals received by a compact microphone array," says the patent application.

What this DNN system could do is determine what is speech and what is background noise. Then it can calculate "information about the direct signal propagation" and also the "reverberation effect and noise."

This application is credited to three inventors, including Mehrez Souden and Joshua D. Atkins. Their previous related work includes granted patents on how to record full spatial sound using fewer microphones than normal.

That earlier work is specifically concerned with audio in Apple AR, whereas this latest patent application is about physical devices in real environments. If it seems a lot of trouble to go to just to avoid blasting someone's ears off with a too-loud HomePod mini, though, there is much more to it.

"For example, assistive and augmented hearing devices such as hearing aids may enhance the audio signals based on the distance of the voice source," says this patent application.

That's also what the second newly revealed patent application concentrates on.

Identifying important sounds

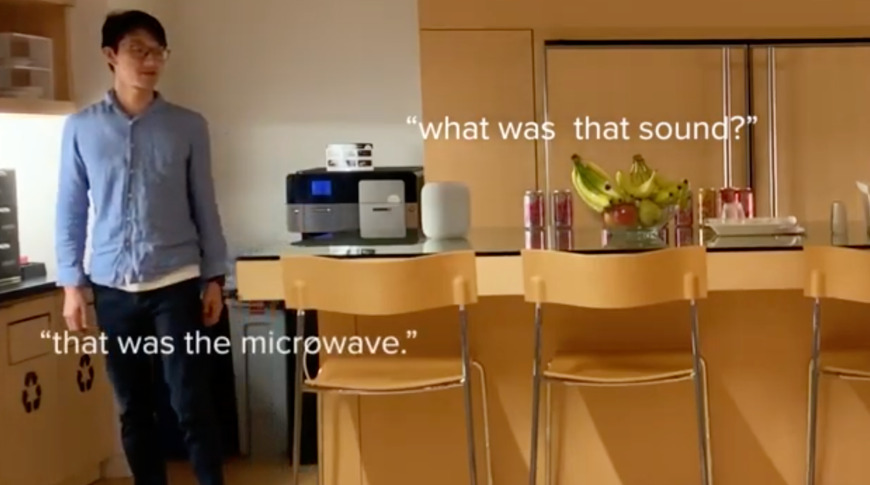

"Systems and Methods for Identifying an Acoustic Source Based on Observed Sound" is about having some devices recognize the sounds of other ones, and react to them for us.

"Many home appliances, such as, for example, microwave ovens, washing machines, dishwashers, and doorbells, make sounds to alert a user that a condition of the appliance has changed," begins the patent application.

"However, users may be unable to hear an audible alert emitted by a home appliance for any of a variety of reasons," it continues. "For example, a user may have a hearing impairment, a user may be outside or in another room, or the appliance may emit a sound obscured by a household acoustic scene."

It needn't be your egg timer going off, either. This patent application is equally concerned with sounds in "public spaces (government buildings), semi-public spaces (office lobbies), and private spaces (residences or office buildings)."

"[These] also have acoustic scenes that can contain sounds that carry information," it says. "For example, a bell, chime, or buzzer may indicate a door has been opened or closed, or an alarm may emit a siren or other sound alerting those nearby of a danger (e.g., smoke, fire, or carbon monoxide)."

Overall, this patent application chiefly details methods by which a device can be "trained" to recognize "commonly occurring sounds." It will then keep listening out for any of them, and when it hears one, can emit "a selected output responsive to determining that the sound is present in the acoustic scene."

In other words, if it is your egg timer going off, maybe the device can make your Apple Watch tap you on the wrist. Or if it's a burglar alarm, the same device could notify the authorities.
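The step from a recognized sound to a "selected output" can be pictured as a lookup table guarded by a confidence threshold. Everything in the sketch below is an assumption for illustration: the sound labels, the action names, the threshold, and the idea that a classifier hands back per-label confidence scores.

```python
# Hypothetical mapping from recognized sound to the output a device selects.
ACTIONS = {
    "egg_timer": "tap_apple_watch",
    "doorbell": "show_notification",
    "burglar_alarm": "notify_authorities",
}


def select_output(scores, threshold=0.8):
    """Choose an action from classifier scores (label -> confidence in 0..1).

    Returns None when nothing is recognized confidently enough.
    """
    if not scores:
        return None
    # Take the most likely label reported by the (assumed) sound classifier.
    label = max(scores, key=scores.get)
    if scores[label] < threshold:
        return None  # not confident the sound is present
    # Unknown labels produce no output.
    return ACTIONS.get(label)


if __name__ == "__main__":
    print(select_output({"egg_timer": 0.93, "doorbell": 0.04}))
    print(select_output({"burglar_alarm": 0.55}))
```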

"Sound carries a large amount of contextual information," says Apple. "Recognizing commonly occurring sounds can allow electronic devices to adapt their behavior or to provide services responsive to an observed context (e.g., as determined from observed sound), increasing their relevance and value to users while requiring less assistance or input from the users."

This second patent application is credited to four inventors, including Daniel C. Klinger. His previous work includes a patent application for taking secure phone calls over HomePod, or other devices.

William Gallagher

