Apple's future iPhones may offer 3D recording of places, objects

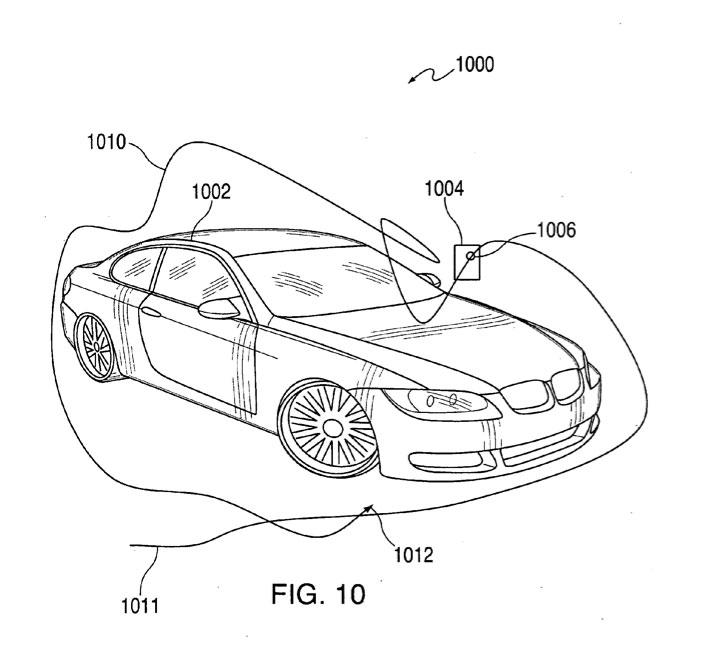

Two new patent applications from Apple revealed this week by the U.S. Patent and Trademark Office describe technology that would generate a three-dimensional model of an object or place based on a recording. Users could then navigate the 3D space "in an order other than that of the recording."

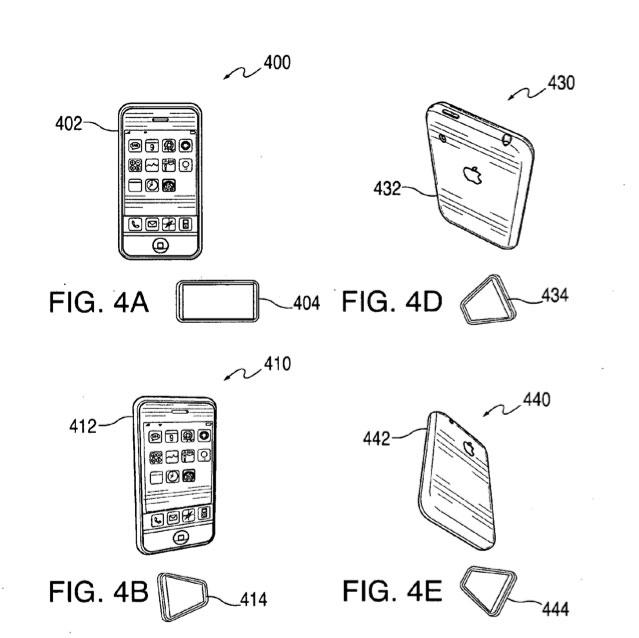

One application, entitled "Generating a Three-Dimensional Model using a Portable Electronic Device Recording," deals specifically with the recording aspect. It describes a portable device, such as an iPhone, that could capture more than just video, drawing on other components such as motion sensors and GPS. This would allow the software to adjust the displayed portion of a three-dimensional model to reflect the movement of the device.
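The filing, as described here, does not spell out how device movement maps onto the displayed model. The following is a minimal sketch under loudly stated assumptions: motion-sensor readings have already been reduced to a forward displacement and a heading change, and the hypothetical `ViewState` and `apply_device_motion` names stand in for whatever Apple's software actually uses. It simply moves a virtual camera through the model the same way the physical device moved.

```python
import math
from dataclasses import dataclass


@dataclass
class ViewState:
    """Hypothetical virtual camera: position in model space (metres) and heading (radians)."""
    x: float = 0.0
    y: float = 0.0
    heading: float = 0.0


def apply_device_motion(view: ViewState, forward_m: float, turn_rad: float) -> ViewState:
    """Move the virtual camera the way the physical device moved.

    Assumption: sensor data has already been reduced to a heading change
    (applied first) followed by a forward displacement along the new heading.
    """
    heading = (view.heading + turn_rad) % (2 * math.pi)
    return ViewState(
        x=view.x + forward_m * math.cos(heading),
        y=view.y + forward_m * math.sin(heading),
        heading=heading,
    )


# Walk 2 m forward, then turn 90 degrees left and walk 1 m.
view = apply_device_motion(ViewState(), 2.0, 0.0)
view = apply_device_motion(view, 1.0, math.pi / 2)
```

After both steps the camera sits at roughly (2, 1) facing 90 degrees from its starting direction; a renderer would then draw whatever part of the model is visible from that pose.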

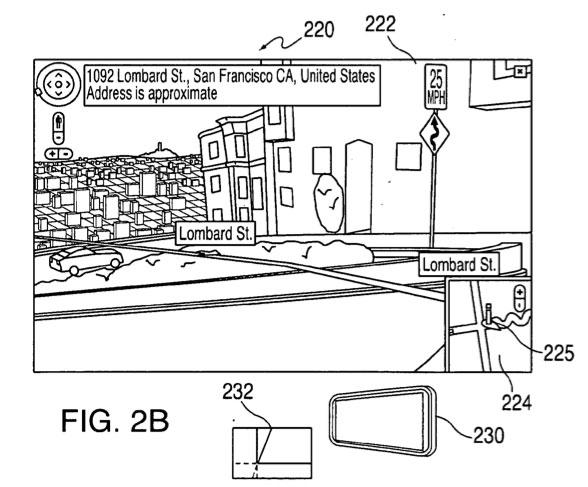

"By walking with the device in the user's real environment, a user can virtually navigate a representation of a three-dimensional environment," the application says. The technology sounds like a cross between augmented reality applications such as Layar, which show the world in real time through the iPhone camera and overlay digital information on top of the picture, and navigation services like Google Maps, whose Street View feature lets users view road intersections and landmarks without physically being there.

These 3D renderings could be used in software as practical as a mapping application, or something simply for fun, like a video game.

If the feature were enabled on millions of devices, the resulting 3D models could be uploaded over the Internet and shared with other users, allowing a sort of "hive mind" approach to generating comprehensive and up-to-date renderings of the real world.

The accompanying application revealed this week is entitled "Three Dimensional Navigation Using Deterministic Movement of an Electronic Device." It deals with navigating a 3D space generated from a user's recording. Again, this application describes features similar to those of existing augmented reality applications, but takes the idea a step further by recording the information for later playback.

"By walking with the device in the user's real environment, a user can virtually navigate a representation of a three-dimensional environment," the document states. "In some embodiments, a user can record an object or environment using an electronic device, and tag the recorded images or video with movement information describing the movement of the device during the recording. The recorded information can then be processed with the movement information to generate a three-dimensional model of the recorded environment or object."
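The tagging step quoted above can be illustrated with a small sketch. The applications, as quoted, do not say how frames and movement data are matched, so this assumes one simple scheme: each video frame is paired with the most recent motion sample taken at or before its timestamp. The `MotionSample`, `TaggedFrame` and `tag_frames` names are hypothetical, and the later step of building a 3D model from the tagged frames is not shown.

```python
from bisect import bisect_right
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MotionSample:
    t: float                               # seconds since recording started
    position: Tuple[float, float, float]   # hypothetical device position estimate
    heading: float                         # radians


@dataclass
class TaggedFrame:
    frame_index: int
    t: float
    motion: MotionSample


def tag_frames(frame_times: List[float], samples: List[MotionSample]) -> List[TaggedFrame]:
    """Attach to each frame the latest motion sample at or before its timestamp.

    Assumes `samples` is non-empty and sorted by time. Frames captured before
    the first sample fall back to that first sample.
    """
    if not samples:
        raise ValueError("need at least one motion sample")
    times = [s.t for s in samples]
    tagged = []
    for i, ft in enumerate(frame_times):
        j = max(bisect_right(times, ft) - 1, 0)
        tagged.append(TaggedFrame(i, ft, samples[j]))
    return tagged
```

Using `bisect` keeps each lookup logarithmic in the number of motion samples, which matters when sensors report far more often than the camera captures frames.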

Both applications were originally filed on January 28, 2009. They are credited to Richard Tsai, Andrew Just and Brandon Harris.

Neil Hughes

Neil Hughes

William Gallagher

William Gallagher

Andrew Orr

Andrew Orr

Sponsored Content

Sponsored Content

Malcolm Owen

Malcolm Owen

Mike Wuerthele

Mike Wuerthele