Apple hiring more Siri engineers, working on evolving API, features, languages

Now hiring for Siri

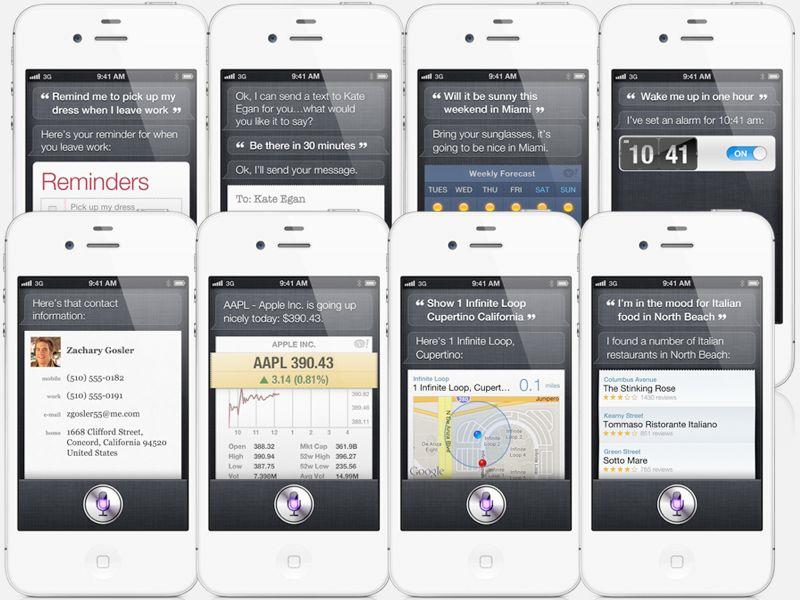

"We are looking for an engineer to join the team that implements the UI for Siri," one job posting notes.

"You will primarily be responsible for implementing the conversation view and its many different actions. This includes defining a system that enables a dialog to appear intuitive, a task that involves many subtle UI behaviors in a dynamic, complex system. You will have several clients of your code, so the ability to formulate and support a clear API is needed."

A second job posting elaborates, "This is a broad-ranging task - we take every application that Siri interacts with, distill it down to fundamentals, and implement that application's UI in a theme fitting with Siri. Consider it an entire miniature OS within the OS, and you get a good idea of the scope!"

Apple also has three positions listed for "Language Technologies Engineers," tasked with "bringing new languages to Siri, Apple’s new personal assistant technology for iPhone, as well as other cloud based services."

Against it before they were before it

Apple's Siri group has already been described as "one of the largest software teams at Apple," and the series of new positions indicates the project is only growing, both in scope and in importance. Apple is building out Siri both as a strategic feature unique to the iPhone 4S, and as a cloud service that its job listings suggest could find applications among its other products, including the Mac.

After top managers at both Google's Android and Microsoft's Windows Phone initially ridiculed Siri as not being "super useful" (Google's Andy Rubin insisted that he didn't "believe that your phone should be an assistant"), leading developers at both Google and Microsoft have since suggested that they are actually ahead of Apple's Siri in terms of voice technology.

Prior to the release of the iPhone 4S, both of their platforms actually were ahead, at least in offering OS-integrated support for voice recognition. Across iOS devices apart from the iPhone 4S, Apple is still behind, offering only the basic Voice Commands with no support for voice transcription outside of third-party apps. However, Siri breaks new ground far in front of where Google and Microsoft were playing, and Apple is using the new technology to make the iPhone 4S its fastest-selling smartphone ever.

Baby steps for Siri

Building functional voice-based services isn't just a matter of obtaining state-of-the-art recognition algorithms. Siri was released in "beta," an atypical move for Apple, in part because the way to improve voice recognition is to have it in wide use, learning from the tasks it is given.

Benoit Maison, who worked for IBM in voice recognition for almost six years, noted in a blog entry today that "it's not just easier" to improve voice recognition while it’s being widely used, "it's the only way!"

"We participated in DARPA-sponsored research projects, fields trials, and actual product development for various applications: dictation, call centers, automotive, even a classroom assistant for the hearing-impaired," Maison writes. "The basic story was always the same: get us more data! (data being in this case transcribed speech recordings)."

He adds, "some researchers have argued that most of the recent improvements in speech recognition accuracy can be credited to having more and better data, not to better algorithms." By having Siri in the wild responding to actual voice requests from real users, Apple is collecting a treasure trove of information it can use to make Siri even better.

Collecting voices to improve results

Google launched its telephone-based GOOG-411 service in 2007 to provide free, automated, voice-based directory assistance over the phone. After gathering enough data through the service, Google shut it down last year. Nuance similarly offers a free voice dictation app that provides the company with a way to sample voices. Microsoft, however, is at a disadvantage with Windows Phone because its user base is extremely small and appears not to be gaining any traction in the market, limiting the volume and range of real-world samples it can use to improve its service.

While Google has added Voice Actions to Android as a curiosity, Apple's Siri not only signals an intent to deliver an entirely new natural voice interface complementing the multitouch screen of iOS devices, but also threatens Google's middleman status as a search engine. With users performing mobile tasks via apps and with Siri via voice, there's little opportunity left for Google to sell search placement via a conventional page of web results, the area where the company makes most of its money.

Further, Google can't copy Siri on Android without similarly giving up its paid search business model there, and it can't deeply integrate a Siri of its own into iOS, as the only public API for apps on iOS is sandboxed and unable to tightly integrate with other services. This gives Apple a competitive edge with Siri as a hardware maker, rather than solely a licensed platform vendor like Google or Microsoft.

The first one isn't free

Several months prior to Apple's release of Siri in iOS 5, reader Jonathan Truelsen in Denmark reported to AppleInsider that a small team of Americans was recruiting adult native speakers to record common verbal commands in their language, earning a stipend for the 2.5-hour recording session.

The group, promoted via a Facebook event as "the voice project," was not advertised as being sponsored by Apple, but one person who was involved with the project did admit that the company was conducting the sessions, and that the recordings might be incorporated into iOS 5. The recordings were reportedly performed with two iPhone 3G units using Bluetooth headsets.

The commands recorded included checking flight statuses; going to the Internet; checking signal strength; turning Bluetooth on and off; finding local companies, restaurants, pubs and cafés; asking scientific and history questions; calling phone numbers and contacts; and sending an SMS to a contact or number. "Sometimes you had [to] make up your own text," Truelsen stated.

After collecting enough paid samples for launch languages, Apple brought Siri to market in German, French and English with variants targeting American, English and Australian accents. Apple plans to expand the languages Siri can understand (including languages like Danish that the company has already apparently paid to sample), but is also working to expand its core functionality, including the range of external services it can query for information.

Apple has already launched at least one external app (Find My Friends) that ties into Siri, even though the app isn't bundled in iOS 5 or shipping on the iPhone 4S. This suggests that the company has future plans to open up Siri to other third parties. Doing so will require user interface integration, however, just as Apple has added Siri-integrated displays and animations that put Notes, Calendars, Contacts, Maps and other connected services right into Siri's response page.

At the same time, Apple is also using iPhone 4S users' responses to hone the accuracy of Siri itself. And as it builds server capacity for Siri, it seems likely the company will take its service to other iOS devices and the desktop Mac platform as well, potentially even working Siri into the living room as a voice-based assistant for Apple TV, as some analysts have speculated.

"If the rumors of a speech-enabled Apple TV are true," Maison added, "then Siri will soon have other challenges. For example, far-field speech recognition is notoriously more difficult than with close-talking microphones. She had better take a head start with the iPhone 4S."

Daniel Eran Dilger
