Amazon is watching and reviewing Cloud Cam security footage

Amazon has been hit by claims it is infringing on the privacy of its customers with its smart home security cameras, with a new report alleging Amazon workers are being given a glimpse into the homes of Cloud Cam users, under the guise of training the retailer's artificial intelligence systems.

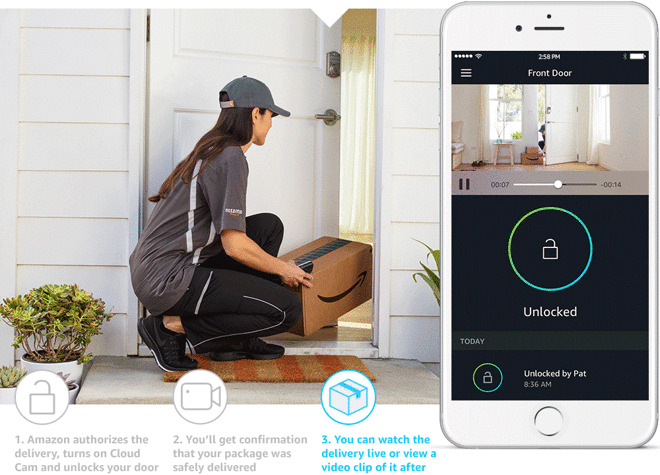

Introduced in 2017, the Cloud Cam is a 1080p cloud-connected webcam that can offer live feeds of its view to homeowners via the Echo Show, Echo Spot, Fire range of devices, and an iPhone app. Artificial intelligence is used to monitor the video for triggerable events, in order to provide alerts and highlight clips of activity, such as an attempted robbery or the movements of a pet.

A new report from Bloomberg claims that video captured, stored, and analyzed by Amazon is not necessarily seen by software alone. Support teams working on the product are also given the opportunity to view clips, in order to help the software better recognize what it is seeing.

"Dozens" of employees based in India and Romania are tasked with reviewing video snippets captured by Cloud Cam and telling the system whether what takes place in the clips is a threat or a false alarm, according to five people cited in the report who worked on the program or have direct knowledge of its workings.

Auditors can annotate up to 150 video recordings, each typically between 20 and 30 seconds in length. According to an Amazon spokeswoman, the clips sent for review come from employee testers, along with Cloud Cam owners who submit clips for troubleshooting, for example if there are inaccurate notifications or issues with video quality.

"We take privacy seriously and put Cloud Cam customers in control of their video clips," the spokeswoman said. Outside of video submitted for troubleshooting, she added, "only customers can view their clips."

The report goes on to assert that Amazon does not inform customers of the use of humans to train algorithms in the terms and conditions of the service.

Inappropriate or sensitive content does sometimes slip into the retraining collection, two sources advised. The sources also said that, on rare occasions, the review team has viewed clips of users having sex. Clips containing inappropriate content are flagged and then discarded so they are not used for training the AI.

The Amazon spokeswoman confirmed the disposal of the inappropriate clips. She did not, however, explain why such activity would appear in clips voluntarily submitted by users or Amazon staff.

Amazon does implement some security to prevent the clips from being shared, with workers in India located on a restricted floor where mobile phones are banned. Even so, one person familiar with the team admitted this policy didn't stop some employees from sharing footage with people not on the team.

Alexa, Siri, and Repetition

The story is reminiscent of an earlier Bloomberg report from April, which alleged Amazon used outside contractors and full-time employees to comb through snippets of audio from Echo devices to help train Alexa. In that instance, too, inappropriate content made its way to employees, including one recording employees believed was evidence of sexual assault. Despite Amazon having security policies in place, content was allegedly shared between employees.

The fallout from the report led to a similar claim from a "whistleblower" that Apple failed to adequately disclose its use of contractors to listen to anonymized Siri queries, though Apple does provide warnings in its software license agreements.

Both the report and the "whistleblowing" prompted Apple to temporarily suspend its Siri quality control program while it conducts a thorough review. Apple also advised that a future software update would enable users to opt in to the performance grading.

Amazon and Google then followed suit, with Google halting one global initiative for Google Assistant audio, while Amazon provided an option to opt out of human reviews of audio recordings for Alexa.

Unlike Amazon, Apple is unlikely to face a similar situation, as it doesn't currently offer automated monitoring of video feeds to customers. While Apple does allow video cameras to work through HomeKit, Apple does not itself perform any sort of quality control, triggerable actions, or other processing on live video feeds.

This does leave the door open to third parties that take advantage of HomeKit's video feed functionality to perform processing on video they collect, but not Apple. At least not at this time.

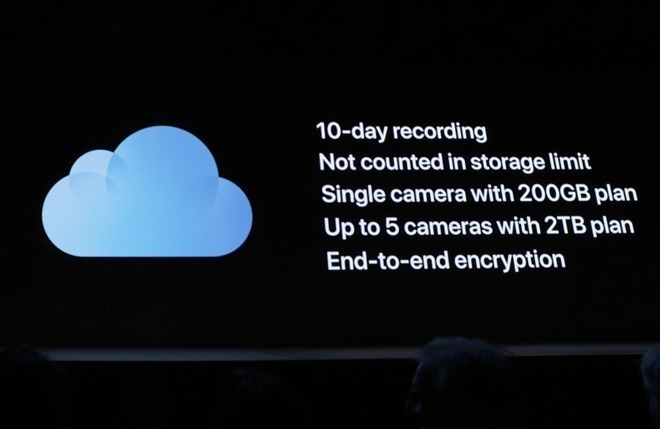

Apple's HomeKit Secure Video feature, which was originally announced in June but has yet to become available, will allow for the storage of HomeKit video in iCloud. As end-to-end encryption will be used to secure the video before it leaves the user's home, not even Apple will be able to access the video.

One video leak allegedly showing the feature in action indicates it will offer some artificial intelligence-based processing, including detecting motion events and identifying the main subject of the video, such as a dog or car. Given the highly privacy-focused nature of Apple's offering, including the encryption element, it is likely this processing is performed locally rather than in the cloud.

Malcolm Owen
