Apple expanding child safety features across iMessage, Siri, iCloud Photos

Apple is releasing a suite of features across its platforms aimed at protecting children online, including a system that can detect child abuse material in iCloud while preserving user privacy.

The Cupertino tech giant on Thursday announced new child safety features across three areas that it says will help protect children from predators and limit the spread of Child Sexual Abuse Material (CSAM). The official announcement closely follows reports that Apple would debut some type of system to curb CSAM on its platforms.

"At Apple, our goal is to create technology that empowers people and enriches their lives — while helping them stay safe," the company wrote in a press release.

Apple will implement new tools in Messages that let parents be better informed about how their children communicate online. The company will also use a new system that leverages cryptographic techniques to detect collections of CSAM stored in iCloud Photos and provide information to law enforcement. Apple is also working on new safety tools in Siri and Search.

"Apple's expanded protection for children is a game changer," said John Clark, CEO and President of the National Center for Missing & Exploited Children.

"With so many people using Apple products, these new safety measures have lifesaving potential for children who are being enticed online and whose horrific images are being circulated in child sexual abuse material. At the National Center for Missing & Exploited Children we know this crime can only be combated if we are steadfast in our dedication to protecting children. We can only do this because technology partners, like Apple, step up and make their dedication known. The reality is that privacy and child protection can co-exist. We applaud Apple and look forward to working together to make this world a safer place for children."

Apple says all three features have been designed with privacy in mind, allowing it to provide information about criminal activity to the proper authorities without threatening the private information of law-abiding users.

The new features will debut later in 2021 in updates to iOS 15, iPadOS 15, macOS Monterey, and watchOS 8.

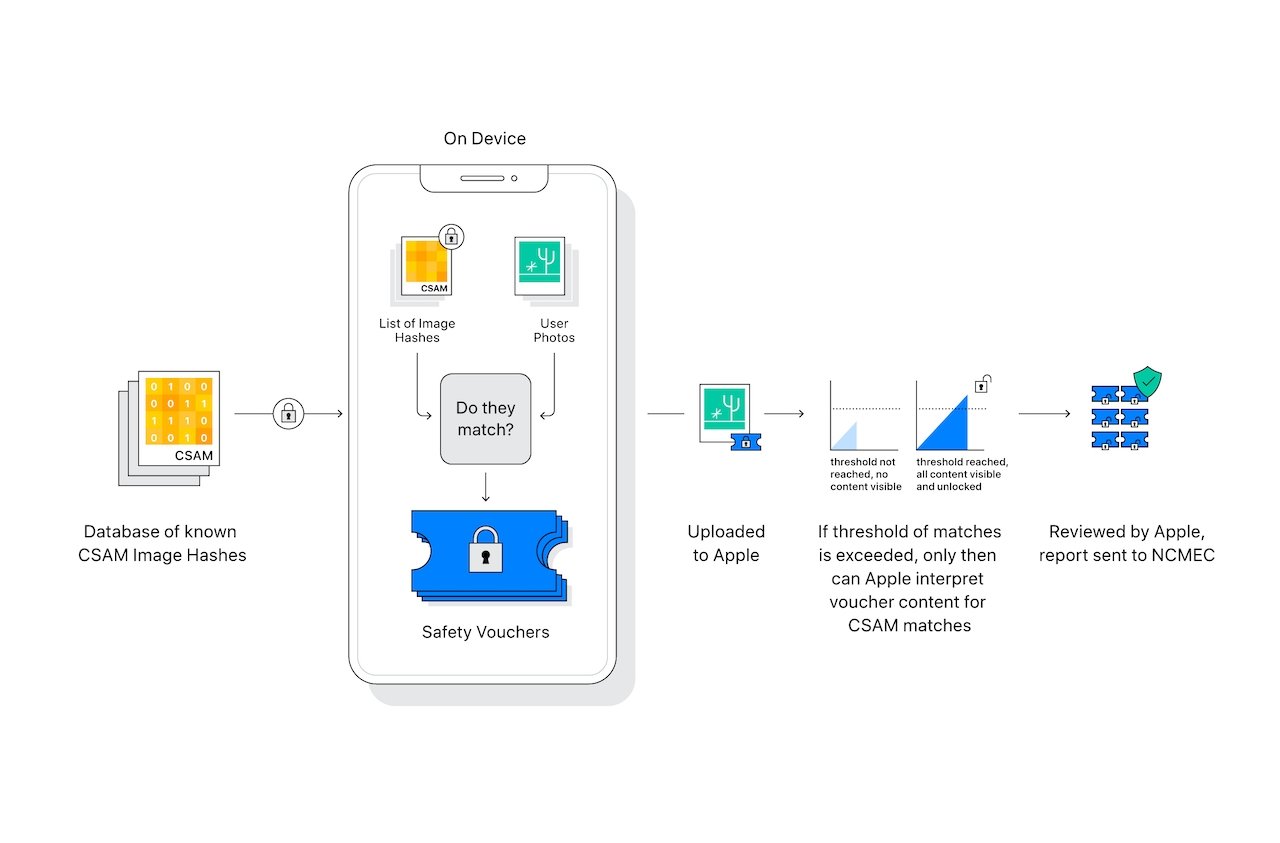

CSAM detection in iCloud Photos

The most significant new child safety feature Apple plans to debut focuses on detecting CSAM within iCloud Photos accounts.

If Apple detects collections of CSAM stored in iCloud, it will flag that account and provide information to NCMEC, which serves as a reporting center for child abuse material and works with law enforcement agencies across the U.S.

Apple says it isn't scanning images in the conventional sense here. Instead, it uses on-device intelligence to match photos against a database of known CSAM hashes provided by NCMEC and other child safety organizations. That database is converted into an unreadable set of hashes that is stored securely on a user's device.
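To make the matching idea concrete, here is a minimal Python sketch of checking photos against a set of known hashes. It is not Apple's implementation: SHA-256 is a stand-in that only matches byte-identical files, whereas Apple's technical materials describe a perceptual hash (NeuralHash) combined with the blinded, unreadable database described above. The function names are hypothetical.

```python
import hashlib


def image_hash(image_bytes: bytes) -> str:
    # Stand-in hash: SHA-256 matches only byte-identical files. A perceptual
    # hash, as Apple's system reportedly uses, would also match resized or
    # re-encoded copies of the same picture.
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(images: list[bytes], known_hashes: set[str]) -> int:
    """Count how many images hash to an entry in the known-hash database."""
    return sum(1 for img in images if image_hash(img) in known_hashes)


if __name__ == "__main__":
    database = {image_hash(b"known-image")}
    library = [b"holiday-photo", b"known-image", b"pet-photo"]
    print(count_matches(library, database))
```

Note that only the count of matches leaves this function; images that don't match the database are never inspected further, which mirrors Apple's claim that it learns nothing about non-matching photos.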

The actual method of detecting CSAM in iCloud Photos is complicated, and uses cryptographic techniques at every step to ensure accuracy while maintaining privacy for the average user.

Apple says a flagged account will be disabled only after a manual review confirms that it's a true positive. Once an account is disabled, the Cupertino company will send a report to NCMEC. Users will be able to appeal an account termination if they believe they were flagged in error.

The company reiterates that the feature only detects CSAM stored in iCloud Photos; it won't apply to photos stored strictly on-device. Additionally, Apple claims the system has less than a one in one trillion chance per year of incorrectly flagging a given account.

The feature also reports only users who have a collection of known CSAM stored in iCloud. A single matching image isn't enough to trigger it, which helps reduce the rate of false positives.
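Why a threshold cuts false positives can be sketched with a little probability. Assuming, purely for illustration, that each photo independently has a small chance p of falsely matching the database, the chance that an account with n photos accumulates at least t false matches is a binomial upper tail. The threshold and probabilities below are hypothetical values, not Apple's figures, and real-world false matches need not be independent.

```python
import math


def account_flagged(match_count: int, threshold: int) -> bool:
    """Flag an account only once its number of matches reaches the threshold."""
    return match_count >= threshold


def prob_false_flag(n_images: int, p_false_match: float, threshold: int) -> float:
    """Probability that at least `threshold` of `n_images` photos falsely match,
    assuming independent false matches (binomial upper tail).

    Terms are computed in log space so large photo libraries don't overflow.
    """
    if not 0.0 < p_false_match < 1.0:
        raise ValueError("p_false_match must be strictly between 0 and 1")
    if threshold <= 0:
        return 1.0
    if threshold > n_images:
        return 0.0
    log_p = math.log(p_false_match)
    log_q = math.log1p(-p_false_match)
    total = 0.0
    for k in range(threshold, n_images + 1):
        log_comb = (math.lgamma(n_images + 1)
                    - math.lgamma(k + 1)
                    - math.lgamma(n_images - k + 1))
        total += math.exp(log_comb + k * log_p + (n_images - k) * log_q)
    return min(total, 1.0)


if __name__ == "__main__":
    # Hypothetical numbers: 10,000 photos, one-in-a-million per-photo false match.
    for t in (1, 2, 5):
        print(t, prob_false_flag(n_images=10_000, p_false_match=1e-6, threshold=t))
```

When the expected number of false matches (n times p) is well below 1, each increase in the threshold shrinks the probability of wrongly flagging an account by orders of magnitude, which is the intuition behind requiring a collection rather than a single image.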

Again, Apple says that it will only learn about images that match known CSAM. It is not scanning every image stored in iCloud and won't obtain or view any images that aren't matched to known CSAM.

The CSAM detection system will only apply to U.S.-based iCloud accounts to start. Apple says it will likely roll the system out on a wider scale in the future.

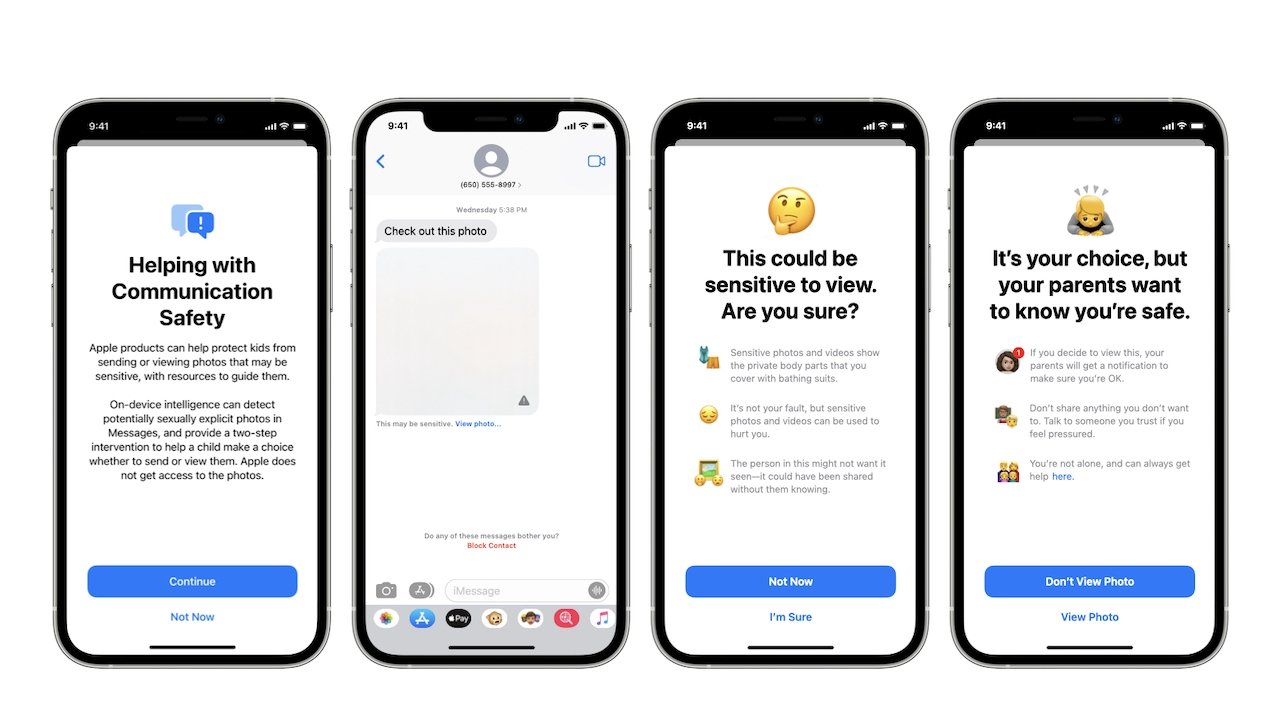

Communication safety

One of the new updates focuses on increasing the safety of children communicating online using Apple's iMessage.

The iMessage app will now show warnings to children and their parents when a child receives or sends sexually explicit photos.

If a child under 18 receives a sensitive image, it will be automatically blurred and the child will be presented with helpful resources. Apple also included a mechanism that lets children under 13 know that their parents will be notified if they view the image. Children between 13 and 17 will not be subject to parental notification when opening these images, and Communication Safety cannot be enabled on adult accounts (18 and older).
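The age rules above amount to a small decision table. The minimal Python sketch below encodes only what this article describes; the type and function names are hypothetical rather than Apple APIs, and it assumes the feature covers child accounts under 18.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensitiveImageHandling:
    """How a received sensitive image is treated, per the age rules described above."""
    feature_available: bool        # can Communication Safety be enabled at all?
    blur_and_show_resources: bool  # blur the image and offer help resources
    notify_parents_on_view: bool   # warn the child that viewing will notify parents


def handling_for_age(age: int) -> SensitiveImageHandling:
    if age < 0:
        raise ValueError("age must be non-negative")
    if age >= 18:
        # Adult accounts: the feature cannot be enabled.
        return SensitiveImageHandling(False, False, False)
    if age >= 13:
        # Ages 13 to 17: image is blurred with resources, no parental notification.
        return SensitiveImageHandling(True, True, False)
    # Under 13: blurred, and the child is told parents will be notified on viewing.
    return SensitiveImageHandling(True, True, True)


if __name__ == "__main__":
    for age in (10, 15, 30):
        print(age, handling_for_age(age))
```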

The system uses on-device machine learning to analyze images and determine whether they are sexually explicit. It's specifically designed so that Apple does not obtain or receive a copy of the image.

Siri and Search updates

In addition to the iMessage safety features, Apple is also expanding the tools and resources it offers in Siri and Search when it comes to online child safety.

For example, iPhone and iPad users will be able to ask Siri how they can report CSAM or child exploitation. Siri will then provide the appropriate resources and guidance.

Siri and Search are also being updated to step in if users perform searches or queries for CSAM. As Apple notes, "these interventions will explain to users that interest in this topic is harmful and problematic, and provide resources from partners to get help with this issue."

Maintaining user privacy

Apple has long touted that it goes to great lengths to protect user privacy. The company has even gone toe-to-toe with law enforcement over user privacy rights. That's why the introduction of a system meant to provide information to law enforcement has some security experts worried.

"This sort of tool can be a boon for finding child pornography in people's phones. But imagine what it could do in the hands of an authoritarian government?" https://t.co/nB8S6hmLE3

— Matthew Green (@matthew_d_green) August 5, 2021

However, Apple maintains that the potential for surveillance and abuse of the systems was a "primary concern" while developing them. It says it designed each feature to preserve privacy while countering CSAM and child exploitation online.

For example, the CSAM detection system was designed from the start to only detect CSAM — it doesn't contain mechanisms for analyzing or detecting any other type of photo. Furthermore, it only detects collections of CSAM over a specific threshold.

Apple says the system doesn't open the door to surveillance, and it doesn't do anything to weaken its encryption. The CSAM detection system, for example, only analyzes photos that are not end-to-end encrypted.

Security experts are still concerned about the ramifications. Matthew Green, a cryptography professor at Johns Hopkins University, notes that the hashes are based on a database that users can't review. He also warns that the hashes could be abused, for instance if a harmless image shared a hash with known CSAM.

"The idea that Apple is a 'privacy' company has bought them a lot of good press. But it's important to remember that this is the same company that won't encrypt your iCloud backups because the FBI put pressure on them," Green wrote.

Ross Anderson, a professor of security engineering at the University of Cambridge, called the system "an absolutely appalling idea" in an interview with the Financial Times. He added that it could lead to "distributed bulk surveillance of... our phones and laptops."

Digital rights group the Electronic Frontier Foundation (EFF) also published a blog post about the feature, saying it is "opening the door to broader abuses."

"All it would take to widen the narrow backdoor that Apple is building is an expansion of the machine learning parameters to look for additional types of content, or a tweak of the configuration flags to scan, not just children's, but anyone's accounts. That's not a slippery slope; that's a fully built system just waiting for external pressure to make the slightest change," wrote EFF's India McKinney and Erica Portnoy.

Mike Peterson