Apple 'poisoned the well' for client-side CSAM scanning, says former Facebook security chief

Alex Stamos, former Facebook security chief, says Apple's approach to CSAM scanning and to child exploitation over iMessage may have done more harm than good for the cybersecurity community.

Once iOS 15 and the other fall operating systems release, Apple will introduce a set of features intended to prevent child exploitation on its platforms. These implementations have created a fiery online debate surrounding user privacy and the future of Apple's reliance on encryption.

Alex Stamos is currently a professor at Stanford but previously served as security chief at Facebook. During his tenure there, he encountered countless families damaged by abuse and sexual exploitation.

He wants to stress the importance of technologies, such as Apple's, to combat these problems. "A lot of security/privacy people are verbally rolling their eyes at the invocation of child safety as a reason for these changes," Stamos said in a Tweet. "Don't do that."

The Tweet thread covering his views surrounding Apple's decisions is extensive but offers some insight into the matters brought up by Apple and experts alike.

In my opinion, there are no easy answers here. I find myself constantly torn between wanting everybody to have access to cryptographic privacy and the reality of the scale and depth of harm that has been enabled by modern comms technologies. Nuanced opinions are ok on this.

— Alex Stamos (@alexstamos) August 7, 2021

The nuance of the discussion has been lost on many experts and concerned internet citizens alike. Stamos says the EFF and NCMEC both reacted with little room for conversation, having used Apple's announcements as a stepping stone to advocate for their equities "to the extreme."

Information from Apple's side hasn't helped with the conversation either, says Stamos. For example, the leaked memo from NCMEC calling concerned experts "screeching voices of the minority" is seen as harmful and unfair.

Stanford hosts a series of conferences around privacy and end-to-end encryption products. Apple has been invited but has never participated, according to Stamos.

Instead, Apple "just busted into the balancing debate" with its announcement and "pushed everybody into the furthest corners" with no public consultation, said Stamos. The introduction of non-consensual scanning of local photos combined with client-side ML might have "poisoned the well against any use of client side classifiers."

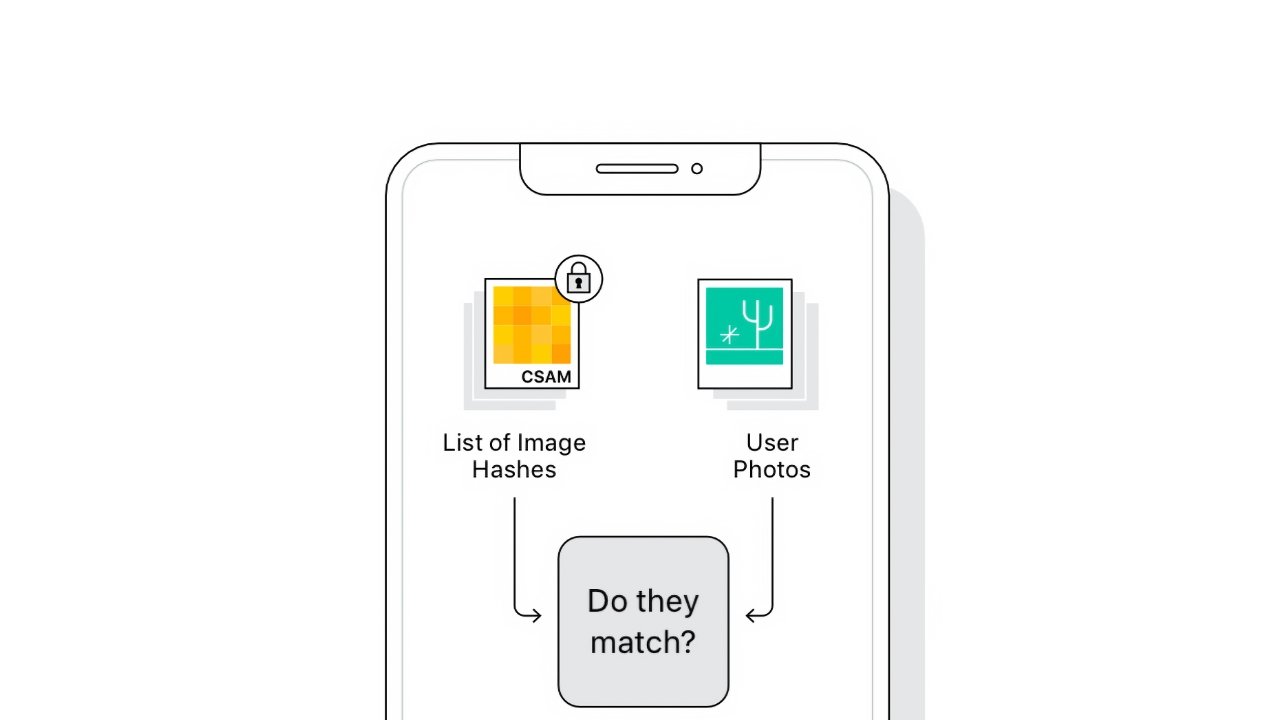

The implementation of the technology itself has left Stamos puzzled. He argues that on-device CSAM scanning is unnecessary unless it is in preparation for end-to-end encryption of iCloud backups. Otherwise, Apple could easily perform the scanning server-side.

The iMessage system doesn't offer any user-related reporting mechanisms either. So rather than alert Apple to users abusing iMessage for sextortion or sending sexual content to minors, the child is left with a decision — one Stamos says they are not equipped to make.

As a result, their options for preventing abuse are limited.

What I would rather see:

1) Apple creates robust reporting in iMessage

2) Slowly roll out client ML to prompt the user to report something abusive

3) Staff a child safety team to investigate the worst reports

— Alex Stamos (@alexstamos) August 7, 2021

At the end of the Twitter thread, Stamos mentioned that Apple could be implementing these changes due to the regulatory environment. For example, the UK Online Safety Bill and EU Digital Services Act could both have influenced Apple's decisions here.

Alex Stamos isn't happy with the conversation surrounding Apple's announcement and hopes the company will be more open to attending workshops in the future.

The technology itself will be introduced in the United States first, then rolled out on a per-country basis. Apple says it will not allow governments or other entities to coerce it into changing the technology to scan for other content, such as terrorism-related material.

Wesley Hilliard
