Outdated Apple CSAM detection algorithm harvested from iOS 14.3 [u]

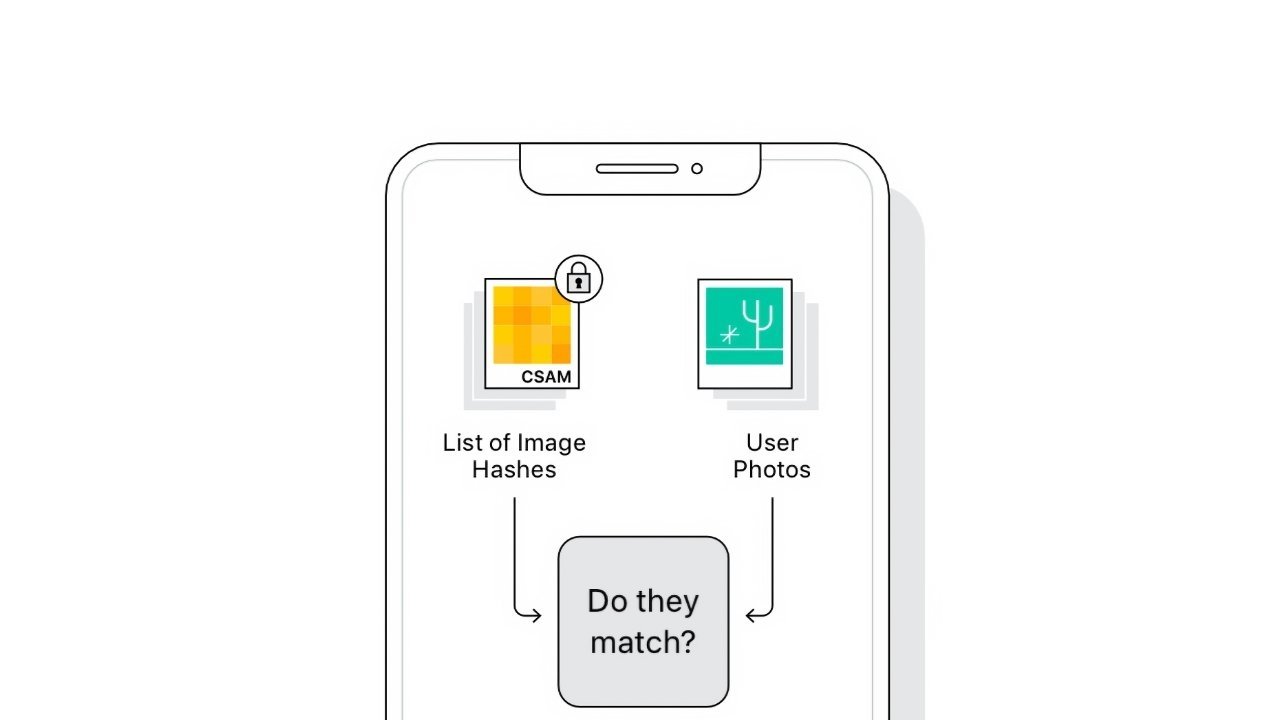

A Reddit user says they have discovered a version of Apple's NeuralHash algorithm, used for CSAM detection, in iOS 14.3. Apple says the extracted version is not current and won't be used.

The Reddit user, u/AsuharietYgvar, says the NeuralHash code was found buried in iOS 14.3. The user says they reverse-engineered the code and rebuilt a working model in Python that can be tested by passing it images.

The algorithm was found among hidden APIs, and the container for NeuralHash was called MobileNetV3. Those interested in viewing the code can find it in a GitHub repository.

The Reddit user claims this must be the correct algorithm for two reasons. First, the model files have the same prefix found in Apple's documentation, and second, verifiable portions of the code work the same as Apple's description of NeuralHash.

The discovered code appears to tolerate compression and image resizing, still producing a matching hash, but it does not appear to match images that have been cropped or rotated.
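To illustrate why a perceptual hash can survive resizing yet break under rotation, here is a minimal Python sketch. It uses a toy "average hash" as a stand-in and is not NeuralHash or any part of Apple's code. The function names, the synthetic gradient image, and the 8x8 hash size are all assumptions made for illustration.

```python
# Toy "average hash" for illustration only. This is NOT NeuralHash.
# Images are square grayscale grids stored as lists of rows.

def shrink(pixels, size=8):
    """Downscale a square image to size x size by averaging pixel blocks."""
    block = len(pixels) // size
    out = []
    for by in range(size):
        row = []
        for bx in range(size):
            total = 0
            for y in range(by * block, (by + 1) * block):
                for x in range(bx * block, (bx + 1) * block):
                    total += pixels[y][x]
            row.append(total / (block * block))
        out.append(row)
    return out

def average_hash(pixels, size=8):
    """Return size*size bits: 1 where a block is brighter than the mean."""
    flat = [v for row in shrink(pixels, size) for v in row]
    mean = sum(flat) / len(flat)
    return [1 if v > mean else 0 for v in flat]

def hamming(a, b):
    """Count the bit positions where two hashes differ."""
    return sum(x != y for x, y in zip(a, b))

def rotate90(pixels):
    """Rotate the image a quarter turn clockwise."""
    return [list(row) for row in zip(*pixels[::-1])]

# Synthetic 64x64 test image: a left-to-right brightness gradient.
image = [[x * 4 for x in range(64)] for _ in range(64)]
resized = shrink(image, 32)   # same picture at half the resolution
rotated = rotate90(image)     # same picture turned on its side

print(hamming(average_hash(image), average_hash(resized)))  # 0
print(hamming(average_hash(image), average_hash(rotated)))  # 32
```

In this toy case the half-size copy produces an identical hash, while the rotated copy differs in half of its 64 bits. That mirrors the resizing and rotation behavior reported for the extracted model, though the real algorithm derives its hash from a neural network rather than from block averages.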

Using this working Python script, GitHub users have begun examining how the algorithm works and whether it can be abused. For example, one user, dxoigmn, found that anyone who knows a hash stored in the CSAM database could create a fake image that produces the same hash.

If true, someone could make fake images that resembled anything but produced a desired CSAM hash match. Theoretically, a nefarious user could then send these images to Apple users to attempt to trigger the algorithm.
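The idea of forging an image to hit a known hash can be sketched in Python with a toy hash. This is not dxoigmn's method and not NeuralHash. The real attack targets a neural network, while this example uses a trivially invertible "average hash" and hypothetical function names, purely to show what producing the same hash from a different image means.

```python
# Toy second-preimage demo for illustration only. This is NOT NeuralHash.
# Images are 8x8 grayscale grids stored as lists of rows.

def average_hash(pixels):
    """Toy hash: one bit per pixel, 1 if brighter than the image mean."""
    flat = [v for row in pixels for v in row]
    mean = sum(flat) / len(flat)
    return [1 if v > mean else 0 for v in flat]

def forge_image(target_bits, size=8):
    """Build an image whose toy hash equals target_bits.

    White pixels stand for 1 bits and black pixels for 0 bits.
    This works for any target except the all-ones hash, which the
    toy hash can never output.
    """
    values = [255 if bit else 0 for bit in target_bits]
    return [values[r * size:(r + 1) * size] for r in range(size)]

# A stand-in "database" image; the attacker is assumed to know only its hash.
secret = [[(x * y * 7 + x * 13) % 256 for x in range(8)] for y in range(8)]
target = average_hash(secret)

forged = forge_image(target)
print(average_hash(forged) == target)  # True
print(forged == secret)                # False
```

The forged grid shares nothing with the original beyond its hash, which is the property that makes collisions a concern for any matching system built on hashes alone.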

Despite these discoveries, the information gleaned from this version of NeuralHash may not represent the final build. Apple has been building the CSAM detection algorithm for years, so it is safe to assume some version of the code exists for testing.

If what u/AsuharietYgvar has discovered is truly some version of the CSAM detection algorithm, it likely isn't the final version. For instance, this version struggles with crops and rotations, which Apple specifically said its algorithm would account for.

Theoretical attack vector, at best

Even if the attack vector suggested by dxoigmn is possible, several fail-safes stand between a generated image and a user having an iCloud account disabled and reported to law enforcement. There are numerous ways to get images onto a person's device, like email, AirDrop, or iMessage, but the user has to manually add photos to the photo library. There isn't a direct attack vector that injects images into the Photos app without requiring the physical device or the user's iCloud credentials.

Apple also has a human review process in place. The attack images aren't human-parseable as anything but a bit-field, and there is clearly no CSAM involved in the fabricated file.

Not the final version

The Reddit user claims they discovered the code but are not an ML expert. The GitHub repository with the Python script is available for public examination for now.

No one has discovered the existence of the CSAM database or matching algorithm in iOS 15 betas yet. Once it appears, it is reasonably possible that some crafty user will be able to extract the algorithm for testing and comparison.

For now, users can poke around this potential version of NeuralHash and attempt to find any issues. However, the work may prove fruitless, since this is only one part of the complex process used for CSAM detection and doesn't account for the voucher system or what occurs server-side.

Apple hasn't provided an exact release timeline for the CSAM detection feature. For now, it is expected to launch in a point release after the new operating systems debut in the fall.

Update: Apple in a statement to Motherboard said the version of NeuralHash discovered in iOS 14.3 is not the final version set for release with iOS 15.

Wesley Hilliard
