Apple working on how to read back iMessages in the sender's voice

A new Apple patent application describes converting an iMessage into a voice note played back in a voice that the user has created with samples of the sender's voice.

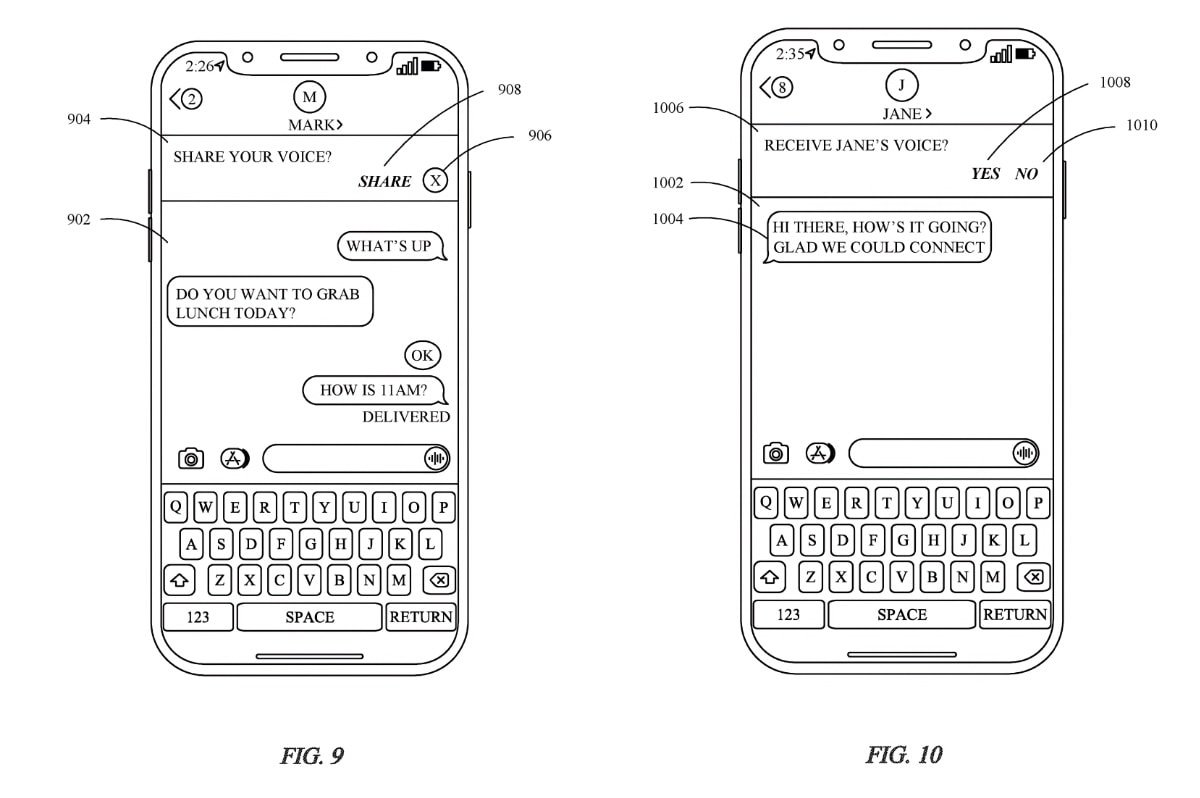

Apple users can already send audio recordings in iMessage or have Siri read text messages back to them, but the patent describes a way to have the device read a text message in the sender's voice instead of Siri's, using a voice file.

"The voice model is provided to a second electronic device," according to the patent. "In some examples, a message is received from a respective user of a second electronic device."

In other words, when someone sends an iMessage, they can choose to attach a voice file, which would be stored on the device. If they do, the recipient would be prompted to decide whether they want to receive both the message and the voice recording.

"In response to receiving the message, a voice model of the respective user is received," the patent reads. "Based on the voice model, an audio output corresponding to the received message is provided."

According to the patent, the iPhone in question would then build a Siri-like profile for the sender's voice and simulate it when reading that message and any future messages received from that sender. The voice simulation model could also be sent on its own so a person's contacts can download it before any messages arrive.

It would offer more personalization when friends and family text each other, instead of hearing Siri's voice when it reads messages. Couples could also hear messages in a more personal way, such as hearing "I love you" in their partner's voice.

The patent's inventors are Qiong Hu, Jiangchuan Li, and David A. Winarsky. Winarsky is Apple's director of text-to-speech technology, while Li is a senior Siri software engineer for machine learning at Apple, and Hu formerly worked on Siri at the company.

As usual with patents, it won't necessarily become a reality, but it's possible given Apple's recent work with artificial intelligence and voices. For example, with iOS 11, Apple switched Siri's voice from relying on recordings from voice actors to a text-to-speech model using machine learning.

In 2020, Apple acquired a company called Voysis that worked on improving natural language processing in virtual assistants. Voysis used WaveNet technology, which was introduced by Google's DeepMind program in 2016.

WaveNets are "deep generative models of raw audio waveforms" that can be used to generate speech that mimics any human voice.

Apple has also started using artificial intelligence to narrate specific audiobook genres instead of using humans. So it is entirely within the realm of possibility that an Apple device could eventually learn to read messages in a person's voice.

Andrew Orr
