Apple has shown continued interest in a new input method that would use advanced sensors to detect a user's gestures, movements, location and distance from the device, allowing a person to transition easily from physical input up close to gesture controls from afar.

Certain user interface elements could enlarge, simplify or disappear as users step farther away from their device.

The company's pursuit of a user-sensing, highly interactive computer system was detailed in a patent continuation published by the U.S. Patent and Trademark Office on Thursday. Entitled "Computer User Interface System and Methods," it describes sensors that could measure everything from the presence of a user to their location in the room to any gestures they may perform.

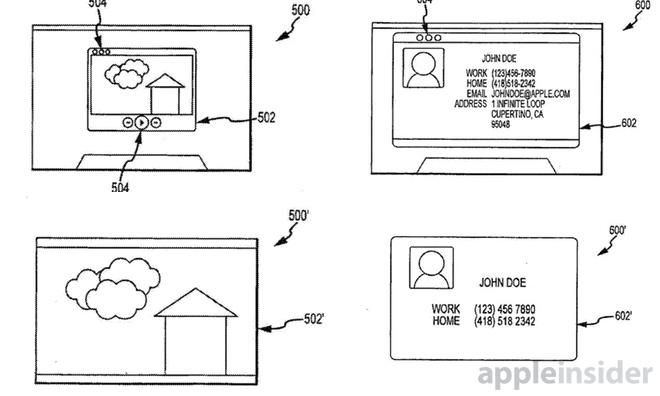

Perhaps most interesting is the filing's mention of a "user proximity context" that would modify a system's user interface based on how close the person is to the device.

The system described in Apple's patent filing would allow users to easily read and control a device from either up close or afar, dynamically adapting to the person's distance.

"An appearance of information displayed by the computer may be altered or otherwise controlled based on the user proximity context," the filing reads. "For example, a size and/or a content of the information displayed by the computer may be altered or controlled."

With this system, elements on the display, including text size, could be automatically increased or decreased based on how close the user is to the screen. The user interface could also adapt and change based on where a person is in relation to the device.

In another example given by Apple, a device could automatically transfer input controls from a mouse to hand gestures as a person steps away. In yet another scenario, a device would increase its screen brightness as the user moves farther away, allowing them to see the display more easily from a distance.
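To make the idea concrete, the three behaviors described above (larger text, a switch from mouse to gesture input, and a brighter screen at a distance) can be sketched as a single function of user distance. This is only an illustration, not Apple's implementation: the filing gives no thresholds or formulas, so the names `UiSettings` and `ui_for_distance`, the 1-meter and 3-meter limits, and the linear scaling are all assumptions made for the example.

```python
from dataclasses import dataclass

# Hypothetical distance limits in meters; the filing does not specify values.
NEAR_LIMIT_M = 1.0
FAR_LIMIT_M = 3.0


@dataclass(frozen=True)
class UiSettings:
    text_scale: float  # multiplier applied to the base text size
    input_mode: str    # "mouse" up close, "gesture" from afar
    brightness: float  # 0.0 (dark) to 1.0 (full brightness)


def ui_for_distance(distance_m: float) -> UiSettings:
    """Return illustrative UI settings for a user standing distance_m away."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")

    # Clamp the distance to the [near, far] range, then map it to 0..1.
    clamped = min(max(distance_m, NEAR_LIMIT_M), FAR_LIMIT_M)
    t = (clamped - NEAR_LIMIT_M) / (FAR_LIMIT_M - NEAR_LIMIT_M)

    return UiSettings(
        # Assumed scaling: normal size up close, double size at the far limit.
        text_scale=1.0 + t,
        # Assumed rule: mouse input within the near limit, gestures beyond it.
        input_mode="mouse" if distance_m <= NEAR_LIMIT_M else "gesture",
        # Assumed scaling: 60% brightness up close, 100% at the far limit.
        brightness=0.6 + 0.4 * t,
    )
```

Under these assumed values, a user half a meter from the screen would get normal-sized text and mouse input, while a user four meters away would get doubled text, gesture input and full brightness. A real system would presumably smooth the transitions so the interface does not flicker when a user hovers near a threshold.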

Apple's concept is in some ways a more advanced and interactive version of the "parallax effect" the company introduced with its iOS 7 mobile operating system upgrade in 2013. That feature uses the motion sensors in an iPhone or iPad to make background wallpapers move, giving users the illusion that their device is a sort of "window" into a virtual three-dimensional world.

The concepts presented in Apple's filing are not entirely new, though they do suggest that Apple could be interested in offering three-dimensional input and interactivity in future devices. Head tracking, motion gestures and user identification already exist in a number of products on the market, most notably Microsoft's Kinect for its Xbox gaming platform.

The application disclosed this week was filed with the USPTO in August of 2013, and is a continuation of a filing from 2008. It is credited to inventors Aleksandar Pance, David R. Falkenberg, and Jason M. Medeiros.