Apple continues to pursue in-air 3D gesture control technology, and on Monday was granted a patent for a machine vision system capable of recognizing human hand gestures with uncanny accuracy.

Source: USPTO

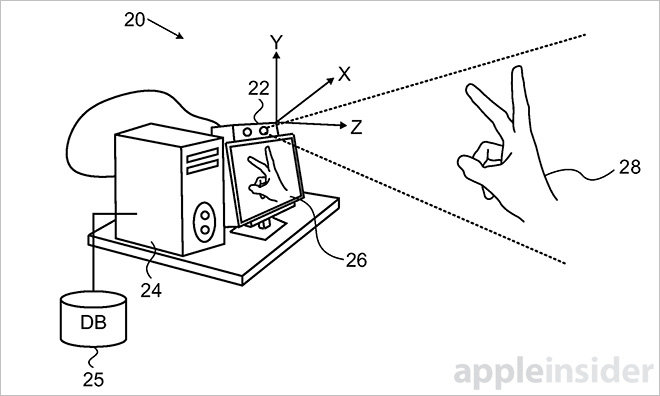

The U.S. Patent and Trademark Office granted Apple U.S. Patent No. 9,002,099 for "Learning-based estimation of hand and finger pose," an invention describing methods by which an optical 3D mapping system can discern a user's hand gesture more accurately by applying specialized learning algorithms.

Using Apple's newly patented technology, 3D mapping hardware would be able to distinguish a peace sign from a clenched fist, even if part of a user's hand is blocked by a foreign object. For applications like in-air gesture control, accuracy allows for more granular pose processing, which in turn opens the door to an expansive library of control schemes.

The patent relies on 3D imaging hardware capable of creating depth maps of a scene with fairly high precision. By processing these maps, isolating hand landmarks, reconstructing a digital facsimile of the hand's skeleton and working out where the landmarks sit relative to one another, an exemplary system can accurately determine hand poses.

Apple already owns patents related to depth mapping, including IP acquired through a 2013 purchase of PrimeSense, an Israeli company known for motion sensing and 3D scanning hardware and middleware. PrimeSense technology was behind Microsoft's Kinect sensor for Xbox 360, which lets gamers control their machine via hand and body gestures.

The first PrimeSense patent reassignment to cross the USPTO's desk came last year for a projection-based 3D mapping solution that would serve as a solid backbone for today's invention.

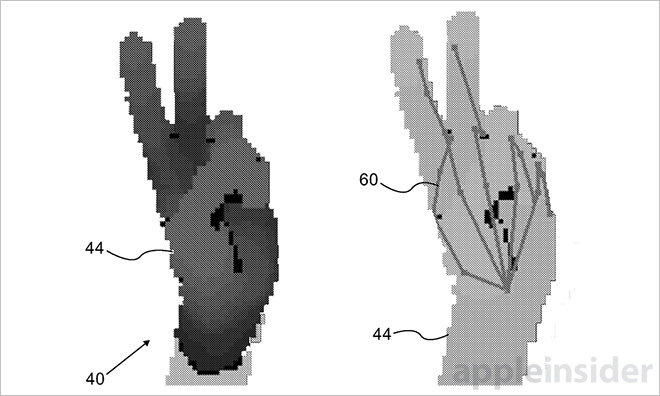

A typical recognition system needs at least a few landmark features to begin estimating a user's hand pose, or gesture. These landmarks usually come from a group that includes fingertips, joints, the palm and the base of the hand. Depth data relating to these "patches" are compiled into bins for later retrieval by a learning engine. As a sequence of depth maps is fed to a processing unit, the system compares patch descriptors against a database of known kinematics to analyze hand or finger placement, trajectory, spatial relation and other metrics.
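For readers who want a feel for how binned patch descriptors might work, here is a minimal Python sketch. It is an illustration under stated assumptions, not the method claimed in the patent: the function names `patch_descriptor` and `nearest_pose`, the 2x2 grid of bins, the use of average depth relative to the patch center, and the nearest-neighbor lookup are all hypothetical choices made for this example.

```python
from typing import List, Sequence, Tuple

DepthMap = List[List[float]]


def patch_descriptor(depth: DepthMap, row: int, col: int,
                     half: int = 2, bins: int = 2) -> List[float]:
    """Summarize a square patch as a bins x bins grid of depth values.

    Each bin holds the mean depth of its pixels minus the depth at
    (row, col). The patch spans rows row-half .. row+half-1 and the
    same range of columns (an assumption made for this sketch).
    """
    center = depth[row][col]
    cell = (2 * half) // bins  # pixels per bin along each axis
    descriptor = []
    for by in range(bins):
        for bx in range(bins):
            total = 0.0
            for dy in range(cell):
                for dx in range(cell):
                    r = row - half + by * cell + dy
                    c = col - half + bx * cell + dx
                    total += depth[r][c] - center
            descriptor.append(total / (cell * cell))
    return descriptor


def nearest_pose(descriptor: Sequence[float],
                 database: Sequence[Tuple[str, Sequence[float]]]) -> str:
    """Return the label of the stored descriptor closest to the query."""
    def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(database, key=lambda entry: squared_distance(descriptor, entry[1]))[0]


# Toy 4x4 depth map and a two-entry database with made-up values.
depth_map = [
    [1.0, 1.0, 2.0, 2.0],
    [1.0, 1.0, 2.0, 2.0],
    [3.0, 3.0, 4.0, 4.0],
    [3.0, 3.0, 4.0, 4.0],
]
known_poses = [
    ("fist", [0.0, 0.0, 0.0, 0.0]),
    ("peace sign", [-3.0, -2.0, -1.0, 0.5]),
]
query = patch_descriptor(depth_map, 2, 2)
best_match = nearest_pose(query, known_poses)
```

A real system would presumably compute many such descriptors per frame and combine their results, but the toy version shows the basic idea of reducing raw depth data to a compact vector that can be compared against stored examples.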

Importantly, Apple's patent takes partial hand occlusion into account. If a 3D mapping device is unable to see a user's hand, it follows that posture can't reasonably be determined. If, however, a user's hand is only partially hidden from view, perhaps by the user's other hand or another person, a reasonable estimation of landmarks may still be possible.

By ignoring patches of an image that contain occluded features, or alternatively including patches with occluded landmarks but ignoring occluded bins with hidden features, an accurate descriptor of a given scene can be obtained. Applying known distances and kinematics stored in a database, as well as adding to said database over time, results in reliable hand motion and positioning data.
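The second option described above, keeping a patch but skipping its occluded bins, can be sketched in a few lines of Python. This is a hedged illustration only: the function name `masked_distance`, the `visible` flags, the `min_visible` threshold and the mean squared difference are assumptions chosen for the example and are not taken from the patent text.

```python
from typing import Optional, Sequence


def masked_distance(query: Sequence[float],
                    reference: Sequence[float],
                    visible: Sequence[bool],
                    min_visible: int = 2) -> Optional[float]:
    """Compare two descriptors using only the bins marked as visible.

    Returns the mean squared difference over visible bins, or None when
    fewer than min_visible bins can be seen, in which case the patch is
    treated as too occluded to use.
    """
    squared = [(q - r) ** 2
               for q, r, v in zip(query, reference, visible) if v]
    if len(squared) < min_visible:
        return None
    return sum(squared) / len(squared)


# Made-up values: the last two bins of the query are corrupted by an
# occluding object, so their depth readings do not belong to the hand.
stored = [-3.0, -2.0, -1.0, 0.0]
observed = [-3.0, -2.0, 9.0, 9.0]

ignoring_occlusion = masked_distance(observed, stored, [True, True, True, True])
with_mask = masked_distance(observed, stored, [True, True, False, False])
too_hidden = masked_distance(observed, stored, [True, False, False, False])
```

In this toy case the unmasked comparison is thrown off by the occluded bins, while the masked comparison reports a perfect match on the bins that are actually visible. Dividing by the number of visible bins keeps scores comparable between patches with different amounts of occlusion.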

The remainder of the patent goes into more detail regarding specialized estimation algorithms, degree of confidence calculations, weighting formulas and more.

Apple's hand and finger pose patent was first filed in March 2013 and credits Shai Litvak, Leonid Brailovsky and Tomer Yanir as its inventors.