According to a filing with the U.S. Patent and Trademark Office, Apple is investigating an advanced tablet stylus design capable of simulating the texture of onscreen graphics as it moves across a display surface.

Source: USPTO

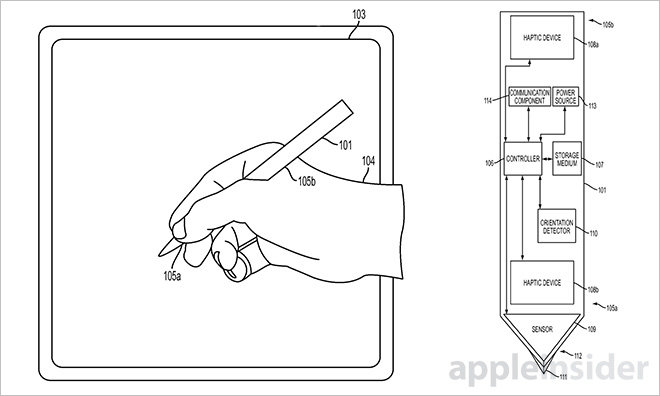

Apple's patent application for a "Touch implement with haptic feedback for simulating surface texture" details a stylus input device with onboard electronics that enable it to sense contact with a touchscreen, gather information about a displayed texture and output vibratory feedback corresponding to said texture.

In some embodiments, the stylus contains contact sensors to determine when the device touches down on a target surface, while other implementations rely on capacitive sensors, pressure sensors and cameras. Sensors like photodiodes are also used to determine textures depicted onscreen, such as wood, paper, glass and more.

Alternatively, texture types may be communicated to the stylus from a host device through any suitable means, such as Bluetooth or Wi-Fi.

Once a texture is ascertained, the touch implement activates a haptic feedback mechanism to relay corresponding vibrations, auditory cues or other signals to the user. Feedback profiles vary depending on texture, meaning rougher depicted textures trigger more dramatic vibrations, while smooth surfaces like glass produce little to no feedback.

Apple proposes a fairly granular experience in which feedback profiles dynamically change as a stylus moves over different portions of a screen's surface. For example, a user would perceive differing levels of feedback as they wrote across a scene depicting wood, parchment and glass. Further, the system is able to adjust haptic output as it senses changes in writing pressure, angle or orientation.
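To make the idea concrete, the texture-to-feedback mapping described above could be sketched roughly as follows. This is an illustration only, not Apple's implementation: the roughness values, the `StylusSample` type and the `vibration_amplitude` function are all hypothetical, and the filing does not specify how texture and pressure are combined.

```python
from dataclasses import dataclass

# Hypothetical roughness values in [0, 1]; the filing gives no numbers.
TEXTURE_ROUGHNESS = {
    "glass": 0.0,      # smooth: little to no feedback
    "paper": 0.3,
    "parchment": 0.4,
    "wood": 0.8,       # rough: stronger vibration
}


@dataclass
class StylusSample:
    """One reading from the stylus (hypothetical structure)."""
    texture: str     # texture depicted under the tip
    pressure: float  # normalized writing pressure, 0.0 to 1.0


def vibration_amplitude(sample: StylusSample) -> float:
    """Return a vibration strength in [0, 1] for one sample.

    Assumption: amplitude is roughness scaled by clamped pressure.
    Unknown textures are treated as smooth.
    """
    roughness = TEXTURE_ROUGHNESS.get(sample.texture, 0.0)
    pressure = min(max(sample.pressure, 0.0), 1.0)
    return roughness * pressure


# A stroke crossing wood, parchment and glass at constant pressure.
stroke = [
    StylusSample("wood", 0.5),
    StylusSample("parchment", 0.5),
    StylusSample("glass", 0.5),
]
amplitudes = [vibration_amplitude(s) for s in stroke]
```

In this sketch the feedback level drops as the tip moves from wood to parchment to glass, and pressing harder on the same texture raises it. Angle and orientation, which the filing also mentions, are left out for brevity.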

In a related patent also published on Thursday, Apple outlines a stylus input that works somewhat in reverse to the method described above. Instead of simulating sensed texture through vibratory feedback, the implement uses a camera module in its tip to decipher and record physical characteristics of virtually any surface or object and reproduce those properties visually on a computing device.

In some embodiments, light bounced off an object passes through the clear tip or lens and is gathered by an embedded photo sensor. Image data is sent to a connected device and processed to reproduce three-dimensional renderings that include image textures, shapes and colors. Aside from exact object reproduction, the method is ideal for capturing textures and mapping them to graphical tools in illustration, photo editing or CAD software.

Rumors suggest Apple is preparing to debut a branded stylus when it unveils a larger 12.9-inch iPad model widely expected to launch some time this year. Noted KGI analyst Ming-Chi Kuo believes the initial release will be a simple model without communications capabilities or onboard sensors, with more advanced features coming in subsequent generations.

Apple's patent application for a stylus with haptic feedback was first filed in January 2014 and credits Jason Lor, Patrick A. Carroll and Dustin J. Verhoeve as its inventors. The company's texture-reading patent application cites the same inventors, along with Glen A. Rhodes.