Apple on Tuesday was granted a patent detailing a method of device localization, or mapping, using computer vision and inertial measurement sensors. It is one of the first inventions to be reassigned to Apple following this year's acquisition of augmented reality startup Flyby Media.

The patent, published by the U.S. Patent and Trademark Office as "Visual-based inertial navigation," allows a consumer device to locate itself in three-dimensional space using camera imagery and sensor data.

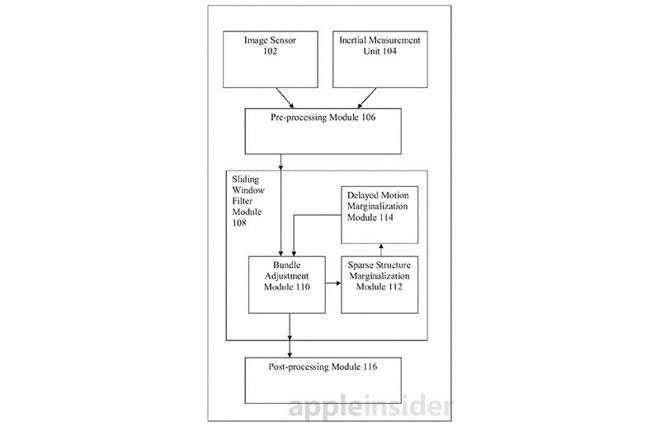

Specifically, the system marries images from one or more onboard cameras with corresponding data from gyroscope and accelerometer hardware, among other sensors, to determine a device's position at a given point in time. Subsequent images and measurements are then compared against one another to track the device's position and orientation in real time.
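For readers curious how camera and inertial data can be combined, the sketch below is a deliberately simplified, one-dimensional Kalman filter: accelerometer readings predict how far the device has moved, and occasional camera-derived position fixes correct the drift that builds up. This is a generic textbook technique, not Apple's SWF, and the sensor rates, noise levels and function names (`predict`, `update`, `run_demo`) are illustrative assumptions.

```python
import numpy as np

def predict(x, P, accel, dt, accel_noise):
    """Inertial step: propagate [position, velocity] with one accelerometer reading."""
    F = np.array([[1.0, dt], [0.0, 1.0]])   # constant-acceleration motion model
    B = np.array([0.5 * dt**2, dt])         # how acceleration enters position and velocity
    x = F @ x + B * accel
    P = F @ P @ F.T + np.outer(B, B) * accel_noise**2  # accelerometer noise grows uncertainty
    return x, P

def update(x, P, visual_pos, visual_noise):
    """Visual step: correct the state with a camera-derived position fix."""
    H = np.array([[1.0, 0.0]])              # the camera observes position only
    S = H @ P @ H.T + visual_noise**2       # innovation covariance (1x1)
    K = P @ H.T / S                         # Kalman gain (2x1)
    x = x + K[:, 0] * (visual_pos - x[0])   # shift position and velocity by the weighted error
    P = (np.eye(2) - K @ H) @ P
    return x, P

def run_demo(seed=0, accel=0.5, dt=0.01, steps=200):
    """Simulate a device accelerating from rest: IMU at 100 Hz, camera at 10 Hz."""
    rng = np.random.default_rng(seed)
    x, P = np.zeros(2), np.eye(2) * 0.01
    for k in range(steps):
        measured_accel = accel + rng.normal(0.0, 0.05)   # noisy accelerometer sample
        x, P = predict(x, P, measured_accel, dt, accel_noise=0.05)
        if (k + 1) % 10 == 0:                            # a camera fix every 10th IMU sample
            t = (k + 1) * dt
            true_pos = 0.5 * accel * t**2
            x, P = update(x, P, true_pos + rng.normal(0.0, 0.02), visual_noise=0.02)
    true_final = 0.5 * accel * (steps * dt) ** 2
    return x[0], true_final

estimate, truth = run_demo()
print(f"estimated {estimate:.3f} m, true {truth:.3f} m")
```

Without the `update` calls, accelerometer noise would be integrated twice and the position error would keep growing; the camera fixes are what keep it bounded.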

As noted in the patent, visual-based inertial navigation systems do not require GPS signals or cell towers and can achieve accuracy down to a few centimeters. However, tracking past variables typically requires images and sensor measurements to be stored for later processing, and the resulting computational overhead is ill-suited to mobile hardware.
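A rough back-of-the-envelope calculation shows why keeping every past measurement becomes expensive. This sketch assumes a 15-number state per camera frame (position, velocity, orientation and two sets of sensor biases, a common choice in the research literature but not a figure from the patent), 30 frames per second and a dense matrix. Real solvers exploit sparsity, so the full-history number is an upper bound.

```python
BYTES_PER_FLOAT64 = 8

def dense_matrix_bytes(num_frames: int, state_dim: int = 15) -> int:
    """Memory for a dense (state_dim * num_frames) square matrix of float64 values."""
    n = state_dim * num_frames
    return n * n * BYTES_PER_FLOAT64

full_history = dense_matrix_bytes(30 * 60)   # every frame from 60 s of video at 30 fps
window_only = dense_matrix_bytes(10)         # a hypothetical 10-frame window
print(f"full history: {full_history / 1e9:.1f} GB")   # full history: 5.8 GB
print(f"10-frame window: {window_only / 1e3:.0f} kB")  # 10-frame window: 180 kB
```

Doubling the recording to two minutes quadruples the full-history figure, while the windowed figure stays fixed no matter how long the device keeps running.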

To address this, Apple's invention implements a sliding window inverse filter (SWF) module designed to minimize computational load. The technology first processes information from overlapping windows of images as a device camera captures them, then tracks features across those images, along with corresponding sensor data, to estimate the device's state.
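The overlapping windows described above can be illustrated with a short generator that groups frames as they arrive. The window size and stride are hypothetical parameters; the patent, as described here, does not specify them.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, List, TypeVar

T = TypeVar("T")

def overlapping_windows(frames: Iterable[T], size: int, stride: int) -> Iterator[List[T]]:
    """Yield each window of `size` frames, then advance by `stride` new frames."""
    if not 0 < stride < size:
        raise ValueError("need 0 < stride < size so consecutive windows overlap")
    buffer: Deque[T] = deque(maxlen=size)   # oldest frames fall off automatically
    new_frames = 0                          # frames received since the last window
    for frame in frames:
        buffer.append(frame)
        new_frames += 1
        if len(buffer) == size and new_frames >= stride:
            yield list(buffer)
            new_frames = 0

# Frames 0-6 arriving from a camera, in windows of 4 that share 2 frames each.
print(list(overlapping_windows(range(7), size=4, stride=2)))
# [[0, 1, 2, 3], [2, 3, 4, 5]]
```

Frame 6 is held in the buffer until enough new frames arrive to complete the next window, so memory use never exceeds `size` frames.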

Next, the SWF estimates the position and orientation of objects near the device. Estimates for the device state and nearby objects are then calculated for each image in a series, or window, of images. Finally, the SWF summarizes each window, condensing the estimates into information about the device at a single point in time. The summary of one window can then be applied to the processing steps of a second, overlapping window.
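The "summarize and carry forward" step resembles what robotics researchers call marginalization. The toy example below assumes a device moving along a single line, with made-up inertial displacement readings and camera position fixes, and folds the oldest state of one window into a prior for the next using the standard Schur complement. It illustrates the general idea only and is not a reconstruction of Apple's method; helper names such as `information_form`, `odo` and `cam` are hypothetical.

```python
import numpy as np

def information_form(states, factors):
    """Build H and b for linear factors z = J @ x + noise over the given state ids."""
    idx = {s: j for j, s in enumerate(states)}   # global state id -> local column
    H, b = np.zeros((len(states), len(states))), np.zeros(len(states))
    for coeffs, z, sigma in factors:
        J = np.zeros(len(states))
        for s, c in coeffs.items():
            J[idx[s]] = c
        w = 1.0 / sigma**2                       # weight = inverse noise variance
        H += w * np.outer(J, J)
        b += w * J * z
    return H, b

def marginalize_first(H, b, k):
    """Fold the first k states into a prior on the rest (Schur complement)."""
    Haa, Har = H[:k, :k], H[:k, k:]
    Hra, Hrr = H[k:, :k], H[k:, k:]
    Haa_inv = np.linalg.inv(Haa)
    return Hrr - Hra @ Haa_inv @ Har, b[k:] - Hra @ Haa_inv @ b[:k]

SIG_PRIOR, SIG_IMU, SIG_CAM = 0.01, 0.05, 0.1    # assumed noise levels in metres

def odo(i, d):   # inertial displacement measured between states i and i + 1
    return ({i: -1.0, i + 1: 1.0}, d, SIG_IMU)

def cam(i, p):   # camera-derived absolute position of state i
    return ({i: 1.0}, p, SIG_CAM)

prior = ({0: 1.0}, 0.0, SIG_PRIOR)               # the device starts at the origin
window1_factors = [prior, odo(0, 1.05), odo(1, 0.97), cam(2, 2.10)]
window2_new = [odo(2, 1.02), cam(3, 2.95)]

# Window 1 covers states 0..2; summarize it by marginalizing state 0.
H1, b1 = information_form([0, 1, 2], window1_factors)
H_prior, b_prior = marginalize_first(H1, b1, k=1)

# Window 2 covers states 1..3 and overlaps window 1 on states 1 and 2.
H2, b2 = information_form([1, 2, 3], window2_new)
H2[:2, :2] += H_prior
b2[:2] += b_prior
window2 = np.linalg.solve(H2, b2)

# Reference: one batch solve over every state and every measurement.
Hf, bf = information_form([0, 1, 2, 3], window1_factors + window2_new)
batch = np.linalg.solve(Hf, bf)
print(window2, batch[1:])   # same estimates for states 1..3
```

In this linear case the windowed answer matches the full batch solution, even though window 2 never sees state 0 directly; real visual-inertial problems are nonlinear, so a summary of this kind is generally an approximation there.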

In practice, SWF modules might be used to power augmented reality navigation solutions. For example, SWF position and orientation estimates might be used to label points on a digital map or act as a visual aid for locating items in a retail store. Because the module can retain an object's last known position, it could lead users back to a misplaced set of keys or other important items.
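Remembering where an object was last seen amounts to converting its position relative to the device into map coordinates using the estimated device pose, then storing the result. The sketch below assumes a flat 2D map and a device frame with x pointing forward and y to the left; `Pose2D` and `LastSeenMap` are hypothetical names, not part of any Apple API.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

@dataclass
class Pose2D:
    x: float        # metres, map frame
    y: float        # metres, map frame
    heading: float  # radians, counter-clockwise from the map's x-axis

def device_to_map(pose: Pose2D, forward: float, left: float) -> Tuple[float, float]:
    """Rotate an offset seen in the device frame by the heading, then translate."""
    c, s = math.cos(pose.heading), math.sin(pose.heading)
    return (pose.x + c * forward - s * left, pose.y + s * forward + c * left)

class LastSeenMap:
    """Hypothetical store of each object's last known map position."""

    def __init__(self) -> None:
        self._last: Dict[str, Tuple[float, float, float]] = {}  # label -> (x, y, time)

    def observe(self, label: str, pose: Pose2D, forward: float, left: float, t: float) -> None:
        x, y = device_to_map(pose, forward, left)
        self._last[label] = (x, y, t)       # newer sightings overwrite older ones

    def where_is(self, label: str) -> Optional[Tuple[float, float, float]]:
        return self._last.get(label)

    def distance_from(self, label: str, pose: Pose2D) -> Optional[float]:
        seen = self.where_is(label)
        if seen is None:
            return None
        return math.hypot(seen[0] - pose.x, seen[1] - pose.y)

# Keys spotted 1 m ahead while standing at (2, 3) and facing along +y.
objects = LastSeenMap()
objects.observe("keys", Pose2D(2.0, 3.0, math.pi / 2), forward=1.0, left=0.0, t=10.0)
print(objects.where_is("keys"))                              # approximately (2.0, 4.0, 10.0)
print(objects.distance_from("keys", Pose2D(5.0, 8.0, 0.0)))  # 5.0
```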

One potential implementation incorporates depth sensors to create a 3D map of a given environment, while another integrates a device's wireless radios to mark signal strength as a user walks through a building.
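The signal-strength idea can be sketched as binning position-tagged readings into a grid and averaging each cell. The cell size and sample values are made up, and averaging dBm values directly is a simplification (averaging in linear milliwatts would weight strong readings differently).

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def signal_map(samples: Iterable[Tuple[float, float, float]],
               cell_size: float = 1.0) -> Dict[Tuple[int, int], float]:
    """Average (x, y, rssi_dbm) samples into square grid cells of `cell_size` metres."""
    sums: Dict[Tuple[int, int], float] = defaultdict(float)
    counts: Dict[Tuple[int, int], int] = defaultdict(int)
    for x, y, rssi in samples:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        sums[cell] += rssi
        counts[cell] += 1
    return {cell: sums[cell] / counts[cell] for cell in sums}

# Positions would come from the visual-inertial estimate; readings are made up.
walk = [(0.2, 0.3, -40.0), (0.8, 0.1, -50.0), (1.5, 0.5, -70.0)]
print(signal_map(walk))   # {(0, 0): -45.0, (1, 0): -70.0}
```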

It is unclear if Apple has plans to integrate SWF technology into an upcoming product, but the company is said to be hard at work on augmented and virtual reality solutions.

Apple's visual-based inertial navigation patent was first filed in 2013 and credits former Flyby Media employees Alex Flint, Oleg Naroditsky, Christopher P. Broaddus, Andriy Grygorenko and Oriel Bergig, as well as University of Minnesota professor Stergios Roumeliotis, as its inventors.

Earlier this year, reports claimed Apple had purchased Flyby Media, an augmented reality startup linked to Google's Project Tango. Of the inventors named in today's patent, Naroditsky and Grygorenko now work at Apple.