Google search is developing a credibility problem that could be blamed on artificial intelligence. In a statement, the company said its algorithms for generating "featured snippet" answers can cause "instances when we feature a site with inappropriate or misleading content," resulting in false answers. However, Google is also the source--and funding--behind much of the fake news on the Internet, thanks to a similar lack of curation for YouTube.

Google's old results here haven't been accurate for months

False results from outdated news sources

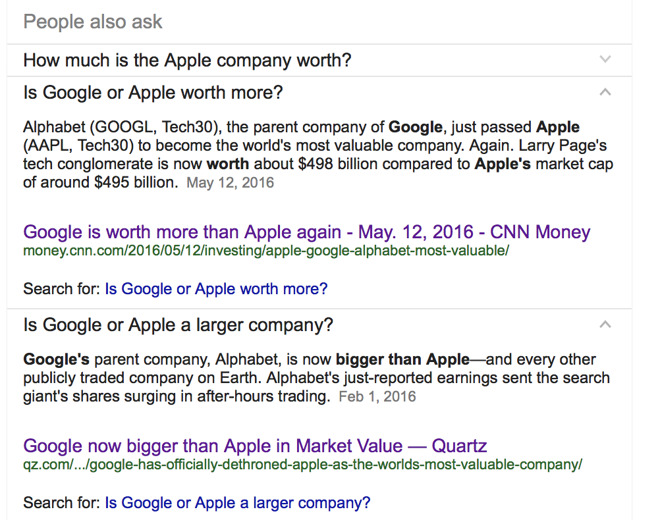

Sometimes Google's suggested search answers, called "featured snippets," are simply outdated, presenting false answers based on old headlines. At the end of February, AppleInsider noted that Google was still providing year-old stock market information that confidently, but falsely, claimed Google's parent company was worth more than Apple.

That hasn't been true in months. Since we published the story, Google has removed its "snippet" answer (above) but still returns old, incorrect links from last summer as more relevant links than the new story with accurate information. Further, it still populates a "people also ask" question panel with multiple wrong answers (below).

False results from fake news sources

Even worse, Danny Sullivan, editor of SearchEngineLand, noted in a Tweet that Google is also drawing "snippet" answers from non-credible sources. "If you're wondering if Obama is planning a coup, Google's 'one true answer' is yes. FFS," Sullivan stated.

If you're wondering if Obama is planning a coup, Google's "one true answer" is yes. FFS. pic.twitter.com/pFr7omJttU

-- Danny Sullivan (@dannysullivan) March 5, 2017

A report by recode pointed out that the false information is also read off by Google's Alexa-like Home appliance. It noted that "unlike a normal page of search results, Google Home doesn't tell you where its answer came from or give you the option to see other answers."

BBC tech correspondent Rory Cellan-Jones demonstrated Google Home cheerfully recounting that former U.S. President Obama "may be planning a communist coup d'etat at the end of his term in 2016!"

And here's what happens if you ask Google Home "is Obama planning a coup?" pic.twitter.com/MzmZqGOOal

-- Rory Cellan-Jones (@ruskin147) March 5, 2017

Amazon's Alexa, recode pointed out, didn't return similar false results, instead saying "I can't find the answer to the question I heard."

Google responded to recode in a statement that said, "when we are alerted to a Featured Snippet that violates our policies, we work quickly to remove them, which we have done in this instance. We apologize for any offense this may have caused."

False information cultivated and monetized with corporate advertising

However, the false video behind the "answer," titled "Obama's Coming Communist Coup D'état in 2016," was actually published on Google's own YouTube service back in 2014 by a user named "Western Center for Journalism."

Google just took money from IBM to sponsor this old fake news YouTube video

A report by BuzzFeed recently drew attention to YouTube's role as a sewage pipe delivering fake news and hate speech.

Unlike Facebook and Twitter, which have been frequently criticized for spreading false stories and conspiracy theories, YouTube generally gets a pass from the media. That may be related to the fact that Google's ads monetize most news websites, and web publishers are largely dependent upon Google search for sending them traffic.

The report by BuzzFeed profiled one YouTube user, David Seaman, as sending a daily stream of videos to 150,000 subscribers, monetized by Google ads from "major brands like Quaker Oats and Uber."

Seaman's videos frequently involve false stories of rape and pedophilia related to a Washington D.C. pizzeria, which stoked outrage among uneducated Americans and inflamed one angry YouTube watcher to the point of showing up at the slandered restaurant with a gun.

Other fake news YouTube segments include deceptively edited videos vilifying immigrants in Europe, including a low budget, false report set in Sweden, published on YouTube as a viral video clip named "Stockholm Syndrome."

That fake news YouTube video was shown on a Fox and Friends segment on TV, and was subsequently referred to by a disturbed President Trump at a rally, where he spoke of "what's happening last night in Sweden" as if speaking about a terrorist attack. The real issue was a gullible viewer of advanced age confused about the nature of fake news content on YouTube, and the willingness of Fox News and Google to lend it credibility for revenue.

Beyond the president of the United States, hundreds of thousands of other YouTube viewers are regularly feeding on subjects ranging from "PizzaGate" child rape fantasy to Holocaust denial to Muslim invasion via immigration. In some high profile cases, such as PewDiePie, Google determines that videos may not be "advertiser friendly" and "demonetizes" them.

Overall, much of Google's featured content not only veers into fake news and conspiracy theory, but actively pushes viewers "down the rabbit hole" by showing them more of whatever false information they wander into, thanks to algorithms that recommend related content without any actual human curation, a growing issue for advertisers.

Google's management of YouTube is similar to that of its Google Play store for Android, where fake content dilutes the value and visibility of legitimate content, and copyright theft is emboldened by Google's sponsorship through ad monetization.