Apple in a patent application published Thursday details a method of integrating an intelligent virtual assistant -- Siri -- into a messaging environment, allowing users to participate in text-based exchanges with the AI, while opening up automation and helper tools to traditional Messages conversations with friends.

Source: USPTO

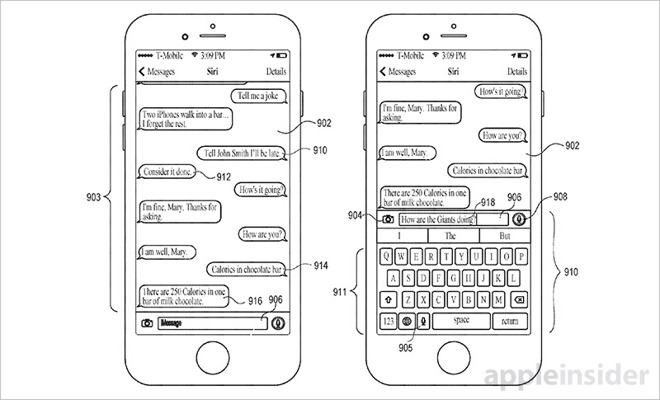

As published by the U.S. Patent and Trademark Office, Apple's patent application for an "Intelligent automated assistant in a messaging environment" describes a method of meshing Siri with a graphical user interface designed for text messaging. Importantly, the integration would give Siri the ability not only to converse with a single user but also to act as a third party in group chats.

The proposed system appears much more advanced than current Siri implementations that rely largely on speech recognition. As detailed in today's document, Siri integration would enable users to "talk" to the virtual assistant using any form of communication supported by Messages, which at this time includes text, audio, images and video.

Currently, Siri accepts voice commands via device microphones in a dedicated Siri UI. While the assistant can parse natural language input with a high degree of accuracy, mistakes do occur. In such cases, users can modify their query by tapping or selecting confirmation text and typing in the correct information. Siri also lacks the ability to process images in its current form.

As noted in the patent application, moving Siri to a messaging environment opens the door to new types of interaction. For example, a text-based UI is preferable in noisy environments, or in quiet locations where audio interactions are not desired, like a library or movie theater.

Since Messages, and other messaging apps, present information in a chronological format, users would be able to review previous Siri interactions as traditional text histories. Additionally, Siri can reference available chat histories for contextual clues as it attempts to answer user queries. Currently, the Siri UI deletes a query thread once a session is complete.

As with existing implementations, contextual information can go beyond conversation history. For example, device location can be leveraged to answer a question like, "How did the Giants do?" A generated task flow first detects user intent, which in this case is an inquiry into a sports score. Depending on the location of the device, the time and the team schedule, Siri might return a score for the San Francisco Giants or the New York Giants.

Subsequent messages can include links to internet websites relating to the sports score, or internal links to other apps.

Interestingly, the document mentions image and video recognition as viable use cases for a Messages-based Siri. The patent application cites an image of a Volkswagen Beetle, which a user might "send" to Siri as part of a query. In one example, a user asks Siri to gather pricing information, prompting the virtual assistant to analyze the image for identifying characteristics, search the web for current prices and return an answer in the form of a text.

Other examples of image-related queries include "Where is this?", "What insect is this?" and "Which company uses this logo?" In some cases, video can be processed by recognizing sounds within a given clip, the same method the document describes for parsing audio queries.

With image and audio recognition capabilities enabled, Siri can also "remember" user preferences. For example, if a user sends a picture of a bottle of wine and says, "I like this wine," Siri will make a note for later reference. In the same vein, users can enter blocks of text for storage, such as contact information, which the virtual assistant can file away for later retrieval.

Perhaps most intriguing are multi-user conversations in which Siri plays the role of an active participant. Inviting Siri to join a chat, as one would add a third person in Messages, allows the AI to offer its services to both human users. For example, a first user might ask about nearby Chinese restaurants, and Siri would respond with a list of appropriate venues. A second user might then narrow the list to cheaper eateries and, once the pair settles on a restaurant, create a calendar entry stored on both users' devices.

Going further, Siri can later remind each person of the upcoming meeting and suggest transportation options. In examples offered in today's document, each Siri assistant has its own identity. For instance, when reminding a user of a calendar invite, Siri might send a message to a first user saying, "Don't forget about dinner at 7 p.m.," while sending another message to a second user saying, "John Smith's Siri reminds you about dinner tonight at 7 p.m."

In theory, Siri could become an integral third party for Messages chats.

Apple's plans for the invention are unclear at this time. An unverified rumor surfaced in March suggesting Messages in the next-generation "iOS 11" operating system will support some form of Siri integration, though the source report read like speculation.

Thought to be based on recent patent filings, the supposed feature would allow Siri to eavesdrop on Messages conversations in order to provide a device owner with useful information. For example, when a user discusses eating a meal with friends, Siri might pop in and suggest nearby restaurants, offer to make reservations or arrange for an Uber. Such capabilities are mentioned in today's patent filing.

Integrating AI assistants into messaging utilities is not unprecedented. Google last year launched the Allo messaging app with Google Assistant integration, granting users in-app access to what boils down to an advanced chat bot. Similarly, popular workplace messaging platform Slack features its own -- less advanced -- chat bot called Slackbot, which can answer common questions and perform rudimentary tasks.

Apple's patent application detailing Siri integration for Messages was first filed in May 2016 and credits Petr Karashchuk, Tomas A. Vega Galvez and Thomas R. Gruber as its inventors.