Apple's latest contribution to its machine learning blog is a dive into how the software behind the "Hey Siri" command works, and how the company uses a neural network to analyze the acoustic pattern of a voice and distinguish it from background noise.

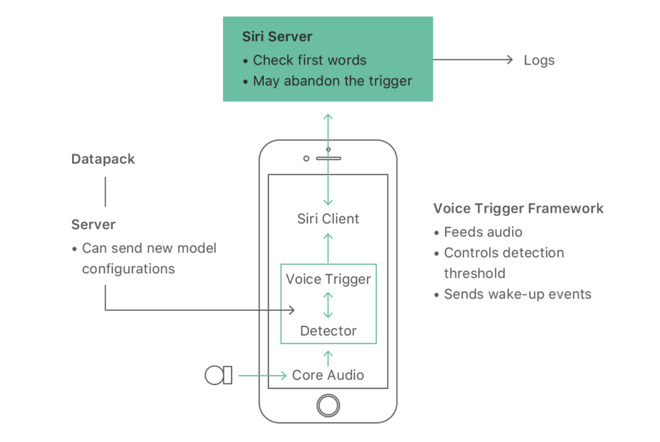

The new article published on Wednesday mostly concentrates on the part of Siri that runs directly on an iPhone or Apple Watch. In particular, it focuses on the detector -- a specialized speech recognizer which is always listening just for its wake-up phrase, but has to deal with other noises as well.

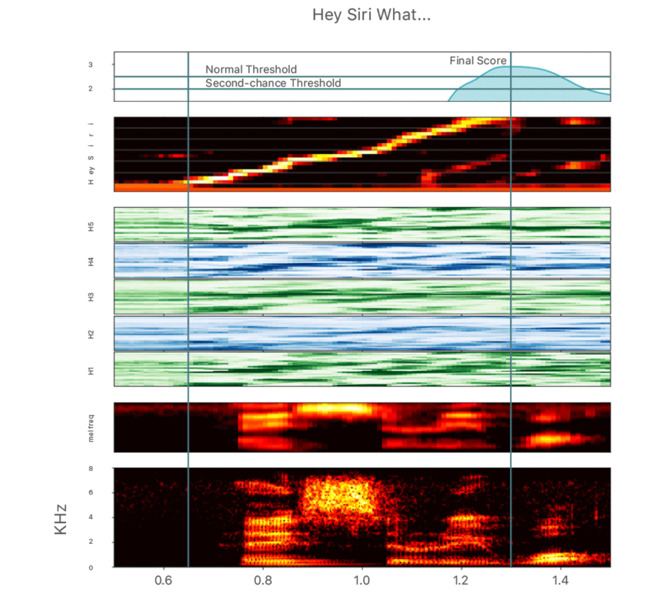

Apple notes that the hardware in an iPhone or Apple Watch turns your voice into a stream of instantaneous waveform samples, at a rate of 16,000 per second. About 0.2 seconds of audio at a time is fed to a "Deep Neural Network," which classifies what it is listening to and passes the likelihood that it is hearing the activation phrase to the rest of the operating system.
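To put those figures in concrete terms, the short Python sketch below works out how many samples make up one 0.2-second window at 16,000 samples per second, and slides that window across a buffer. It is only an illustration: the 0.01-second hop between windows and the function names are assumptions made for the example, not details taken from Apple's description.

```python
SAMPLE_RATE_HZ = 16_000   # samples per second, as stated in the article
WINDOW_SECONDS = 0.2      # "about 0.2 seconds" of audio per window
HOP_SECONDS = 0.01        # assumption: how far the window advances each step


def samples_for(seconds, sample_rate_hz=SAMPLE_RATE_HZ):
    """Convert a duration in seconds to a whole number of samples."""
    return round(seconds * sample_rate_hz)


def sliding_windows(samples, window_len, hop_len):
    """Yield successive overlapping windows from a sequence of samples."""
    for start in range(0, len(samples) - window_len + 1, hop_len):
        yield samples[start:start + window_len]


WINDOW_LEN = samples_for(WINDOW_SECONDS)  # 0.2 s * 16,000 = 3,200 samples
HOP_LEN = samples_for(HOP_SECONDS)        # 0.01 s * 16,000 = 160 samples

# One second of silence stands in for real microphone input.
one_second = [0] * SAMPLE_RATE_HZ
windows = list(sliding_windows(one_second, WINDOW_LEN, HOP_LEN))
```

Each window of 3,200 samples is what a classifier would score in this simplified picture; a real detector would convert the raw samples into acoustic features first.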

Apple has set several thresholds for sensitivity. If a score falls in a middle range, too low to trigger Siri outright but too high to dismiss, the software listens more sensitively for a few seconds so that a repeat of the phrase is not missed again.
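One plausible reading of that threshold behavior can be sketched in a few lines of Python. The class name, the threshold values (0.5 and 0.8) and the three-second window are all hypothetical, chosen only to make the logic visible; Apple's actual values and implementation are not given here.

```python
from dataclasses import dataclass


@dataclass
class TwoThresholdDetector:
    """Illustrative two-threshold trigger; all numbers are hypothetical."""
    lower: float = 0.5              # assumed lower threshold
    upper: float = 0.8              # assumed upper threshold
    sensitive_seconds: float = 3.0  # assumed length of "a few seconds"
    _sensitive_until: float = float("-inf")

    def observe(self, score: float, now: float) -> bool:
        """Return True if this score should wake the assistant."""
        in_sensitive_window = now < self._sensitive_until
        # While sensitive, the lower threshold is enough to trigger.
        threshold = self.lower if in_sensitive_window else self.upper
        if score >= threshold:
            self._sensitive_until = float("-inf")  # reset after a trigger
            return True
        if score >= self.lower:
            # Borderline score: listen more sensitively for a short while.
            self._sensitive_until = now + self.sensitive_seconds
        return False


detector = TwoThresholdDetector()
first_try = detector.observe(score=0.6, now=0.0)   # borderline, no trigger
second_try = detector.observe(score=0.6, now=1.5)  # repeat inside the window
```

In this sketch a borderline first attempt does not wake the device, but the same score a moment later does, which matches the "second chance" behavior described above.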

After the initial activation, the waveform arrives at the Siri server. If the main speech recognizer hears it as something other than "Hey Siri" -- for example "Hey Seriously" -- then the server sends a cancellation signal to the phone to put it back to sleep.

There are language-specific phonetic specifications integrated as well, with Apple noting that words such as "Syria" and "serious" are examined in context with the surrounding phrase.

The Apple Watch presents some special challenges because of its much smaller battery and less powerful processor. To skirt those problems, the Apple Watch's "Hey Siri" detector only runs when the watch's motion coprocessor detects a wrist raise gesture, which turns the screen on.

Apple's Machine Learning Journal went live on July 19, with the first post, "Improving the Realism of Synthetic Images," discussing neural net training with collated images. The first piece used eyes and gazes as an example of a data set where a large array of training information is needed, but the difficulty of collecting said data could stand in the way of efficient machine learning.

Apple is inviting machine learning researchers, students, engineers and developers to contact the company with questions and feedback on the program.

The site appears to be part of Apple's promise to allow researchers to publish what they discover and discuss what they are working on with academia at large. The sea change in Apple's policies was announced in December by Apple Director of Artificial Intelligence Research Russ Salakhutdinov.