Apple on Tuesday was granted a pair of patents that together describe a mobile augmented reality system capable of detecting its surroundings and displaying generated virtual information to users in real time.

Source: USPTO

As published by the U.S. Patent and Trademark Office, Apple's U.S. Patent No. 9,560,273 lays down the hardware framework of an augmented reality device with enhanced computer vision capabilities, while U.S. Patent No. 9,558,581 details a particular method of overlaying virtual information atop a given environment. Together, the hardware and software solutions could provide a glimpse at Apple's AR aspirations.

Both patents were originally filed by German AR specialist Metaio shortly before Apple acquired the firm in 2015. The filings, at the time still pending USPTO patent applications, were assigned to Apple last November.

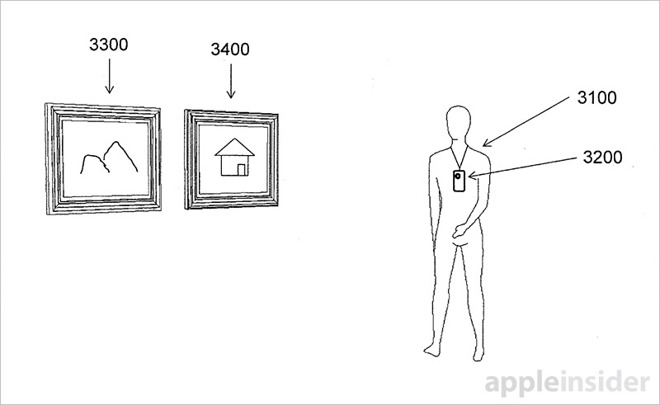

The '273 patent for a "Wearable information system having at least one camera" imagines a device with one or more cameras, a screen, user interface and internal components dedicated to computer vision. While head-mounted displays are mentioned as ideal platforms for the present AR invention, the filing also suggests smartphones can serve as suitable stand-ins.

At its core, the filing deals more with power-efficient object recognition than it does with the visualization of AR data overlays. The former feature is perhaps the greatest technical barrier to wide adoption of AR. In particular, existing image matching solutions like those employed in facial recognition security systems consume a large amount of power and thus see limited real-world use.

Illustration of wearable AR system.

Apple's patent goes further than simple device control, however, as it details a power-efficient method of monitoring a user's environment and providing information about detected objects. For example, the technology might be used as part of a guided tour app, scanning for and providing information about interesting objects as a visitor walks around a museum.

To achieve power specifications suitable for a mobile device, the invention maintains a default low-power scanning mode for a majority of its operating uptime. High-power modes are triggered in short bursts, for example when downloading and displaying AR content or storing new computer vision models into system memory.
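
As a rough illustration of that duty-cycled approach, the sketch below models a device that stays in a low-power mode by default and switches to a high-power mode only for a few heavier tasks. The event names and the `PowerMode` type are hypothetical, invented for this example, and are not taken from the patent.

```python
from enum import Enum
from typing import Sequence


class PowerMode(Enum):
    LOW = "low"    # default background scanning
    HIGH = "high"  # short burst for heavier work


# Hypothetical event names, invented for this illustration.
BURST_EVENTS = {"download_content", "display_content", "store_model"}


def mode_for(event: str) -> PowerMode:
    """Pick the power mode for one event; anything unlisted stays low power."""
    return PowerMode.HIGH if event in BURST_EVENTS else PowerMode.LOW


def high_power_fraction(events: Sequence[str]) -> float:
    """Fraction of events that were handled in high-power mode."""
    if not events:
        return 0.0
    high = sum(1 for e in events if mode_for(e) is PowerMode.HIGH)
    return high / len(events)
```

For a trace of three scans followed by one content download, `high_power_fraction` reports that a quarter of the events required the high-power mode.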

The document dives deep into initialization of optical tracking, or the initial determination of a camera's position and orientation. An integral facet of AR, initialization is considered one of the technology's most difficult hurdles to overcome.

Apple notes the process can be divided into three main building blocks: feature detection (feature extraction), feature description and feature matching. Instead of relying on existing visual computing methods, Apple proposes a new optimized technique that implements dedicated hardware and pre-learned data.

Some embodiments call for an integrated circuit that manages the entire feature recognition and matching process. Further aiding the process is a reliance on intensity images, or images representing different amounts of light reflected from the environment, depth images, or a combination of the two.

Images captured by a user device are cross-referenced against descriptors in an onboard or off-site database, which itself can be constantly updated. Positioning components, onboard motion sensors and other hardware can further narrow down an object's possible identity.
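
The matching step can be sketched in a few lines. The example below assumes binary descriptors compared by Hamming distance, a common technique in computer vision that the patent summary does not confirm; the database contents, the `max_distance` threshold and the `candidates` filter (standing in for narrowing by position or motion data) are all hypothetical.

```python
from typing import Dict, Iterable, Optional


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match(query: int, database: Dict[str, int], max_distance: int = 2,
          candidates: Optional[Iterable[str]] = None) -> Optional[str]:
    """Return the label of the closest stored descriptor, or None.

    `candidates` optionally restricts the search to a subset of labels,
    a stand-in for narrowing the search with position or sensor data.
    """
    labels = database.keys() if candidates is None else candidates
    best_label, best_dist = None, max_distance + 1
    for label in labels:
        dist = hamming(query, database[label])
        if dist < best_dist:  # keep only matches within the threshold
            best_label, best_dist = label, dist
    return best_label


# Hypothetical museum database of 8-bit descriptors.
DATABASE = {"statue": 0b10110010, "painting": 0b01001101}
```

Calling `match(0b10110011, DATABASE)` selects `"statue"`, which differs from the query by a single bit, while restricting `candidates` to `["painting"]` yields no match at all.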

Interestingly, the invention notes depth images, which result in better object determination, require a two-camera setup. Coincidentally, Apple last year introduced a two-camera design with iPhone 7 Plus.

Apple also built depth detection functionality into its latest flagship iPhone with Portrait Mode, a feature that uses complex computer vision algorithms and depth mapping to create a series of image layers. The camera mode automatically sharpens certain layers, specifically those containing a photo's subject, and selectively unfocuses others using a custom blur technique.

Further embodiments of today's patent go into great detail regarding object recognition, description and matching procedures, while others describe potential applications like the tour guide assistant mentioned above. Other possible use cases include indoor navigation assets.

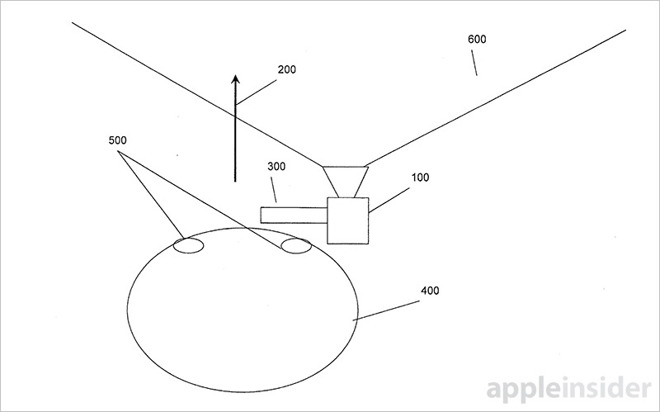

Apple's '581 patent, titled "Method for representing virtual information in a real environment," also originated in Metaio's labs and reveals a method of labeling points of interest in an AR environment. More specifically, the IP takes occlusion perception into account when overlaying virtual information onto a real-world object.

For example, a user viewing an AR city map on a transparent display (or on a smartphone screen depicting a live feed from a rear-facing camera) pulls up information relating to nearby buildings or landmarks. Conventional AR systems might overlay labels, pictures, audio and other media directly onto a POI, thus occluding it from view.

To compensate for shortcomings in computer vision systems, Apple proposes the use of geometry models, depth-sensing, positioning data and other advanced technologies not commonly seen in current AR solutions.

Applying 2D and 3D models allows for such systems to account for a user's viewpoint and their distance away from the POI, which in turn aids in proper visualization of digital content. Importantly, the process is able to calculate a ray between the viewing point and virtual information box or asset. These rays are limited by boundaries associated with a POI's "outside" and "inside" walls.
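
To make the ray idea concrete, here is a minimal 2D sketch that counts how many wall segments a ray from the viewer to a POI crosses. It uses a standard segment intersection test and is only an assumption about how such a check might look, not the method claimed in the patent; the function names and the floor-plan representation are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]
Segment = Tuple[Point, Point]


def _cross(o: Point, a: Point, b: Point) -> float:
    """Z component of (a - o) x (b - o); its sign gives the turn direction."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def segments_cross(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    """True if p1-p2 properly crosses q1-q2 (touching endpoints are ignored)."""
    d1 = _cross(q1, q2, p1)
    d2 = _cross(q1, q2, p2)
    d3 = _cross(p1, p2, q1)
    d4 = _cross(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0


def walls_crossed(viewer: Point, poi: Point, walls: Sequence[Segment]) -> int:
    """Count the wall segments the viewer-to-POI ray passes through."""
    return sum(1 for a, b in walls if segments_cross(viewer, poi, a, b))


# Hypothetical square building occupying x in [2, 4], y in [-1, 1].
BUILDING = [((2, -1), (2, 1)), ((2, 1), (4, 1)),
            ((4, 1), (4, -1)), ((4, -1), (2, -1))]
```

With the viewer at the origin, a POI in front of the building crosses no walls, one inside it crosses one, and one behind it crosses two. A renderer could use that count to decide whether a label should be drawn normally or styled as occluded.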

Again, Apple's patent relies on a two-camera system for creating a depth map of a user's immediate environment. With the '581 patent, this depth map is used to generate the geometric models onto which virtual data is superimposed.
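
The patent summary does not spell out how depth is computed, but a two-camera setup is commonly used for stereo triangulation, where depth equals focal length times the distance between the cameras, divided by the pixel disparity between the two views. The sketch below assumes an idealized, rectified camera pair; the parameter values in the usage note are made up for illustration.

```python
from typing import Optional


def depth_from_disparity(focal_px: float, baseline_m: float,
                         disparity_px: float) -> Optional[float]:
    """Depth in meters for a rectified stereo pair: Z = f * B / d.

    Returns None when the disparity is not positive, since such a point
    has no usable match between the two views.
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px
```

For example, with an assumed focal length of 1,000 pixels and a 1-centimeter baseline, a 5-pixel disparity corresponds to a depth of roughly 2 meters. Halving the disparity doubles the estimated depth, which is why distant objects are harder to range with closely spaced cameras.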

Whether Apple has plans to integrate either of today's patents into a shipping consumer product is unknown, but mounting evidence suggests the company is moving to release some flavor of AR system. The dual-sensor iPhone 7 Plus camera is likely the key to Apple's AR puzzle, as many current and past patents rely on depth sensing technology to generate and display virtual data.

Apple's object recognition patent was first filed in February 2014 and credits former Metaio CTO Peter Meier and senior design engineer Thomas Severin as its inventors. The AR labeling patent was filed in June 2014 and credits inventors Meier, Lejing Wang and Stefan Misslinger.