Apple's iOS 11 brings Augmented Reality to a huge installed base of over 380 million iOS devices with powerful A9, A10 Fusion or A11 Bionic chips. However, another form of AR is about to be introduced, exclusive to the company's new iPhone X. Here's a deeper look at Apple's big AR surprise.

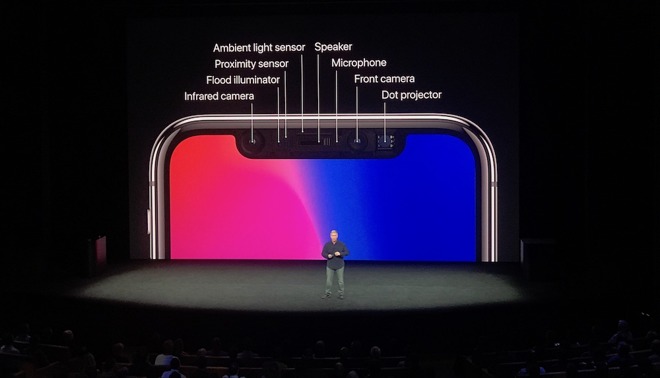

ARKit on your face with the TrueDepth camera

While Apple's existing platform for ARKit in iOS 11 targets a huge installed base of single-camera iPhone and iPad models, the company is also about to release something that no Android phone will have in the short term: a front facing depth camera capable of performing even more sophisticated AR effects.

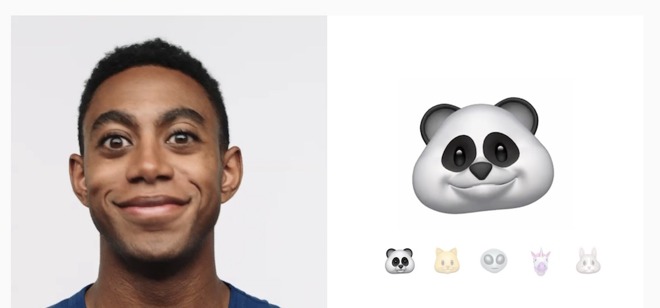

On iPhone X, ARKit apps can take advantage of its TrueDepth camera to make the user's face into the surface where 3D graphics are built. This uses facial geometry to track the user's pose, face shape and their expression, enabling Animoji (below), Portrait Lighting effects, masks and avatars; effectively, a very sophisticated new depth of AR effects for the front-facing camera.

Rather than just putting an enhancement layer on top of a photograph, Face Tracking in ARKit detects the user's face and movements and tracks positioning using its array of cameras and the phone's 6-axis motion sensors. This can be used to create "selfie effects" such as applying virtual makeup or tattoos, facial hair, glasses, jewelry or hats. It can also be used to perform "face capture," which takes real-time facial expressions to animate a 3D model such as a character avatar.
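To make "face capture" concrete: ARKit's Face Tracking reports each expression as a set of named coefficients between 0.0 and 1.0 (for example, how far the jaw is open), and an avatar can be posed by blending its neutral mesh toward per-expression target shapes. The sketch below is a plain-Python stand-in for that blending step, not ARKit code; the two-vertex "mouth," the offset table and the function name `apply_blend_shapes` are all hypothetical, chosen only to illustrate the idea.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]


def apply_blend_shapes(
    neutral: List[Vertex],
    deltas: Dict[str, List[Vertex]],
    weights: Dict[str, float],
) -> List[Vertex]:
    """Displace each neutral vertex by the weighted sum of expression offsets."""
    posed = []
    for i, (x, y, z) in enumerate(neutral):
        for name, weight in weights.items():
            if name not in deltas:
                continue  # the avatar has no shape for this expression
            w = min(max(weight, 0.0), 1.0)  # clamp to the 0..1 range
            dx, dy, dz = deltas[name][i]
            x, y, z = x + w * dx, y + w * dy, z + w * dz
        posed.append((x, y, z))
    return posed


# Hypothetical two-vertex "mouth": upper lip and lower lip.
neutral_mouth = [(0.0, 0.1, 0.0), (0.0, -0.1, 0.0)]
# Offsets for a fully open jaw: only the lower lip moves down.
mouth_deltas = {"jawOpen": [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0)]}

# One tracked frame reporting a half-open jaw.
half_open = apply_blend_shapes(neutral_mouth, mouth_deltas, {"jawOpen": 0.5})
```

Run once per camera frame with fresh coefficients, the same blend makes the avatar's mouth follow the user's. A real character would simply have thousands of vertices and dozens of expression shapes.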

Google's AR was Ahead before it fell so faR behind

Apple's new level of depth camera-based AR technology will begin building out a platform of tens of millions of iPhone X users just as Android's ARCore trickles out basic single-camera AR to a select few, premium-priced Android models.

Google isn't just a couple years behind in deploying AR software; the Android ecosystem is vastly behind in even creating a business model that could support the development of a mass market device with a depth camera capable of delivering the depth-based AR Apple is about to roll out to millions within the next month.

Oddly enough, Google was originally ahead of the game with its experimental Project Tango, a more ambitious form of AR that required a depth camera. The technology traces its lineage back to the same work at PrimeSense and FlyBy Media, both of which Apple acquired.

In addition to performing Visual Inertial Odometry, Tango also addresses SLAM (Simultaneous Localization And Mapping), which builds out a map of what the depth camera system "perceives" for later use. This growing map of your home and everywhere else you use Tango collects an expansive model of the world, something like Google's camera cars that create street views for Maps.
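The difference between tracking alone and tracking plus mapping can be shown with a toy example. This is a deliberately simplified 2D sketch, assuming perfect motion estimates; it is not how Tango or ARKit are implemented, and the `Tracker` class and its methods are hypothetical. It shows only the "mapping" half of SLAM (persisting what was seen); a real SLAM system would also use the stored map to correct drift in its own position.

```python
import math
from typing import Dict, Tuple


class Tracker:
    """Tracks a device pose; optionally remembers landmarks it has seen."""

    def __init__(self, keep_map: bool) -> None:
        self.x = 0.0
        self.y = 0.0
        self.heading = 0.0  # radians, 0 = facing along +x
        self.keep_map = keep_map
        self.landmarks: Dict[str, Tuple[float, float]] = {}

    def move(self, distance: float, turn: float) -> None:
        """Odometry step: turn in place, then move forward."""
        self.heading += turn
        self.x += distance * math.cos(self.heading)
        self.y += distance * math.sin(self.heading)

    def observe(self, name: str, rng: float, bearing: float) -> Tuple[float, float]:
        """Convert a range/bearing sighting into world coordinates."""
        angle = self.heading + bearing
        world = (self.x + rng * math.cos(angle), self.y + rng * math.sin(angle))
        if self.keep_map:
            self.landmarks[name] = world  # persisted for later sessions
        return world


# Odometry-only tracking: knows where the chair is right now, keeps nothing.
vio_only = Tracker(keep_map=False)
vio_only.move(1.0, 0.0)
vio_only.observe("chair", 2.0, 0.0)

# Mapping enabled: the same sighting is added to a growing map.
with_map = Tracker(keep_map=True)
with_map.move(1.0, 0.0)
with_map.observe("chair", 2.0, 0.0)
```

After these steps the first tracker's map is still empty, while the second has recorded the chair's position for later use.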

ARKit 1.0 doesn't attempt to map out the world as you use it, focusing instead on lightweight, immediate uses of AR to create explorable 3D visuals. However, it can be used in conjunction with GPS or iBeacon indoor location systems to provide AR in combination with known maps. Not including SLAM in ARKit was evidently an intentional decision rather than a gap in capability, because Apple has acquired companies working on interior mapping, specifically using SLAM techniques.

The greater technical complexity Google worked on in Tango, combined with its prerequisite, complex and expensive depth cameras to work at all, added up to a project that didn't ship in a workable form ordinary people could use. Further, rather than building out the hardware and software technology to deploy AR to the masses itself, Google relied on third parties to find some application of the experimental work it was doing.

Google's Tango danced in the wrong direction!

Across years of work, Google dribbled out an experimental Tango phone and tablet to about 3,000 curious researchers before teaming up with China's Lenovo to install a depth camera system on the back of the Phab 2 Pro "Tango" phone last year, followed by a similar Asus-built Zenfone AR earlier this year.

Lenovo Phab 2 Pro Tango phone

However, both of those Tango phones implemented depth-AR using the rear camera. That's a design decision that appears to make sense, but it renders depth-AR as a standalone gimmick that's only marginally better than single camera AR using VIO, despite making the phone significantly more expensive. CNET concluded that the Phab 2 Pro was "a bulky, heavy, mediocre handset with disappointing hardware, an old version of Android and no NFC."

Unlike Tango phones, iPhone X implements a front-facing TrueDepth sensor. Compared to the status quo, that seems backwards until you realize that, in addition to doing depth-based AR as a neat "trick," it also positions the depth sensor to do other valuable things: Face ID authentication, familiar Portrait Lighting effects on selfies, and an advanced type of face-based avatar filters that are already extremely popular among young Snapchat users.

Another reason why Apple's front-facing TrueDepth camera has significant mobile advantages over the rear-mounted depth camera system of Tango: depth cameras use a lot of energy, so expecting to use one to navigate through a store as your phone is held out in front of you (Tango's "Visual Positioning System" was actually one of the features Google presented at I/O this summer!) isn't really sensible. Further, using such a depth camera outside can be problematic because sunlight can overwhelm the IR illumination and dots that are projected by depth camera systems.

By putting its TrueDepth sensor on the front, Apple can turn it on only when needed (with frequent short bursts for Face ID, or only when users are actively recording Animoji). The system also only has to illuminate your face, rather than attempting to invisibly light up the entire environment.

Note that Apple points out that Face ID works "even in the dark," highlighting its engineering intent for use where a user would expect to have it work. That stands in contrast to Tango and Google's VPS, which ambitiously pretend to work everywhere imaginable, including large, open and outdoor environments where it is simply much harder to deliver AR-related SLAM or VIO as practical, functional features.

Our new #Tango-enabled Visual Positioning Service helps mobile devices quickly and accurately understand their location indoors. #io17 pic.twitter.com/1pYlCGM8eg

-- Google (@Google) May 17, 2017

Tango is a Newton. ARKit is an iPod

A big part of successfully deploying any new technology (or art) is finding a rooting in the familiar. If it's too new, nobody knows how to relate to it. However, if it's too common, there's little proprietary value in developing the technology. Apple commonly aims, not just to be first, but to hit the market with a practical, valuable solution in a way that nobody else has thought to deliver.

Apple hasn't always been so focused. In the early 1990s, it developed Newton as an ambitious tablet project, but didn't complete enough of the work to make it clearly and obviously useful at the price its new technology demanded. After years of work, Newton was essentially blindsided by Palm, which shipped a cheaper, less technically impressive but more practical, useful Pilot PDA.

Google has a similar problem with Tango: it's put lots of effort into developing technology that Apple intentionally chose not to implement (such as the SLAM mapping that plays a big role in Tango but isn't in ARKit 1.0), resulting in complexity and expense without a parallel increase in value for most mainstream users.

Academics can blog about how superior Tango is on paper, but if it can't deliver on its ambitious promises, and if nobody is using it because it's expensive to implement, and it never really gets updated and maintained and finished because of that lack of use in the real world, it doesn't really matter.

Hidden as plain as the notch on your face

In the same way that the bloggers who stole a prototype iPhone 4 back in 2010 had it in their hands but didn't even know it would feature a Retina Display or a gyroscope or introduce FaceTime, the basic design of iPhone X was splashed around by leakers who didn't really grasp that Apple's depth AR strategy was more than just a way to visualize Ikea furniture in a room.

TrueDepth was implemented as a way to securely identify and recognize the device's owner, enhance their selfies and pull them into an animated world of Animoji chats and third-party avatars.

There are some interesting applications for depth-based AR (requiring an actual depth camera, unlike most of ARKit) on the back of a mobile device. But there are already commercial solutions offering this (including Occipital's $379 external Structure Sensor for iPad) and they haven't exactly taken the world by storm.

Apple's novel implementation of TrueDepth on iPhone X makes the user the focus of its unique new depth camera. It combines a flood illuminator, which bathes the face in invisible infrared light that reflects back to the infrared camera, with a dot projector that creates a grid of landmark points to serve as a structure sensor.
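For a sense of how a projected dot pattern can yield depth: Apple has not published TrueDepth's internals, so what follows is only the textbook triangulation used by structured-light sensors in general, offered as an assumption about the broad principle. A projector and camera sit a known distance apart (the baseline); the closer a surface is, the further each dot appears shifted in the camera image (the disparity). The focal length, baseline and disparity values below are hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulate distance (meters) to a surface from one dot's image shift.

    focal_px     -- camera focal length, in pixels
    baseline_m   -- projector-to-camera separation, in meters
    disparity_px -- how far the dot shifted in the image, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive to triangulate a depth")
    return focal_px * baseline_m / disparity_px


# Hypothetical sensor: 600 px focal length, 25 mm baseline.
nose_tip = depth_from_disparity(600.0, 0.025, 50.0)  # larger shift, nearer
ear = depth_from_disparity(600.0, 0.025, 25.0)       # smaller shift, farther
```

Repeating this for every dot in the grid produces a depth value per landmark point, which is what lets the sensor treat a face as a 3D surface rather than a flat photo.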

Multiple paths to implement depth-based AR

There are a lot of different ways to implement this technology: Microsoft focused on IR mapping of the living room in front of Xbox Kinect games to track users' bodies. While novel, it hasn't delivered gameplay that's superior to other forms of motion controllers, and has lots of simplistic drawbacks. The system also adds expense, playing a role in making Microsoft's latest console generation too expensive to effectively compete until the company stripped out Kinect as a bundled feature.

Similarly, Google's Tango experiments that put a structure sensor on the back of big Android phones offered some potential for novel features related to mapping out objects in the room, but were too expensive to find an audience for what little they could actually do out of the box.

Apple's two-pronged approach brings single camera ARKit to an installed base of 380 million devices, acquainting users with the technology in a free update to iOS 11. In parallel, users can opt into the even more sophisticated world of front facing, TrueDepth camera AR on iPhone X, which looks at the user rather than the outside world behind the device.

And while some sought to ridicule Apple over the frivolity of demonstrating how the TrueDepth camera could be used to animate emoji, in reality Craig Federighi spent less time showing the feature off for iPhone X than Steve Jobs spent demonstrating how iPhone 4's gyroscope could be used to animate Jenga blocks. To intelligent observers, both were examples of how incredible new technology could be put to use by app developers once delivered to a large platform of users.

Outsmarting Google with secret development

Apple's efforts to find useful, attractive features for its TrueDepth system and use them to sell iPhone X in large volumes are so obvious in hindsight that Android makers are now rushing to copy them. This explains why Apple didn't brag about its implementation plans for TrueDepth AR until it had iPhone X nearly ready to sell.

Further, Apple's strategy for rolling out single camera AR to over 380 million users in iOS 11 while introducing its depth-based Face Tracking AR as an exclusive feature of iPhone X is only obvious now, far too late for copycat Android makers or their platform leader to quickly catch up.

Google has attempted to salvage parts of its depth-camera Tango work under a simpler single-camera implementation it recently rebranded as ARCore. But by failing to identify the most valuable and easiest to deploy technologies first, in tandem with developing a more advanced layer that could be sold as an exclusive premium, it's now far behind Apple's ARKit rollout and must start over from scratch on its depth-based AR, if it can even find anyone to adopt its second attempt at premium-priced Tango, modeled after Apple's work.

Apple's secrecy in developing a unique, innovative way to apply its TrueDepth technology essentially replicated the history of Google Wallet (steamrolled by Apple Pay), Android NFC Beam (run over by Bluetooth 4 Continuity) and Android fingerprint sensors (trounced by Touch ID). Being first with experimental new tech was clearly not as valuable as going bigger with a better implementation that could offer real value to buyers.