When iPhone launched ten years ago it took basic photos and no videos. Today's iOS 11 now achieves a new level of image capture with Depth, AI Vision and Machine Learning. In parallel, it hosts a new platform for Augmented Reality, which integrates what the camera sees and graphics created by the device. Here's a look at how it works.

AR is a major new advance in camera-related technology

This fall's release of iOS 11 introduced a series of new Application Programming Interfaces (APIs) related to cameras and imaging. An API allows third-party developers to leverage Apple's own code (referred to as a framework or sometimes a "kit") to do specialized, heavy lifting in their own apps.

The new Depth API lets developers build apps that figuratively stand on the shoulders of Apple's giant work in developing and calibrating iPhone 7 Plus and 8 Plus dual cameras, which can work in tandem to build a differential depth map used to selectively adjust portions of images based on how near or far from the camera they are.
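To make the idea of a depth map concrete, here is a minimal Python sketch. It is not Apple's Depth API; the function name, the distance threshold and the dimming factor are all illustrative assumptions. It treats an image and its depth map as equal-sized grids and darkens only the pixels farther away than a chosen distance, roughly the way a portrait effect separates a subject from its background.

```python
def dim_background(image, depth_map, max_subject_depth_m=1.5, dim_factor=0.5):
    """Return a copy of `image` with far-away pixels darkened.

    image:      rows of grayscale brightness values (0-255)
    depth_map:  rows of per-pixel distances in meters, same shape as image
    The default threshold and dim factor are made-up values for illustration.
    """
    result = []
    for pixel_row, depth_row in zip(image, depth_map):
        new_row = []
        for brightness, depth in zip(pixel_row, depth_row):
            if depth > max_subject_depth_m:
                # Background pixel: scale its brightness down.
                new_row.append(int(brightness * dim_factor))
            else:
                # Subject pixel: leave it untouched.
                new_row.append(brightness)
        result.append(new_row)
    return result


# A tiny 2x3 "photo": one near pixel (0.8 m) surrounded by a far wall (3 m).
image = [[200, 200, 200],
         [200, 180, 200]]
depth = [[3.0, 3.0, 3.0],
         [3.0, 0.8, 3.0]]
print(dim_background(image, depth))
```

Only the pixel at 0.8 meters keeps its original brightness; everything behind it is halved.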

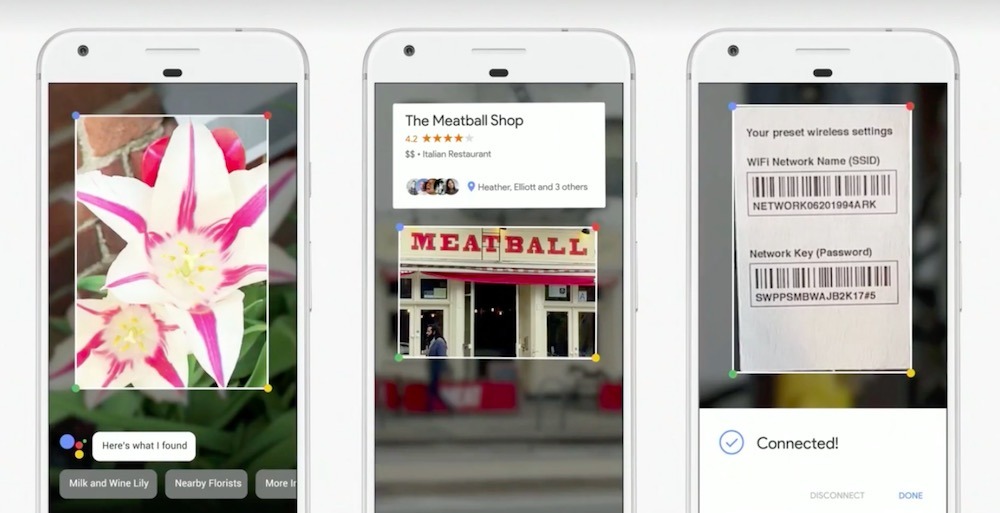

And with its sophisticated Vision framework and CoreML, Apple is allowing developers to tap into a library of pre-built intelligence for detecting faces, objects, text, landmarks and barcodes that are seen by the camera, and then even identify what objects are based on existing Machine Learning models.

A third major effort in iOS 11's intelligent camera imaging APIs supports the creation of Augmented Reality experiences as a platform for third-party developers, using a new framework Apple calls ARKit.

The most obvious examples of ARKit apps are games that build a synthetic 3D world of animated graphics that are overlaid upon the real world as seen by the camera.

There is also a wide range of other things that can be done in AR, from guided navigation to exploration of models to measuring and mapping out the real world, such as building out a house floor plan by simply aiming your camera at the corners.

Beyond just overlaying 3D graphics on top of real images, AR uses a combination of motion sensor data and visual input from the camera to allow a user to freely explore around 3D graphics rendered in space, visualizing complex animated scenes from any angle.

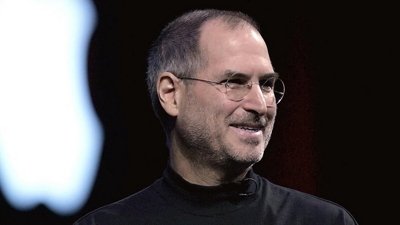

Steve Jobs introduces Core Motion on iPhone 4

Delivering a platform for AR app development is more difficult than it may appear. ARKit builds upon Apple's decade of experience in optimizing and tracking data from motion sensors, starting with the 3-axis accelerometer built into the original iPhone back in 2007 that enabled tilt games and gestures such as "shake to undo."

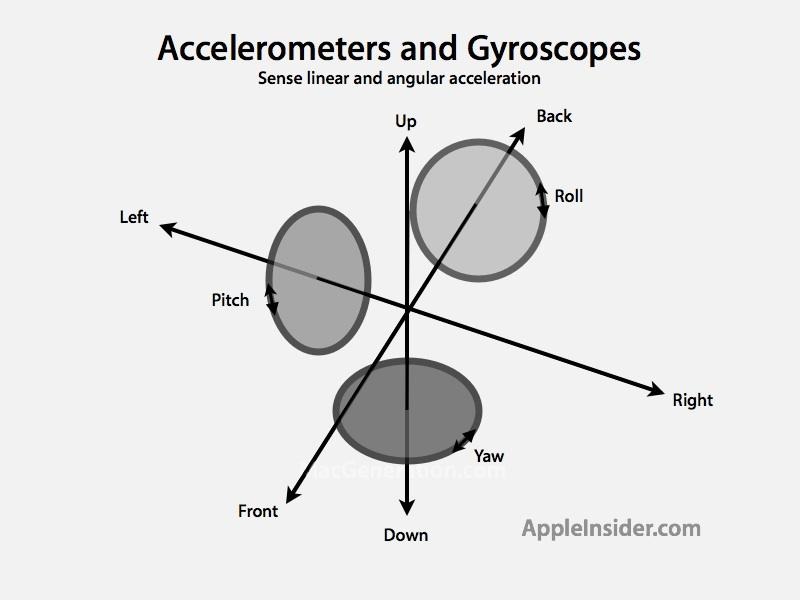

In 2010, iPhone 4 became the first smartphone with a 3-axis gyroscope. Coordinated with accelerometer tilt sensors and the digital compass, this gave the phone 6-axis motion sensing and rotation about gravity, enabling a new level of spatial awareness in games and utility apps.

Steve Jobs demonstrated using iPhone 4's gyroscopic positioning in space to play an indulgent two minute game of Jenga on stage at WWDC in 2010, where his stack of virtual blocks could rotate in tandem with phone movement, rather than just be tilted side to side.

At the time, this was an exciting new capability, eliciting whoops and cheers from the developer audience, engineers who were aware of what could be done on a mobile device with access to accurate, 6-axis motion data.

Forms of motion tracking had been deployed before in video game console controllers, such as Nintendo's Wii remote with accelerometers and Sony's SIXAXIS gyroscopic controller for PS3. However, Apple added a gyroscope to iPhone 4 even before the major mobile gaming devices: Sony's PSP Go lacked motion sensors and Nintendo only shipped its motion-aware 3DS the following year.

Jobs emphasized that iPhone 4's new gyroscope wasn't just a fanciful hardware gimmick, but was being delivered with new iOS Core Motion APIs that "give you extremely precise positioning information," enabling app developers to explore novel ways to use the data those sensors recorded. He also emphasized that the new hardware was being shipped on every iPhone 4, meaning it would quickly create a huge, uniform installed base for developers to target.

Five years of Core Motion evolution

Apple's then-new A4 custom silicon kept growing more powerful and sophisticated. For 2013's 64-bit, A7-powered iPhone 5s, Apple introduced a new low-power M7 motion coprocessor for efficiently monitoring the output of motion sensors and the digital compass, making it possible to collect and report background Core Motion data without constantly turning on the CPU.

One of the most obvious and valuable uses of motion data related to fitness tracking. The M8 coprocessor core in iPhone 6 enhanced this by adding a barometer for tracking elevation changes while climbing stairs or running up hills.

The next year, the always-on, power-efficient M9 Core Motion silicon was further tasked with monitoring the microphone to respond to Hey Siri commands on iPhone 6s. This technology also found its way into Apple Watch, where both Siri and fitness tracking were major features.

Core Motion + Camera vision = VIO

In 2015, Apple acquired Metaio, a company spun off from an internal Volkswagen project to develop tools for Augmented Reality visualizations. Along with previous acquisitions including its 2013 buyout of PrimeSense and later Faceshift, as well as subsequent buys including last year's Flyby Media, Apple was assembling the skills required to build and navigate a new 3D world on top of motion sensing and cameras.

The ultimate goal involved the sophisticated front facing TrueDepth sensor built into iPhone X, designed to revolutionize authentication using a detailed 3D imaging of the user's facial features. However, the platform for Face ID and facial expression tracking in video will only launch when the advanced new phone ships next month.

Many of the same technologies are arriving early in the form of single camera AR. New in iOS 11, devices with at least an A9 chip (incorporating the M9 Core Motion coprocessor, a base of more than 380 million iPhone 6s and later phones and newer iPads) can track motion sensor data for use in positional tracking in tandem with information from the camera using a technology called VIO (Visual Inertial Odometry).

VIO analyzes camera data ("visual") to identify landmarks it can use to measure ("odometry") how the device is moving in space relative to the landmarks it sees. Motion sensor ("inertial") data is used to fill in the blanks in providing complementary information that the device can compare with what it's seeing to better understand how it's moving in space.
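Apple has not published ARKit's internals, so the following is only a toy Python sketch of the general idea described above: a fast but drifting inertial estimate is repeatedly nudged toward what the camera reports. It uses a simple complementary filter on a single heading angle, ignoring angle wrap-around; real VIO systems track full 3D pose and typically use more elaborate estimators. The function name, the blend weight and the sample readings are all assumptions made for illustration.

```python
def fuse_heading(gyro_rates_dps, visual_headings_deg, dt_s=0.01, visual_weight=0.05):
    """Blend integrated gyroscope rates with camera-derived headings.

    gyro_rates_dps:      angular velocity samples in degrees per second
    visual_headings_deg: heading from landmark tracking for the same samples,
                         or None when the camera produced no estimate
    Returns the fused heading (degrees) after each sample.
    """
    heading = 0.0
    history = []
    for rate, visual in zip(gyro_rates_dps, visual_headings_deg):
        # "Inertial" step: integrate the gyro to predict the new heading.
        heading += rate * dt_s
        if visual is not None:
            # "Visual" step: pull the prediction toward what the camera saw.
            heading += visual_weight * (visual - heading)
        history.append(heading)
    return history


samples = 1000                      # 10 seconds at 100 samples per second
biased_gyro = [1.0] * samples       # stationary device, 1 deg/s sensor bias (assumed)
gyro_only = fuse_heading(biased_gyro, [None] * samples)
fused = fuse_heading(biased_gyro, [0.0] * samples)
print(round(gyro_only[-1], 2), round(fused[-1], 2))
```

With these made-up numbers, the gyro-only estimate drifts to about 10 degrees for a device that never moved, while the fused estimate settles near 0.19 degrees because each camera reading cancels most of the accumulated error.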

Essentially, VIO allows the system to create animated 3D graphics that it can visualize live in "6 degrees of freedom," following the device's complex movements along 6 axes: up/down, back/forth, in/out and its pitch, yaw and roll.
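One way to picture "6 degrees of freedom" is as a pose made of six independent numbers, three for position and three for orientation. The small Python sketch below is purely illustrative; the class, field and method names are hypothetical and are not ARKit types (ARKit itself reports the camera pose as a transform matrix).

```python
from dataclasses import dataclass, fields


@dataclass
class Pose6DoF:
    x: float = 0.0      # sideways, meters
    y: float = 0.0      # up/down, meters
    z: float = 0.0      # toward/away from the scene, meters
    pitch: float = 0.0  # nose up/down, degrees
    yaw: float = 0.0    # turn left/right, degrees
    roll: float = 0.0   # tilt side to side, degrees

    def moved(self, **deltas):
        """Return a new pose with the given per-axis changes added.

        Adding angle deltas like this is a simplification; composing real
        3D rotations needs matrices or quaternions.
        """
        current = {f.name: getattr(self, f.name) for f in fields(self)}
        for axis, delta in deltas.items():
            if axis not in current:
                raise ValueError(f"unknown axis: {axis}")
            current[axis] += delta
        return Pose6DoF(**current)


pose = Pose6DoF().moved(y=0.2, yaw=45.0)   # raise the phone and turn it
print(pose)
print(len(fields(Pose6DoF)))               # number of independent values
```

A tilt-only sensor would update just the three angles; tracking all six values is what lets a rendered scene stay fixed in place as the user walks around it.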

Paired with Scene Understanding, the camera can identify real-world visual landmarks to define as horizontal surfaces to serve as the foundation for a 3D rendered graphic scene. Further, the camera is also used for Light Estimation, which is used to render adaptive lighting and shadows. The result is a photorealistic 3D model, rendered in space on top of real-world video captured by the camera, that can be freely viewed from any angle simply by tilting and rotating the device.
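As a rough illustration of how a horizontal surface might be found from tracked landmarks, the Python sketch below groups 3D feature points by height and picks the height shared by the most points. This is an assumed, simplified approach for explanation only, not a description of how ARKit's Scene Understanding works; the function name, bin size and sample points are invented.

```python
from collections import Counter


def find_horizontal_plane(points, bin_size_m=0.05, min_points=3):
    """Return the estimated height (meters) of the dominant horizontal plane.

    points: list of (x, y, z) landmark positions with y as the vertical axis
    Returns None when no height band contains at least `min_points` landmarks.
    """
    # Count how many landmarks fall into each band of heights.
    bins = Counter(round(y / bin_size_m) for _, y, _ in points)
    if not bins:
        return None
    best_bin, count = bins.most_common(1)[0]
    if count < min_points:
        return None
    # Average the heights of the landmarks in the winning band.
    heights = [y for _, y, _ in points if round(y / bin_size_m) == best_bin]
    return sum(heights) / len(heights)


landmarks = [
    # Four points that lie on a tabletop about 0.70 m high.
    (0.1, 0.70, 1.0), (0.4, 0.71, 1.2), (0.2, 0.69, 0.9), (0.5, 0.70, 1.1),
    # Two unrelated points elsewhere in the room.
    (0.3, 1.40, 2.0), (0.9, 0.10, 1.5),
]
print(find_horizontal_plane(landmarks))
```

The four tabletop points outvote the stray ones, so the sketch reports a surface at roughly 0.70 meters, which a renderer could then use as the base for a virtual object.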

The Machines running on #ARKit powered by #UE4! https://t.co/xC4hum7dhk @EpicGames @UnrealEngine #ar #AugmentedReality @Apple pic.twitter.com/5rOpUIlDuA

— Directive Games (@DirectiveGames) July 21, 2017

VIO lets ARKit apps identify surfaces such as walls, floors and tables, and then place virtual objects in space, allowing the user to see the world visible to the camera augmented with computer graphics built on these surfaces, and then freely explore them the same way we would examine objects or environments in the real world: by looking up, down, over, under and orbiting around them.

AR will be hard for Android to follow

AR's VIO might sound simple conceptually, but it requires precise tracking of Core Motion sensors and visual analysis of thousands of moving points across frames of a video stream. Those mountains of data have to be calculated instantly and then the results applied to redrawing the scene in real-time, in order to render a convincing AR experience. ARKit requires very fast silicon logic on the level of Apple's latest three generations of A9, A10 Fusion and A11 Bionic chips.

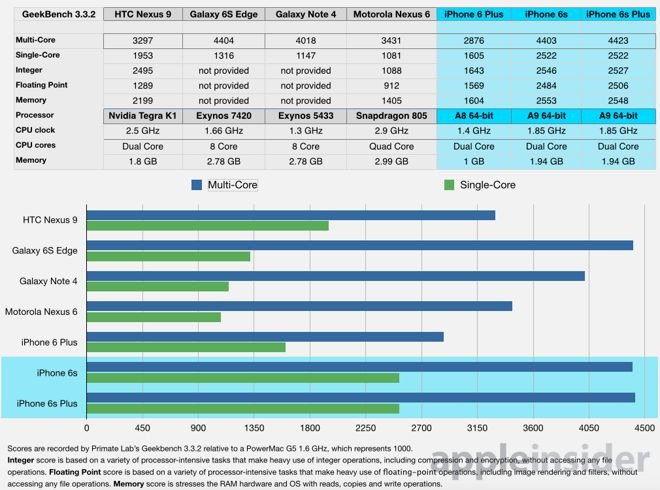

Across the last two years, Apple has sold over 380 million iOS devices that are ready to handle single camera VIO-based AR. However, the top Android flagships of 2015 were powered by Qualcomm and Samsung chips that were only around half as fast as Apple's A9.

Two years ago, it wasn't as obvious why the superior speed of Apple's A9 was so important. But today, none of its peers can run Android's equivalent of ARKit. Google even abandoned its "own" Nexus 6 and Nexus 9 after crowing about how fast their Tegra K1 and overclocked Qualcomm Snapdragon chips were at the time.

Even top Androids sold over the last year were significantly slower than the A9 in single core performance. And while it appears that VIO calculations can take advantage of multiple cores using multithreading, another problem for the premium Androids with chips fast enough to do AR is that their vendors have generally saddled them with extremely high-resolution displays, meaning they'd have more work to do just to render the same scene.
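The extra rendering work implied by a higher-resolution display can be estimated with simple arithmetic. The Python sketch below compares pixels drawn per second for a 1334×750 panel (the resolution of the 4.7-inch iPhone 6s) and a 2560×1440 panel (a resolution used on a number of premium Android phones of the period). The 60 frames per second target is an assumption, and real rendering cost depends on much more than pixel count.

```python
def pixels_per_second(width, height, fps=60):
    """Pixels that must be drawn each second at the given resolution."""
    return width * height * fps


iphone = pixels_per_second(1334, 750)
qhd = pixels_per_second(2560, 1440)
print(f"{qhd / iphone:.2f}x the pixels to fill for the same scene")
```

By this crude measure, the higher-resolution panel has to fill more than three and a half times as many pixels to show the same AR scene.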

Apple's pairing of extremely fast silicon with displays using a manageable resolution has enabled it to rapidly deploy its AR platform across a huge existing installed base capable of running it.

A larger problem for AR on Android is the precise accuracy required between what the camera sees and what its motion sensors report. Androids are not only typically forced to use slower chips powering ultra high-resolution displays, but also face fragmentation in that they make use of a much more diverse selection of motion sensors that are harder to calibrate and support.

When Google outlined its own effort to bring ARCore development to Android, it could only support two devices: HTC's Pixel and Samsung's S8. That's only around 25 million devices sold over the last year, significantly less than one-tenth the size of Apple's existing iPhone installed base capable of running ARKit apps.

Keep in mind that these figures describe only the maximum potential number of users who could currently be interested in AR apps. Just as with phone cases, not everyone will want to use AR apps. But when you shop for phone cases, the uniform installed base of iPhones gives you far broader options than those specially fit to a specific Android model.

There's a threshold of critical mass that needs to be reached before developers can build a business case for investing in ARCore, as anyone with a Windows Phone can relate. If there weren't, we'd see more apps specific to Galaxy phones, for example.

Despite years of efforts trying to entice or even pay developers to adopt its proprietary APIs such as Chord, Samsung hasn't really managed to gain any traction for apps that only work on its brand of Android phones. After breathlessly announcing that "Samsung is fully committed to making Chord the top sharing protocol for app developers," the API does not appear to have outlived its 2013 press release.

AR on the iOS App Store

Additionally, while basic Android already has a very large global installed base, it is also failing to serve as an apps platform that's comparably lucrative to the iOS App Store. Despite serving twice the trackable downloads of the App Store, Google Play only brings in about half the revenues. In part, that's because Android users don't expect to pay for apps. That has changed the character of Android apps to a mostly free-with-ads model.

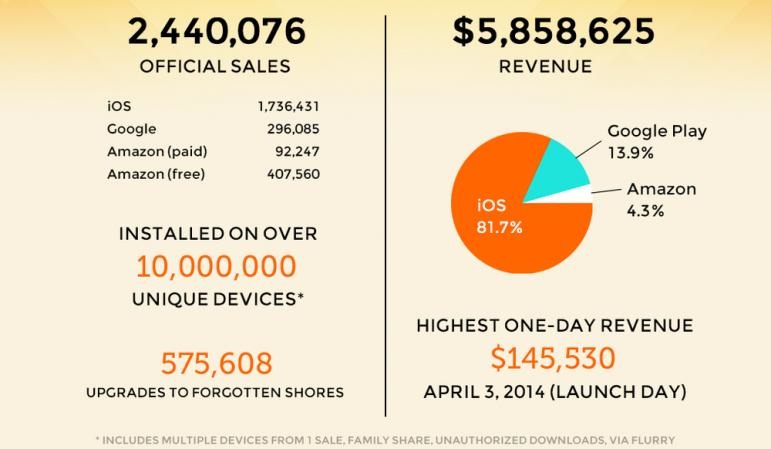

Last year, Ustwo Games reported that 40 percent of its iOS users paid to install its award-winning title "Monument Valley," while only 5 percent of Android users paid. The rest were largely downloading pirated copies.

Of the 2.4 million sales of the game, 1.7 million were on iOS while fewer than 0.3 million occurred on Google Play. Over 80 percent of the developer's revenue came from iOS users.

Those statistics play a major role in why gaming, productivity apps and other mobile software tools are commonly built exclusively for iOS or are released on iOS first, and are commonly brought to Android only later in a version with ads.

This means that when AR comes to Android, it will likely be focused on advertising, not on utility or in paid gaming or enterprise apps that push the boundaries of what's possible with the new technology.

Apple's AR is a platform, not a feature

Notably, as we predicted this summer, Apple's approach in building AR experiences, both using standard iOS cameras and with depth, is part of its apps development platform, not just a trick it performs internally.

In contrast, at this summer's Google IO, the company showed off its new Google Lens features based on Augmented Reality, Machine Learning and Optical Character Recognition, but didn't really expose these as a third-party developer platform. Instead they were rolled into its cloud-based Google Services, a feature that attaches Android to Google's proprietary services and paid placement advertising and the surveillance tracking required for monetization.

Unlike Apple, Google doesn't earn its revenues from selling hardware, supported by its apps platform. Google has been talking down mobile apps for years, crafting a narrative that suggests that nobody actually uses apps and that we should all go back to using the web, where it is so much easier for Google to track and subsequently monetize user behavior. This is not unlike Microsoft's earlier Windows Phone advertising that basically asked: "why would you want apps?"

The reality is that third-party software is a major reason why people are attracted to iOS and stick with it rather than buying smartphones or tablets as a commodity device like PCs, netbooks or TVs that can all display generic streams of web-based content.

With all of its work in sophisticated camera imaging, depth sensing, machine vision and AR, Apple is working to ensure that apps remain the center of how we use mobile technology. So far, that strategy is winning.

However, there's also another frontier in AR that's about to deploy. It's been kept a tight secret, entirely to make a splash in a way Google will find harder to copy, as the next article will outline.

Daniel Eran Dilger

10 Comments

Nice, informative article. The only part I disagree with is "AR's VIO might sound simple conceptually, but ..." It doesn't sound simple at all. It's amazing that devices the size of an iPhone can pull this off in real time.

Wasn’t the gyroscope in the iPhone 4 three-axis?

http://www.memsjournal.com/2011/01/motion-sensing-in-the-iphone-4-mems-gyroscope.html

Accelerometers measure change in velocity (values can be integrated and combined with gyro data to measure change in position). Relatively low error but error compounds over time.

Gyroscopes measure change in orientation. Relatively low error but it compounds when integrated over time and error drastically affects absolute position calculations.

Compass measures fixed orientation relative to the Earth's magnetic field, which is weak and can be masked by nearby magnets and metals. Relatively high error but doesn't increase with time and can be improved with calibration. Can be used to minimize/stop long term gyro error buildup through a computational feedback loop.

GPS measures absolute position. Similar to compass, error is relatively high but doesn't increase with time and can be used to manage smooth and accurate position tracking when incorporated into a feedback loop with the differential position data coming from accelerometer and gyroscope. Requires line of sight with satellites (other tech required for indoors). Error should drastically reduce next year with new satellite network (and associated next gen receiver chips) coming online.

Now, with VIO, camera data is incorporated into this feedback loop for even more smooth and exact output.