The third developer beta of iOS 13 tackles a long-standing problem with video calls: realistic eye contact between participants.

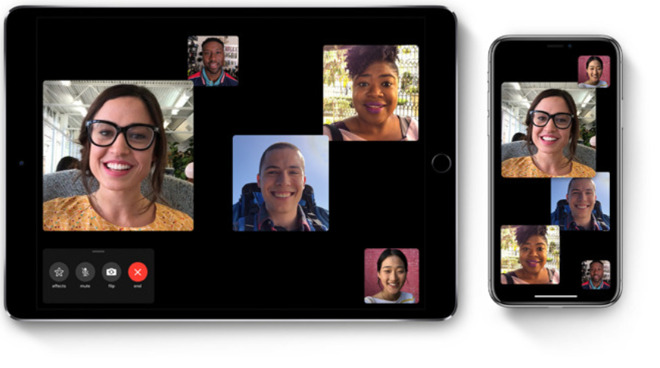

If you've ever used FaceTime to call someone, you've probably noticed something a little unnatural about the way the call looks. That is largely because video calls tend to make it look like the participants aren't maintaining eye contact with each other.

After all, in a video call, a user is looking at the screen, not into their front-facing camera. Rather than appearing to look "at" the other person "through" the display, the user seems to be looking at the other person's chin or mouth.

Apple has started beta testing a solution for this, with the new "FaceTime Attention Correction" feature in iOS 13. It seems to use image manipulation to make it appear as though both participants are maintaining realistic eye contact with each other, though it is not currently known how Apple has achieved this effect.

Image Credit: Will Sigmon

The feature was discovered and made widely known by Mike Rundle on Twitter.

Haven't tested this yet, but if Apple uses some dark magic to move my gaze to seem like I'm staring at the camera and not at the screen I will be flabbergasted. (New in beta 3!) pic.twitter.com/jzavLl1zts

-- Mike Rundle (@flyosity) July 2, 2019

Currently, the feature is being tested only in the developer beta, and only on the iPhone XS and iPhone XS Max. It can be turned on or off within FaceTime's settings and should show up soon in the iOS 13 public beta. Because it is currently enabled only on those devices, it is unclear whether it will be restricted to models with a TrueDepth camera array or made available on all iOS devices.