Twitter on Wednesday said it is trialing a new feature called "Safety Mode" that automatically blocks accounts sending harmful or unwanted tweets.

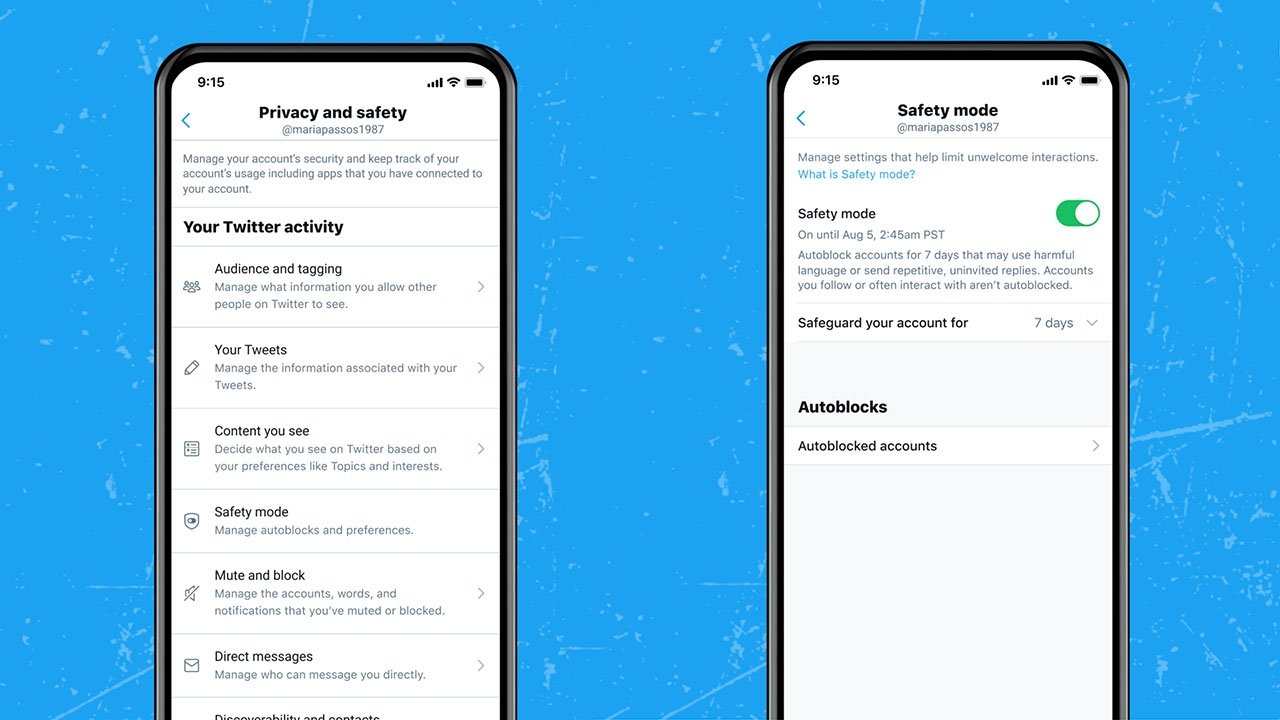

Announced in a post to the company's blog, "Safety Mode" temporarily blocks accounts determined to be using harmful language or sending repetitive and uninvited replies.

The system considers a tweet's content and the relationship between an author and replier to assess the "likelihood of a negative engagement." If a tweet or reply is deemed to fall outside Safety Mode's parameters, the account responsible for it will be blocked for seven days.

It is unclear what data points are used to make a determination, but Twitter says existing relationships are considered and accounts that a user follows or frequently interacts with will not be autoblocked.

When blocked, an account cannot follow the user who enabled Safety Mode, see that user's tweets or send that user direct messages. Users, meanwhile, can review tweets flagged by Safety Mode and view information about blocked accounts at any time, Twitter says. Autoblocks can be viewed and reversed in Settings.

Twitter developed Safety Mode to promote "healthy conversations." The company conducted listening and feedback sessions with partners that have expertise in online safety, mental health and human rights.

Safety Mode is rolling out to a small group of users on iOS, Android and the web. Twitter plans to observe and improve the feature before taking it live for all users.