Apple is considering ways for Siri to provide personalized results by recognizing the identity of the person speaking to it, with the added possibility of limiting queries to just one user's voice.

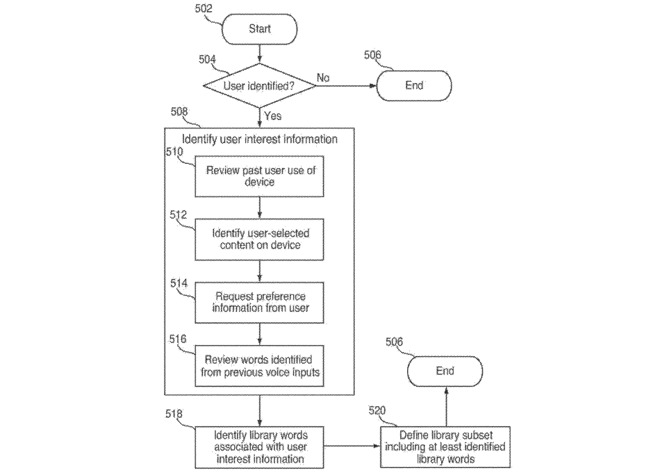

The patent for "User profiling for voice input processing," granted by the U.S. Patent and Trademark Office on Tuesday, details how a voice recognition system could identify the user by their speech and match them to a pre-existing registered user profile. Identification can be performed in a variety of ways, including through biometric information, which the patent suggests could be a "voice print."
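As a rough illustration of matching a speaker to a registered profile, the sketch below compares a voice print against enrolled ones. It assumes a voice print is a short numeric feature vector and that cosine similarity with a fixed threshold is the comparison; the patent as described here specifies neither, and the names `identify_speaker`, `ENROLLED`, and the threshold value are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify_speaker(voice_print, enrolled_profiles, threshold=0.8):
    """Return the best-matching enrolled user, or None if nobody clears the threshold."""
    best_user, best_score = None, threshold
    for user, enrolled_print in enrolled_profiles.items():
        score = cosine_similarity(voice_print, enrolled_print)
        if score >= best_score:
            best_user, best_score = user, score
    return best_user


# Hypothetical enrolled voice prints (illustrative values, not real biometric data).
ENROLLED = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.1, 0.8, 0.5],
}

print(identify_speaker([0.88, 0.12, 0.31], ENROLLED))
print(identify_speaker([1.0, -1.0, 0.0], ENROLLED))
```

Returning `None` for an unrecognized voice is what would let an assistant fall back to generic results, or refuse a request that is restricted to one user.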

That profile would help the digital assistant perform tasks, including ones that can be performed only for that user.

For example, the user could ask for new messages to be read out, and the assigned user profile would restrict the messages to those received by that user and no one else. This could also extend to other content stored on a device, such as photographs or videos taken by the user.

As the user continues to use the assistant, the patent suggests the voice recognition system could take note of the words and style of language used, constructing a library for that specific user. This could include words corresponding to content metadata, or to applications the user is likely to use.

The system could also analyze previous usage of the device, like the most commonly used apps or frequently used contacts, to assist in building the library. These sorts of elements would also be given a weighting to make it more likely that associated terms will be recognized in requests that use the library, rather than for general queries by other users.
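One way to picture the weighted library is as a per-user table of terms that nudges recognition toward words the user is known to use. The sketch below is a simplified assumption, not the patent's method: the `UserLibrary` class, the additive bonus, and the candidate scores are all hypothetical.

```python
from collections import Counter


class UserLibrary:
    """Per-user vocabulary with weights built from past requests and device usage."""

    def __init__(self):
        self.term_weights = Counter()

    def observe(self, text, weight=1):
        """Record terms from a request, contact name, or app name."""
        for term in text.lower().split():
            self.term_weights[term] += weight

    def boost(self, hypothesis, per_unit=0.05):
        """Bonus proportional to the summed weight of known terms in the hypothesis."""
        return per_unit * sum(self.term_weights[t] for t in hypothesis.lower().split())


def rescore(hypotheses, library=None):
    """Pick the best transcription from (text, recognizer_score) pairs.

    Without a library (an unidentified speaker), the recognizer's own score decides.
    """
    def total(item):
        text, score = item
        return score + (library.boost(text) if library else 0.0)

    return max(hypotheses, key=total)[0]


library = UserLibrary()
library.observe("Siobhan", weight=3)  # hypothetical frequently used contact

candidates = [("text shiv on", 0.60), ("text siobhan", 0.50)]
print(rescore(candidates))           # general query, no profile applied
print(rescore(candidates, library))  # same audio, identified user's library applied
```

Keeping the bonus separate from the recognizer's score means the same candidates can be ranked differently for an identified user and for an unknown speaker, which matches the distinction drawn above between library-backed requests and general queries.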

Beyond enhancing accuracy by recognizing the user's voice, the system has the potential to solve a number of existing usability problems with Siri. Currently, Siri is not capable of supporting multiple users, which opens up the possibility of misuse by other individuals. It is also not immune to errant inputs, such as one during a recent speech in the U.K. Parliament.

Apple files a large number of patent applications with the USPTO every week, and many are granted to the company. The existence of a published patent is not a guarantee that the ideas it describes will make their way into commercial products.

That being said, the appeal of multi-user support in Siri for HomePod, as well as the fact that it is a software-based concept that does not require hardware changes, gives it a good chance of being produced. Multi-user support was also reportedly spotted in an iOS 11.2.5 beta, with code strings suggesting the groundwork to recognize multiple voices and provide tailored results was in place, but not fully implemented.

It's also arguable that Apple has already put this style of thinking for digital assistants into practice, in a slightly different way.

On Thursday, Apple's Machine Learning Journal described how Siri's results were improved by the creation of geolocation-based language models (Geo-LMs) in the United States. By taking the location of the user into account, the Geo-LMs used localized training to provide better recognition of local businesses and points of interest than the country-wide general language model.
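The general idea of choosing a localized model with a country-wide fallback can be sketched as follows. This is only an illustration under stated assumptions: the rectangular regions, their coordinates, and the model names are hypothetical, and the article does not say how Apple defines its regions or selects a model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """Hypothetical rectangular region that has its own localized language model."""
    name: str
    min_lat: float
    max_lat: float
    min_lon: float
    max_lon: float

    def contains(self, lat, lon):
        return (self.min_lat <= lat <= self.max_lat
                and self.min_lon <= lon <= self.max_lon)


# Illustrative bounding boxes only; not Apple's actual region definitions.
REGIONS = [
    Region("san_francisco_bay", 37.0, 38.5, -123.0, -121.5),
    Region("boston", 42.0, 42.7, -71.5, -70.8),
]
GENERAL_MODEL = "general_us"


def select_language_model(lat=None, lon=None):
    """Return a localized model name if the location falls in a known region."""
    if lat is None or lon is None:
        return GENERAL_MODEL  # location unavailable, use the general model
    for region in REGIONS:
        if region.contains(lat, lon):
            return f"geo_lm_{region.name}"
    return GENERAL_MODEL  # outside every region, use the general model


print(select_language_model(37.77, -122.42))
print(select_language_model(39.74, -104.99))
```

The fallback matters as much as the lookup: a user outside every covered region, or one who has not shared a location, still gets the general model rather than no recognition at all.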