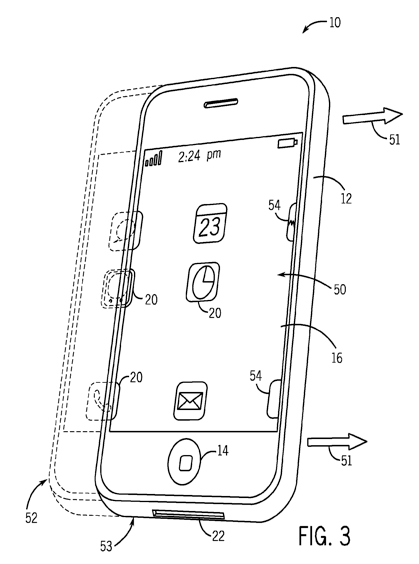

Originally filed in February 2008, the application describes various methods that might make it easier to operate a touchscreen device or view a mobile screen while the device is in motion, whether the user is riding in a vehicle or walking. The document details a number of possible solutions, including adjusting the display using motion data and screen properties, scaling selectable images, varying the input region on a touchscreen device, and incorporating pressure and location values.
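As a rough illustration of display adjustment using motion data and screen properties, the Python sketch below shifts the rendered image opposite to the device's measured movement, using the screen's pixel density to convert millimetres into pixels. It is not taken from Apple's filing: the function name, the assumption that displacement has already been estimated from motion sensors, and the clamping limit are all hypothetical.

```python
# Hypothetical sketch, not Apple's method: shift the on-screen image opposite
# to the device's measured displacement so it appears steadier to the viewer.
MM_PER_INCH = 25.4


def compensating_offset_px(displacement_mm, dpi, max_offset_px):
    """Return an (x, y) pixel offset that counteracts a device displacement.

    displacement_mm: (dx, dy) device movement in millimetres, assumed to be
        already estimated from motion-sensor data.
    dpi: the screen's pixel density (a "screen property").
    max_offset_px: cap on the shift so content never slides too far.
    """
    px_per_mm = dpi / MM_PER_INCH
    dx_mm, dy_mm = displacement_mm
    offset = []
    # Move the image the opposite way from the device's movement, then clamp.
    for d in (-dx_mm * px_per_mm, -dy_mm * px_per_mm):
        clamped = max(-max_offset_px, min(max_offset_px, d))
        offset.append(round(clamped))
    return tuple(offset)
```

On a 254-dpi screen (10 pixels per millimetre), `compensating_offset_px((1.0, -2.0), dpi=254, max_offset_px=50)` returns `(-10, 20)`. The cap keeps a large jolt from sliding content off the edge of the screen.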

"The instability, whether produced by the environment or the user, may cause user interface challenges," the patent application reads. "For example, a vibrating screen may make it difficult for a user to view images on the screen. Similarly, a moving screen or input object may make it difficult for a user to select an item on a touch screen."

The patent application describes a range of scenarios in which this technology could help, from everyday situations to highly specialized ones.

"The screen may be part of a system used in a high vibration environment such as a tank monitoring and control system for a chemical processing plant or a personal entertainment system located within an airplane seat back," the patent reads. "Similarly, the screen may be part of a portable electronic device that is accessed in an unsteady environment such as a subway or moving vehicle. In another example, the screen may be part of a device used by a user with limited or impaired motor control."

The filing is similar to one revealed by AppleInsider earlier this year, which described an iPhone-like device with a front-facing video camera and software that would adjust itself while the device was in motion. That application described technology that would detect "signatures of motion," to which the iPhone software would respond by enlarging areas of the screen to make them easier to see and touch.
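The idea of enlarging on-screen areas in response to motion can be sketched in a few lines of Python. This is a hypothetical example, not the method in either filing: it summarizes recent accelerometer readings as a root-mean-square magnitude (assuming gravity has already been removed) and grows a circular touch target in proportion, up to a cap. The `gain` and `max_scale` values are arbitrary placeholders.

```python
import math


def motion_intensity(samples):
    """Root-mean-square magnitude of recent acceleration samples (m/s^2).

    samples: list of (ax, ay, az) tuples with gravity already removed
    (an assumption of this sketch).
    """
    if not samples:
        return 0.0
    total = sum(ax * ax + ay * ay + az * az for ax, ay, az in samples)
    return math.sqrt(total / len(samples))


def target_scale(intensity, gain=0.25, max_scale=2.0):
    """Grow touch targets linearly with motion intensity, capped at max_scale."""
    return min(max_scale, 1.0 + gain * intensity)


def touch_hits_target(touch, center, base_radius, scale):
    """True if a touch lands inside the (possibly enlarged) circular target."""
    dx = touch[0] - center[0]
    dy = touch[1] - center[1]
    return math.hypot(dx, dy) <= base_radius * scale
```

A single reading of (3, 4, 0) m/s² gives an intensity of 5.0, which with the default gain saturates the scale at 2.0, so a touch 30 pixels from the center of a 20-pixel-radius target would now register.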

In another patent filing, Apple describes a method to use a Bluetooth module to process non-Bluetooth signals. The document details how a device might be able to process signals received from a source that uses a different set of data rates, or conversely how a device might be able to transmit similar signals.

It goes on to say that a non-Bluetooth transmitter could potentially send out a non-Bluetooth signal at a data rate a Bluetooth receiver could read.

"A Bluetooth receiver, such as a portable media player or smart phone with Bluetooth circuitry, can receive the non-Bluetooth signals sent at the particular data rate using the Bluetooth circuitry," the document reads. "In one embodiment, the Bluetooth receiver can 'over-sample' the signal or transmission of the non-Bluetooth packet sent at the particular data rate using one or more data rates associated with the Bluetooth circuitry."
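Over-sampling can be illustrated with a simplified bit-level model in Python. The sketch assumes, hypothetically, that the receiver's sample rate is an exact integer multiple of the transmitter's data rate and that sampling starts on a bit boundary; real radios also have to deal with modulation, clock drift and synchronization, which are ignored here. For instance, sampling at Bluetooth's 1 Mbit/s basic rate a signal sent at a hypothetical 250 kbit/s gives a factor of 4. The function names are illustrative only.

```python
def oversample(bits, factor):
    """Model a receiver clocked `factor` times faster than the transmitter:
    each transmitted bit is observed `factor` consecutive times."""
    return [b for b in bits for _ in range(factor)]


def recover_bits(samples, factor):
    """Recover the slower bit stream by majority vote over each group of
    `factor` samples. Assumes samples are aligned to bit boundaries; a
    trailing partial group is dropped, and ties resolve to 0."""
    if factor < 1:
        raise ValueError("factor must be >= 1")
    bits = []
    for i in range(0, len(samples) - factor + 1, factor):
        group = samples[i:i + factor]
        bits.append(1 if 2 * sum(group) > factor else 0)
    return bits
```

Majority voting over each group lets the receiver tolerate an occasional corrupted sample: flipping one of the four samples for a 1 bit still decodes as 1.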

Finally, another patent application revealed this week describes a method for automatically transforming complex data structures from one programming language into another. The document notes that techniques for bridging data between different programming languages already exist; however, they do not provide functionality for converting more complex data into a different language.

"For example, a linked list object defined in a first programming language often has no direct transformation to a corresponding list data structure supported by a second programming language," the application states. "Thus, an improved technique for transforming data structures between programming languages or systems is needed."
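The linked-list example in the quote can be made concrete with a small Python sketch. Here a hypothetical `Node` class stands in for a linked list object from the "first" language, and the conversion walks the chain and copies each value into a native Python list, which plays the role of the second language's list structure. This only illustrates the problem the filing describes; it is not Apple's technique.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Node:
    """A singly linked list cell standing in for a linked list object
    defined in a "first" programming language (hypothetical)."""
    value: Any
    next: Optional["Node"] = None


def linked_to_list(head):
    """Walk the linked list and copy each value into a native list."""
    out = []
    seen = set()
    node = head
    while node is not None:
        # Guard against circular lists, which would otherwise loop forever.
        if id(node) in seen:
            raise ValueError("cycle detected in linked list")
        seen.add(id(node))
        out.append(node.value)
        node = node.next
    return out


def list_to_linked(values):
    """Rebuild a linked list from a native list (the reverse direction)."""
    head = None
    for value in reversed(values):
        head = Node(value, head)
    return head
```

`linked_to_list(Node(1, Node(2, Node(3))))` returns `[1, 2, 3]`. The cycle check matters because a naive walk over a circular linked list would never terminate.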

Neil Hughes

14 Comments

Apple: Skating to where the puck WILL be.

To keep the screen coordinated with your eye, you'd have to wear a sensor on your head. If in your hand, it could still move relative to your eye if bouncing. But maybe that will be up to the guy holding it. :>)

I won't hold my breath on this one.

What I don't get is why Apple doesn't snap up Universal Display Corporation. They have 950 patents (!) for next-gen LED/OLED displays, which is one-half of what a computer is. If Apple ponied up $278 million for PA Semi to buy their brains, then spending $400-$500 million to own a large chunk of the licensable displays into the future would seem a brilliant idea. That way they could keep the best for Apple and collect licensing fees for OLED that doesn't fit in their product mix.

Are you listening SJ?

I think this is very unlikely to be implemented on the standard iPhone interface. Your head and hands are all moving independently; there's no way the iPhone can help sync up what your left hand, right hand and eyes are all doing to make a button push more accurate. In fact, your brain and body are already compensating to some degree, and having a "floating interface" would probably throw your brain off more than help. I'm not sure if it would help when reading, but the fact that words might disappear off the edges while the screen "floats" might be very annoying. This seems more like a fun little iPhone app that you turn on to amuse friends.

Yeah, it would be an interesting dynamics problem. Knowing how the display is moving is one thing, but predicting how the observer is moving due to the same inputs is a whole other problem.

A few cameras use digital image stabilization, which would be similar to stabilizing a display image; but most use physical stabilization of either the lens elements or the sensor itself because it's more effective due to the shorter feedback loop.

I could, however, see this being useful in high-vibration environments where the vibrations are constant and predictable and the device is mounted on something. In that case it would be a similar problem to noise-cancelling headphones, which are good at damping constant background noise (like when flying) but which allow more transient noises (talking) to be easily heard.