As the Siri virtual assistant stands to gain hordes of new users around the world with the iPhone 5s and 5c, Apple is looking to improve voice recognition accuracy through geolocation and localized language models.

Apple's iOS devices already boast a number of voice-recognizing input features, including Siri and speech-to-text, but worldwide availability makes accurate representation of some local dialects and languages an issue.

Looking to improve the situation, Apple is investigating ways to integrate location data with language modeling to create a hybrid system that can better understand a variety of tongues. The method is outlined in a patent filing published by the U.S. Patent and Trademark Office on Thursday, titled "Automatic input signal recognition using location based language modeling."

Apple proposes that a number of local language models can be constructed for a desired service area. A form of such a system is already in use with Siri's language selection, which allows users to choose from various models, like English (United States) and English (United Kingdom).

However, the method can have the opposite effect and further complicate system recognition. Apple explains:

That is, input signals that are not unique to a particular region may be improperly recognized as a local word sequence because the language model weights local word sequences more heavily. Additionally, such a solution only considers one geographic region, which can still produce inaccurate results if the location is close to the border of the geographic region and the input signal corresponds to a word sequence that is unique in the neighboring geographic region.

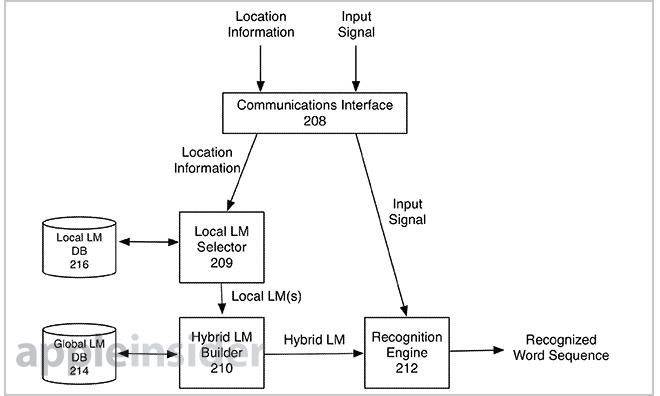

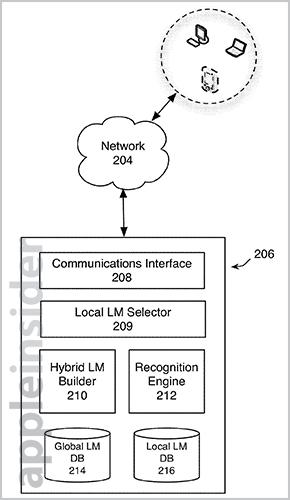

Apple's new invention hybridizes local language models by weighting them according to location and speech input, then merging them with other localized or global models. Global models capture general language properties and high-probability word strings commonly used by native speakers.

In some embodiments, the local language model is first identified by geography, which is governed by service location thresholds. This first model is merged with the global version of the language and compared against input words or phrases that are statistically more likely to occur in the specified region.

Location information can be used to pick out word sequences that have a low likelihood of occurrence globally but a higher likelihood in a certain location. The document offers the words "goat hill" as an example. That input may have a low probability of being spoken globally, in which case the system may determine the speaker is saying "good will." However, if geolocation is integrated, the system may recognize that a nearby store is called Goat Hill and determine that "goat hill" is the more likely word string.
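The "goat hill" example can be sketched in a few lines of Python. This is only an illustration: the filing does not publish probabilities or a merge formula, so the numbers, the linear interpolation rule, and the names `GLOBAL_LM`, `LOCAL_LM`, `hybrid_score`, and `recognize` are all assumptions made for this sketch.

```python
# Hypothetical probabilities for illustration only; none come from the filing.
GLOBAL_LM = {"good will": 0.010, "goat hill": 0.0001}
# Assumed local model for a region that contains a store named Goat Hill.
LOCAL_LM = {"good will": 0.008, "goat hill": 0.050}


def hybrid_score(phrase, local_weight):
    """Blend local and global probabilities by linear interpolation.

    Linear interpolation is an assumed merge rule, not the patent's method.
    """
    p_global = GLOBAL_LM.get(phrase, 0.0)
    p_local = LOCAL_LM.get(phrase, 0.0)
    return local_weight * p_local + (1.0 - local_weight) * p_global


def recognize(acoustic_scores, local_weight):
    """Return the candidate with the best acoustic score times hybrid score."""
    return max(
        acoustic_scores,
        key=lambda phrase: acoustic_scores[phrase] * hybrid_score(phrase, local_weight),
    )


# Both phrases sound alike, so the acoustic scores are assumed to be equal.
candidates = {"good will": 0.5, "goat hill": 0.5}

far_result = recognize(candidates, local_weight=0.0)   # no local model applied
near_result = recognize(candidates, local_weight=0.8)  # user is near the store

print(far_result)
print(near_result)
```

With no local weighting, the globally common phrase wins. Once the local model carries most of the weight, the same audio resolves to the nearby store name.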

Location data can be gathered via GPS, cell tower triangulation, and other similar methods. Alternatively, a user can manually enter the location into a supported device. Language assets include databases, recognition modules, a local language model selector, a hybrid language model builder, and a recognition engine.

Combining the location data with local language models involves a "centroid," or a predefined focal point for a given region. Examples of the so-called centroid can be an address, building, town hall, or even the geographic center of a city. When the thresholds surrounding centroids overlap, "tiebreaker policies" can be implemented to weight one local language model higher than another, creating the hybrid language model.
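One way to picture centroids, thresholds, and a tiebreaker policy is the rough Python sketch below. The filing says only that tiebreaker policies can weight one local model higher than another. The "closer centroid gets more weight" rule, the 30 km threshold radius, the approximate coordinates, and the names `REGIONS`, `haversine_km`, and `local_model_weights` are assumptions chosen for illustration.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two latitude/longitude points."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


# Hypothetical regions: (name, centroid latitude, centroid longitude, threshold radius in km).
# Coordinates are approximate and the radii are invented for this sketch.
REGIONS = [
    ("san_francisco", 37.7793, -122.4193, 30.0),
    ("oakland", 37.8044, -122.2712, 30.0),
    ("sacramento", 38.5816, -121.4944, 30.0),
]


def local_model_weights(lat, lon, regions=REGIONS):
    """Weight each local model whose threshold contains the given location.

    Assumed tiebreaker: when thresholds overlap, the closer centroid gets
    the larger share. Weights are normalized to sum to 1.
    """
    raw = {}
    for name, clat, clon, radius_km in regions:
        distance = haversine_km(lat, lon, clat, clon)
        if distance <= radius_km:
            raw[name] = 1.0 - distance / radius_km
    total = sum(raw.values())
    if total == 0:
        return {}  # outside every threshold (or exactly on an edge): no local model
    return {name: weight / total for name, weight in raw.items()}


# A point on the San Francisco waterfront falls inside two overlapping thresholds.
weights = local_model_weights(37.79, -122.39)
print(weights)
```

Here the location sits inside both the San Francisco and Oakland thresholds, so both local models contribute, with the nearer centroid weighted more heavily. Sacramento is out of range and is excluded.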

Illustration of overlapping regions and centroids.

While it is unknown if Apple will one day use the system in its iOS product line, current technology does allow for such a method to be implemented. Cellular data can be leveraged for database duties, while on-board sensors and processors would handle location gathering, language recognition and analysis, and hybrid model output.

Apple's location-based speech recognition patent application was first filed in 2012 and credits Hong M. Chen as its inventor.

Mikey Campbell

13 Comments

In a quick read it doesn't seem to be all that different from this already issued patent.

https://www.google.com/patents/US8219384

IMHO It's unfortunate that the USPTO allows a patent for something like this anyway.

Yes they are very similar in the abstract, but that's not what gets patented. It's the implementation and method (claims) that do and in this example they may be unique enough to allow for two separate patents.

And why shouldn't it be patented... If you work hard to come up with a way to carry this out why can't you protect it?

> [mjtomlin](/t/159497/apple-looks-to-geolocation-for-enhanced-speech-recognition#post_2395446) wrote: And why shouldn't it be patented... If you work hard to come up with a way to carry this out why can't you protect it?

He doesn't like it when Apple patents things because it means his best bud Google can't copy it without fear of being sued. Every patent Apple applies for, it's always 'oh they don't have it yet, we'll see if they get it, they shouldn't because it's obvious or oh, look someone else has a patent that looks the same', as if anybody cares one way or the other. If Google does it first, that's ok though because they are perfect in every way and have never sued anybody, not even through a subsidiary. You'll also notice this thread has nothing to do with Google - a certain someone apparently never brings up Google, Google, Google, Google, Google, Google, Google, Google unless it's explicitly brought up by someone else.

> [mjtomlin](/t/159497/apple-looks-to-geolocation-for-enhanced-speech-recognition#post_2395446) wrote: Yes they are very similar in the abstract, but that's not what gets patented. It's the implementation and method (claims) that do and in this example they may be unique enough to allow for two separate patents. And why shouldn't it be patented... If you work hard to come up with a way to carry this out why can't you protect it?

That's an entirely different and way too involved discussion for here. There's lots of rational arguments for treating software or mathematical algorithms differently than real property which has been patentable for hundreds of years. In a nutshell tho regarding *this specific patent application* there's so many similar patents for the same general idea and dating back as much as 20 years or more it hardly qualifies as **"inventive"** IMO. Just more clutter in the patent system.