Apple's work on Bluetooth Low Energy Audio, a new technology that streams digital sound directly to hearing aids and cochlear implants, lets people with hearing impairments communicate more easily and use their iPhone as a remote microphone through iOS 10's Live Listen feature.

AppleInsider previously noted Apple's work in developing AirPod-like streaming directly to hearing aid devices, including the Live Listen feature.

Writing for Wired, Steven Levy has further detailed the engineering work behind the feature, which streams audio over Bluetooth Low Energy using a new technology called Bluetooth LEA, or "Low Energy Audio."

BLE was developed for highly efficient data transfers to small devices with limited battery life, such as heart rate monitors. Apple adapted the protocol to stream audio directly from iOS devices to hearing aids and implants, which rely on small batteries.

"We chose Bluetooth LE technology because that was the lowest power radio we had in our phones," noted Sriram Hariharan, an Apple CoreBluetooth engineering manager, adding that his group "spent a lot of time tuning our solution to meet the requirements of the battery technology used in the hearing aids and cochlear implants."

In addition to enhancing its iOS accessibility features for users with hearing impairments, Apple also introduced Live Listen, a feature that uses an iPhone's mic to focus on conversations in loud environments.

Incremental accessibility enhancements

Apple first introduced MFi support for Bluetooth hearing aids in iOS 7 and iPhone 4s. Its latest software expands support for direct streaming of phone calls, FaceTime conversations, movies and other audio to supported hearing aids, without the need for a middleman device known as a "streamer."

Apple has worked with a number of hearing aid manufacturers to enable advanced Bluetooth streaming support, as detailed in an Apple support page.

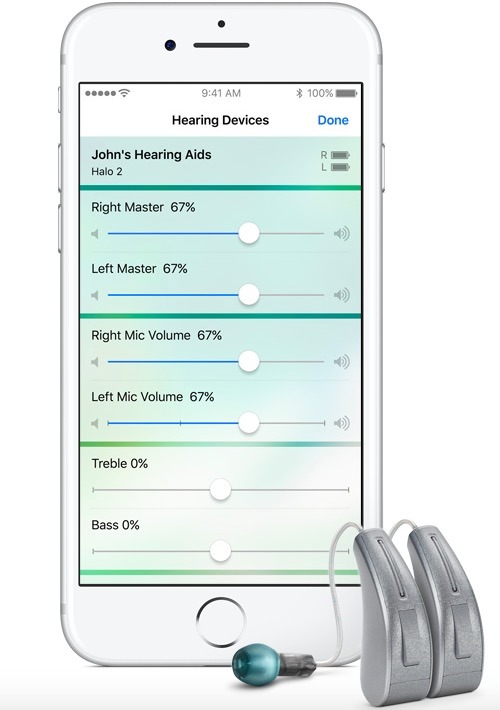

New iOS 10 hearing aid features include battery life readouts; independent bass, treble, and left and right volume controls; and support for audiologist-designed presets for environments such as concerts or restaurants. Using geolocation, devices can even recognize when the user walks into, say, a Starbucks, and adjust the hearing aids automatically. iOS also supports a "find my hearing aid" feature.

This enhanced, built-in support for hearing aids borrows some technology developed for AirPods, the company's new wireless headphones, which use Apple's proprietary W1 chip for effortless, flexible device pairing. It also relies on technology developed specifically to work around the fact that hearing aids run on smaller batteries than AirPods.

Apple's integrated bundling of an audio streamer for users with hearing impairments is similar to its earlier introduction of VoiceOver, the screen reader technology it brought to iPods, iOS devices, and Macs.

Because Apple builds such accessibility features into the OS at no extra cost, third-party developers can take full advantage of its screen reading and streaming technologies, knowing they will be available on every device. Previously, users with disabilities had to buy bolted-on solutions that required specialized support from app developers.

In addition to supporting audio originating on the phone, the new Live Listen feature (above) also allows users to relay focused audio picked up by the iPhone's mic, enabling clearer conversations in loud environments.

In an earlier report for CNET, Shara Tibken detailed how individuals are taking advantage of Apple's latest accessibility technologies to remain productive and erase barriers.

Tibken noted that Google doesn't offer similar streaming support built into Android. The company is hampered in deploying Apple's level of tight integration across Android devices because it has no control over the hardware features its partners choose to support in their own Android phones.

Levy's new report for Wired noted that Google "says that its accessibility team's hearing efforts have so far focused on captioning. Hearing aid support, the company says, is on the roadmap, but there's no public timeline for now."

Levy noted that users with cochlear implants can now take phone calls in a loud environment, something that is often impossible for people with normal hearing. With Live Listen, users with hearing aids can similarly focus on what they want to hear. That suggests the potential for future digital augmentation of hearing and other senses, using technology not only to enhance accessibility but to improve the human experience in new ways.

Daniel Eran Dilger


5 Comments

Now we just need a tiny blood sugar fuel cell to power an embedded device like a cochlear implant. Then we can think about embedding devices.

Listen Different

Retina Eardrum Audio. Great info. Thanks for this, DED. Now if only I could get my Dad on board with this technology...