Apple continues to devise ways to secure its devices while keeping them as easy as possible to use. One concept involves unlocking an iPhone or iPad and performing a Siri request, but only if the voice it hears matches that of its owner.

Granted by the U.S. Patent and Trademark Office on Tuesday, the patent for "Device access using voice authentication" describes a relatively straightforward idea: analyzing the voice behind a spoken request and determining whether it belongs to the device's main registered user.

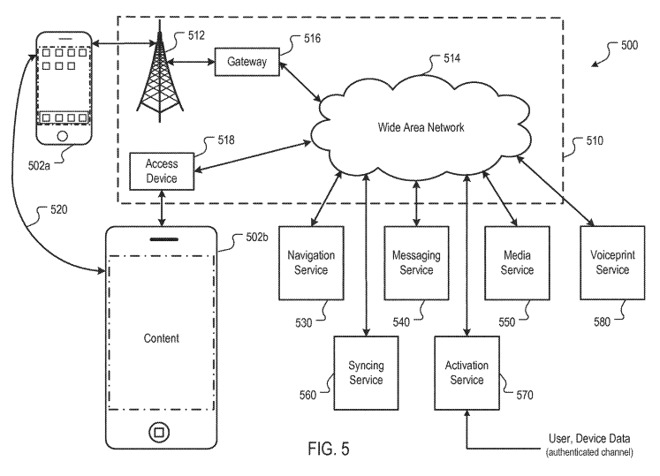

According to the patent, a device capable of receiving speech input could create a "voice print" for its owner from multiple samples of their speech. This text-independent voice print would capture characteristics of the user's voice to build a model, either on the device or via an external service, and that model would serve as the point of comparison for future checks.

The voice print could use "statistical models of the characteristics" of a user's pronunciation of phonemes to create the signature. In theory, these could include characteristics such as voicing, silences, stop bursts, nasal or liquid interference, frication, and other elements.
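The patent does not publish a model, but the idea of a voice print built from "statistical models of the characteristics" of several speech samples can be illustrated with a minimal sketch. It assumes each utterance has already been reduced to per-frame numeric feature vectors (a hypothetical stand-in for measurements such as voicing or frication) and fits a simple diagonal Gaussian; the names `VoicePrint`, `enroll`, and `avg_log_likelihood` are illustrative only.

```python
import math
from dataclasses import dataclass


@dataclass
class VoicePrint:
    """Hypothetical voice print: a diagonal Gaussian over per-frame feature vectors."""
    means: list[float]
    variances: list[float]


def enroll(samples: list[list[list[float]]], floor: float = 1e-3) -> VoicePrint:
    """Pool frames from several enrollment utterances and fit per-dimension statistics."""
    frames = [frame for utterance in samples for frame in utterance]
    if not frames:
        raise ValueError("need at least one enrollment frame")
    dims = len(frames[0])
    n = len(frames)
    means = [sum(f[d] for f in frames) / n for d in range(dims)]
    variances = [
        # A variance floor keeps the likelihood finite when a feature never varies.
        max(sum((f[d] - means[d]) ** 2 for f in frames) / n, floor)
        for d in range(dims)
    ]
    return VoicePrint(means, variances)


def avg_log_likelihood(voice_print: VoicePrint, utterance: list[list[float]]) -> float:
    """Mean per-frame log-likelihood of an utterance under the voice print."""
    total = 0.0
    for frame in utterance:
        for x, mu, var in zip(frame, voice_print.means, voice_print.variances):
            total += -0.5 * (math.log(2 * math.pi * var) + (x - mu) ** 2 / var)
    return total / len(utterance)
```

Because the statistics are pooled over whatever the owner happened to say, nothing here depends on a fixed passphrase, which loosely mirrors the text-independent approach the patent describes. Production speaker-verification systems use far richer models than this.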

Because it is text-independent, the voice print could in theory identify the speaker from normal speech alone, without requiring a specific passphrase. This would be better suited to voice-based authentication in public, where users may not wish to say aloud a passphrase they would rather keep private.

When the device receives a vocal command, it effectively creates a model from the uttered phrase and compares it against the established voice print. If the similarity meets a certain threshold, the device could unlock and then carry out the spoken command.
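The threshold check can be sketched as follows, assuming both the utterance model and the stored voice print are fixed-length vectors. Cosine similarity is a swapped-in metric chosen for illustration, and the 0.8 threshold is a hypothetical value; the patent only refers to "a certain threshold." The function `handle_voice_command` is likewise a made-up name.

```python
import math
from typing import Callable, Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Similarity in [-1, 1]; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def handle_voice_command(
    utterance_model: list[float],
    voice_print: list[float],
    command: Callable[[], str],
    threshold: float = 0.8,  # hypothetical value, not taken from the patent
) -> tuple[bool, Optional[str]]:
    """Unlock and run the command only if the utterance matches the voice print.

    Returns (unlocked, result); result is None when the match fails.
    """
    if cosine_similarity(utterance_model, voice_print) >= threshold:
        return True, command()  # unlock first, then carry out the request
    return False, None
```

For example, `handle_voice_command([1.0, 0.0], [1.0, 0.1], lambda: "done")` unlocks and runs the command, while an utterance pointing in an unrelated direction leaves the device locked.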

The patent also suggests that a voice print match need not unlock the phone entirely, but could still allow the spoken request to be performed. For example, Siri could provide a verbal and text-based response to a question about a general topic if it detects the user's voice, but would refrain from giving a response involving user data unless the device had already been unlocked by other means.

A user could even elect to let Siri access specific functions that use personal data while the device is locked, but only when the user's voice is recognized. General, non-user-specific Siri queries would remain available to other people, without giving them access to that data.

If the device cannot confirm the user's identity from speech alone, it would offer secondary authentication methods, such as other types of biometric security or a passcode.
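One way to read the locked-state rules described above is as a small decision function. The sketch below is an interpretation, not the patent's logic: general queries are answered for anyone, personal-data requests are answered if the device is already unlocked or if the owner's voice matches a function they opted in to, and anything else falls back to secondary authentication. The class, enum, and function names (such as `"read_messages"`) are hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum


class Outcome(Enum):
    ANSWER = "answer"                          # Siri fulfils the request
    REQUIRE_SECONDARY_AUTH = "secondary_auth"  # ask for a passcode or other biometric


@dataclass
class LockedSiriPolicy:
    # Functions the owner has opted in to using while locked, if their voice matches.
    voice_unlocked_functions: set[str] = field(default_factory=set)

    def decide(
        self,
        function: str,
        uses_personal_data: bool,
        device_unlocked: bool,
        voice_matches: bool,
    ) -> Outcome:
        # General, non-user-specific queries are open to anyone.
        if not uses_personal_data or device_unlocked:
            return Outcome.ANSWER
        # Personal data while locked: only for opted-in functions and a matching voice.
        if voice_matches and function in self.voice_unlocked_functions:
            return Outcome.ANSWER
        # Speech alone was not enough, so fall back to another method.
        return Outcome.REQUIRE_SECONDARY_AUTH
```

With `LockedSiriPolicy({"read_messages"})`, a weather question is answered for any speaker, reading messages is answered only for the owner's voice, and a calendar request from a locked device still asks for a passcode or other biometric.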

Apple submits a large number of patent applications every week, but not all ideas make it into commercial products. Considering Apple's existing work on biometric security, including Touch ID and Face ID, an extension into voice authentication makes sense, especially in the context of Siri on the iPhone.

This patent is one of a number Apple has filed in the field, and it certainly isn't the first to surface from the company. For example, a 2011 patent application for "User Profiling for Voice Input Processing" suggests virtually the same idea as the newly-granted patent.

In August, it was revealed Apple was looking into using voice prints for a slightly different purpose: differentiating between multiple users.

Aside from Siri on iPhone, another area where this could be useful is Siri on HomePod, a version of the digital assistant that can only provide verbal responses, on hardware without any visual or physical biometric security. Multi-user support has been touted for the smart speaker before, with voice recognition enabling personalized query results or even playback of an individual's private playlist.