Future iPhones may identify users by the sound of their voice

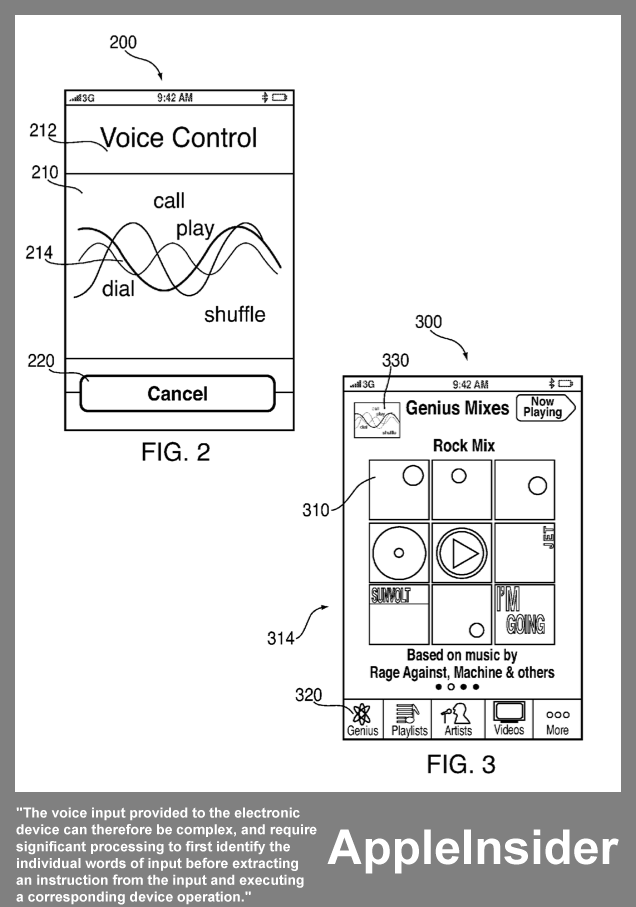

The concept was revealed this week in a new patent application published by the U.S. Patent and Trademark Office and discovered by AppleInsider. Entitled "User Profiling for Voice Input Processing," it describes a system that would identify individual users when they speak aloud.

Apple's application notes that voice control already exists in some forms on a number of portable devices. These systems are accompanied by word libraries, which offer a range of options for users to speak aloud and interact with the device.

But these libraries can grow so large that they slow the processing of voice inputs. Long voice inputs in particular can be time-consuming for users and taxing on a device's resources.

Apple proposes to resolve these issues with a system that would identify users by the sound of their voice, and identify corresponding instructions based on that user's identity. By identifying the user of a device, an iPhone would be able to allow that user to more efficiently navigate handsfree and accomplish tasks.

The application includes examples of highly specific voice commands that a complex system might be able to interpret. Saying aloud, "call John's cell phone," includes the keyword "call," as well as the variables "John" and "cell phone," for example.
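As a rough illustration of the keyword-plus-variables idea described above, the following minimal sketch splits a transcribed command into a keyword and its variables. It assumes the speech has already been converted to text; the `KEYWORDS` table and the `parse_command` function are hypothetical names invented for this example and do not come from Apple's application.

```python
import re

# Hypothetical keyword table; the patent application does not list one.
KEYWORDS = {"call", "play", "find"}


def parse_command(text):
    """Split a transcribed command into a keyword and its variables.

    Returns None when the first word is not a known keyword.
    """
    words = text.strip().rstrip(".").split()
    if not words or words[0].lower() not in KEYWORDS:
        return None

    keyword = words[0].lower()
    rest = " ".join(words[1:])

    # A possessive such as "John's cell phone" yields two variables:
    # the owner ("John") and the target ("cell phone").
    match = re.match(r"(?P<owner>.+?)'s\s+(?P<target>.+)$", rest)
    if match:
        variables = [match.group("owner"), match.group("target")]
    else:
        variables = [rest] if rest else []

    return {"keyword": keyword, "variables": variables}


result = parse_command("call John's cell phone")
```

Here `result` holds the keyword "call" along with the variables "John" and "cell phone". A real system would face the much harder problem of recognizing the words in audio first, which is the cost the application is concerned with.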

In a more detailed example, a lengthy command is cited as a possibility: "Find my most played song with a 4-star rating and create a Genius playlist using it as a seed." Also included is natural language voice input, with the command: "Pick a good song to add to a party mix."

"The voice input provided to the electronic device can therefore be complex, and require significant processing to first identify the individual words of input before extracting an instruction from the input and executing a corresponding device operation," the application reads.

To simplify this, an iPhone would have words that relate specifically to the user of a device. For example, certain media or contacts could be made specific to a particular user of a device, allowing two individuals to share an iPhone or iPad with distinct personal settings and content.
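One way to picture this narrowing of the word library is sketched below. It assumes the speaker has already been identified by some separate voice-matching step, which is not shown; `UserProfile`, `candidate_words`, and the sample data are all hypothetical and are not taken from the application.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    """Hypothetical per-user data that a shared device might keep."""
    name: str
    contacts: set = field(default_factory=set)
    playlists: set = field(default_factory=set)

    def vocabulary(self):
        # Words that are only meaningful for this particular user.
        return {w.lower() for w in self.contacts | self.playlists}


def candidate_words(profiles, speaker_id, shared_words):
    """Return the words the recognizer should consider for this speaker.

    Unknown speakers get only the shared command words.
    """
    profile = profiles.get(speaker_id)
    if profile is None:
        return set(shared_words)
    return set(shared_words) | profile.vocabulary()


profiles = {
    "alice": UserProfile("Alice", contacts={"John"}, playlists={"Party Mix"}),
    "bob": UserProfile("Bob", contacts={"Maria"}),
}
shared = {"call", "play"}

alice_words = candidate_words(profiles, "alice", shared)
guest_words = candidate_words(profiles, "guest", shared)
```

In this sketch, Alice's commands are matched against the shared words plus her own contacts and playlists, while Bob's contact "Maria" never enters her search space. That smaller search space is the efficiency gain the application describes.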

In recognizing a user's voice, the system could also become dynamically tailored to their needs and interests. In one example, a user's musical preferences would be tracked, and simply asking the system aloud to recommend a song would identify the user and their interests.

The proposed invention made public this week was first filed in February of 2010. It is credited to Allen P. Haughay.

Another voice-related application discovered by AppleInsider in July described a robust handsfree system that could be more responsive and efficient than current options. That method involves cutting down on the verbosity, or "wordiness," of audio feedback systems by dynamically shortening or removing redundant information that might be audibly presented to the user.

Both The Wall Street Journal and The New York Times reported earlier this year that Apple was working on improved voice navigation in the next major update to iOS, the mobile operating system that powers the iPhone and iPad. And a later report claimed that voice commands would be "deeply integrated" into iOS 5.

The groundwork was laid for Apple's anticipated voice command overhaul when Apple acquired Siri, an iPhone personal assistant application heavily dependent on voice commands, in April of 2010. With Siri, users can issue tasks to their iPhone using complete sentences, such as "What's happening this weekend around here?"

In June, it was claimed that the rumored voice features weren't ready to be shown off at Apple's annual Worldwide Developers Conference. However, it was suggested that they may be unveiled this fall as part of the anticipated fifth-generation iPhone.

Further supporting this, evidence of Nuance speech recognition technology has been discovered in developer beta builds of iOS 5, where the features remain hidden within the software.

Neil Hughes

14 Comments

Stuff like this, if not necessarily this particular tweak, demonstrates that Apple has years of product innovations ahead of them. The team in place should be able to handle this just fine.

Yes, they still need another Steve Jobs. But what company doesn't?

Well puberty done passed so no worry about voice change but hope they have fail-safes for hoarse throat and Laryngitis...

Who shares their iPhone with multiple users? IMO, limited appeal.

Yes, they still need another Steve Jobs. But what company doesn't?

Given that he is Chairman of the Board and likely also a Product Design Consultant, they still have THE Steve Jobs. Why do they need another one?

Also, is it legal to post an article about anything that isn't about Jobs resigning? I thought you had to wait 24 hours before talking about any other subject.

Who shares their iPhone with multiple users? IMO, limited appeal.

Good point.

I suspect the rumors of this feature aren't exactly reflecting truth. My guess is that the tech isn't focused on telling one voice apart from another to share a device but more in the realm of better voice identification so that the phone can recognize what you are saying. I have a bit of an accent, being a Kiwi, and sometimes the current iPhone doesn't hear what I am saying correctly when I use Voice Control because of the way I pronounce certain sounds. Better voice recognition software would help with that.

Who shares their iPhone with multiple users? IMO, limited appeal.

Limited appeal?!

I'd absolutely love to have my iPhone be able to say, "Hey, you don't sound like my owner. I'm not going to let you make any calls. In fact, I'm going to report my location to the police because my owner told me to do that if someone else tries to use me."

Who the heck WOULDN'T want that?!