Apple has been rumored to be working on some kind of Apple VR headset for years, and it's finally here. The Apple Vision Pro isn't quite VR, though. Instead, it is a mixed reality headset with AR and VR applications.

Apple refers to the headset and its operating system, visionOS, as spatial computing. They blend software with the world around the user via video passthrough.

Apple Vision Pro is a headset that obscures the user's vision, not a pair of transparent goggles or glasses. But high-quality cameras pass 3D video of the environment to the user through a pair of pixel-dense displays.

It is seen as the first step into an entirely new kind of computing that could lead to the long-rumored Apple Glass AR glasses. Even this headset, with its "Pro" moniker, is likely just a stepping stone to a more budget-friendly Apple Vision model down the line.

Next Apple Vision

Rumors are already building about future models. Apple could be preparing four new Apple Vision headsets, some focused on lowering the price, but they may not be released until late 2025 or 2026.

Apple Vision Pro 2 would iterate on the existing hardware while some speculate an "Air" or "SE" model could make things lower-priced without sacrificing too much technology. Some hope for a more lightweight model without the glass front display.

Apple announced visionOS 2 during WWDC 2024 and it had modest updates that focus on quality-of-life changes like a new gesture to summon Home View and Control Center. Other changes include bringing more ecosystem features to the platform like Safari Profiles and increasing hand tracking to 90Hz.

Leaks suggest Apple is developing the next generation of Apple Vision in tandem with the smart AR glasses we've dubbed Apple Glass. No release date has been shared.

Meta Orion is a pair of 2024 prototype glasses that use projectors to show UI over the real world on transparent lenses. Apple Glass is likely to use transparent OLED or similar displays rather than projectors.

New rumors suggest Apple will release the Apple Vision Pro 2 before releasing a lower-priced model. This shift in strategy seems to stem from difficulty getting the lower-priced model into the right price range without sacrificing functionality.

Apple Vision Pro design

The ski goggle-like design that's been rumored since early 2021 ended up being the final product. A curved piece of glass acts as a lens for the multiple cameras, while the exterior case is aluminum.

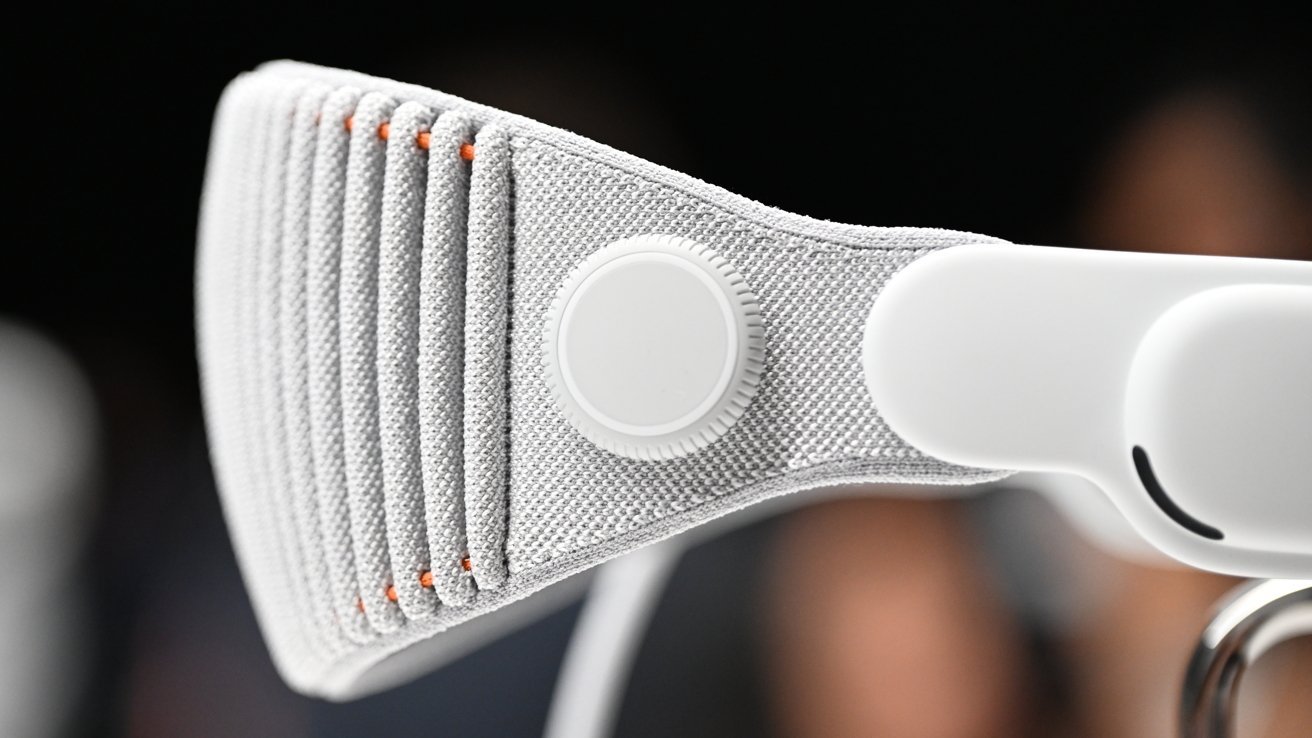

The Solo Knit Band is 3D-knitted as a single piece, easily adjusted with a fit dial, and attached using a pull-tab for easy removal. It provides a snug fit, but leaves most of the headset weight on the wearer's face.

The headset is so form-fitting that users can't wear glasses, unlike PlayStation VR 2. Apple partnered with Zeiss to offer prescription or reader lenses, which are available as a separate $149 or $99 purchase.

The sides of Apple Vision Pro house small speakers called Audio Pods that point spatial audio into the user's ears. The top has a button for controlling certain features like the 3D camera, and the Digital Crown on the right acts as a Home Button and controls immersion.

A power cable attaches to the side via a proprietary mechanism. It is attached to an external battery pack that enables around 2 hours of portable use, but users can attach the battery to power if needed.

If the battery dies, the entire headset shuts down, as no backup power is available. When Apple Vision Pro is shut down, window placement is forgotten and has to be reset.

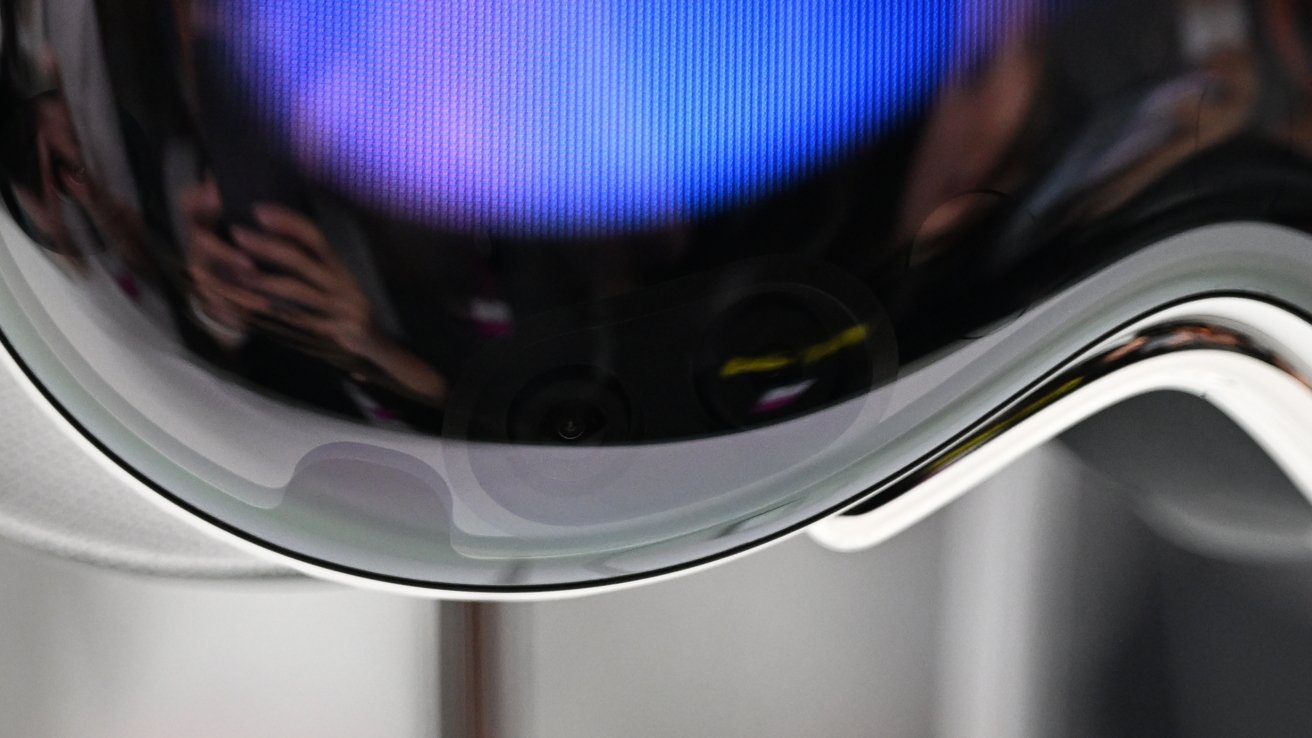

The front glass acts as an external display for a feature called eyeSight. It lets users indicate whether they are paying attention via an admittedly odd effect.

When the user is fully immersed and can't see the outside world, a colorful waveform appears. If passthrough is active, the user's eyes are visible through a lenticular display. Nothing is shown on the external display when no one is looking at the headset.

eyeSight was designed to ensure that users could feel confident in using the headset in any environment. However, it seems some social hurdles will have to be overcome before such technology is commonplace during social interactions.

Since eyeSight relies on a user's Persona, it has the same uncanny valley effect. Personas are still in beta, so odd bugs appear that make a user's eyes look shut or distorted.

Apple Vision Pro technology

Apple's headset is a standalone device running on an M2 processor and R1 co-processor. The dual-chip design enables spatial experiences — M2 processing visionOS and graphics, while R1 is dedicated to processing camera input, sensors, and microphones.

A reference found in Xcode suggests the Apple Vision Pro has 16GB of RAM, which is the same amount in developer kits. Customers can configure their devices with 256GB, 512GB, or 1TB of storage.

There is a 12-millisecond lag from camera to display, which users shouldn't be able to notice. The passthrough works best in bright environments, but it is done well enough that depth perception isn't an issue.
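To put the 12-millisecond figure in context, it helps to compare it with how long a single display refresh lasts. The sketch below is only illustrative arithmetic: it uses the 90Hz, 96Hz, and 100Hz refresh rates listed in the review's Display section, and the function names are made up for this example rather than taken from any Apple API.

```python
# Camera-to-display lag quoted in the article, in milliseconds.
PASSTHROUGH_LAG_MS = 12.0


def frame_time_ms(refresh_hz: float) -> float:
    """Duration of a single display refresh, in milliseconds."""
    return 1000.0 / refresh_hz


def lag_in_frames(lag_ms: float, refresh_hz: float) -> float:
    """Express a latency as a number of display refreshes (hypothetical helper)."""
    return lag_ms / frame_time_ms(refresh_hz)


# Refresh rates taken from the Display section of the review.
for hz in (90, 96, 100):
    print(
        f"{hz}Hz: one frame lasts {frame_time_ms(hz):.2f} ms, "
        f"so 12 ms is about {lag_in_frames(PASSTHROUGH_LAG_MS, hz):.2f} frames"
    )
```

Under these assumptions, the quoted lag works out to a little over one refresh of the display, which fits the claim that it is hard to notice.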

An eye-tracking system of LEDs and infrared cameras enables precise controls just by looking. Users can glance at a text input field and then speak to fill it in.

There are 12 cameras, five sensors, and six microphones in the aluminum and glass enclosure. This all adds up to a heavier-than-average product when compared to competing VR headsets.

Two high-resolution cameras transmit over one billion pixels per second to the displays. Each display has a resolution of 3,660 by 3,200 pixels, or about 3,380 pixels per inch.

The display system uses a micro-OLED backplane with pixels seven and a half microns wide, allowing it to fit about 50 pixels in the same space as a single iPhone pixel. A custom three-element lens was designed to magnify the screen and make it wrap around as wide as possible for the user's vision.
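The display figures above can be sanity-checked with some quick arithmetic. This is a rough sketch rather than an official calculation: it treats the 7.5-micron figure as the pixel pitch, and it assumes an iPhone display density of 460 pixels per inch, which is not a number from this article.

```python
MICRONS_PER_INCH = 25_400  # 1 inch = 25.4 mm = 25,400 microns

# Figures quoted in the article.
PIXEL_PITCH_MICRONS = 7.5
DISPLAY_WIDTH_PX = 3_660
DISPLAY_HEIGHT_PX = 3_200

# Assumption for comparison only: a 460 ppi iPhone display.
ASSUMED_IPHONE_PPI = 460


def pixels_per_inch(pitch_microns: float) -> float:
    """Linear pixel density implied by a given pixel pitch."""
    return MICRONS_PER_INCH / pitch_microns


ppi = pixels_per_inch(PIXEL_PITCH_MICRONS)
pixels_per_display = DISPLAY_WIDTH_PX * DISPLAY_HEIGHT_PX
pixels_both_eyes = 2 * pixels_per_display

# Area ratio: how many headset pixels fit in the footprint of one iPhone pixel.
pixels_per_iphone_pixel = (ppi / ASSUMED_IPHONE_PPI) ** 2

print(f"Density: about {ppi:,.0f} ppi")
print(f"Pixels per display: {pixels_per_display:,}")
print(f"Pixels across both displays: {pixels_both_eyes:,}")
print(f"Headset pixels per assumed iPhone pixel: about {pixels_per_iphone_pixel:.0f}")
```

With those assumptions, the density lands close to the quoted figure of about 3,380 pixels per inch, and the area ratio comes out in the neighborhood of the "about 50 pixels" comparison.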

LiDAR and a TrueDepth camera work together to create a fused 3D map of the environment. Infrared flood illuminators work with other sensors to enhance hand tracking in low light.

There is a fan keeping the processors cool and vent holes in the top and bottom of the headset. While certain spots on the Apple Vision Pro exterior might feel warm during use, it's never hot on the user's face thanks to separation by the Light Seal.

Since Apple Vision Pro has multiple cameras, it can capture "spatial" 3D content. Recorded spatial video can be viewed in the Photos app on an Apple Vision Pro headset. Over 150 3D movies were available within the Apple TV app at launch.

Apple added basic 3D video recording to the iPhone 15 Pro with iOS 17. It can capture 1080p spatial video at 30 frames per second that can be played back within the headset natively.

Spatial video captured on Apple Vision Pro has more depth, but spatial video captured on iPhone is crisper. Either way, future hardware will make today's videos look terrible by comparison.

Spatial Personas

One of the first post-release features to get added to Apple Vision Pro is called Spatial Personas. The user's Persona is no longer locked inside of a 3D window during FaceTime calls and SharePlay, enabling more immersive interactions.

The Spatial Persona takes on a ghost-like appearance since Apple Vision Pro has no awareness of what the back of a person looks like. Only the user's face, a bit of hair, and upper chest are visible, with detached hands floating around.

The effect is uncanny at first, but becomes more natural as the oddly designed Personas play a psychological trick to convince you you're sharing a space with the person. The combination of 3D, spatial audio, and the ability to look each other in the eye creates quite the convincing effect.

Optic ID, privacy, and security

For security, Apple introduced Optic ID, an iris-scanning system. Much like Face ID or Touch ID, it is used to authenticate the user, authorize Apple Pay purchases, and handle other security-related tasks.

Apple explains that Optic ID uses invisible LED light sources to analyze the iris, which is then compared to the enrolled data stored in the Secure Enclave. The process is similar to the one used for Face ID and Touch ID. The Optic ID data is fully encrypted, not provided to apps, and never leaves the device itself.

Continuing the privacy theme, the areas where the user looks on the display and eye tracking data aren't shared with Apple or websites. Instead, data from sensors and cameras are processed at a system level so that apps don't necessarily need to "see" the user or their surroundings to provide spatial experiences.

Apple VR, AR, or MR

Apple avoided calling any experience offered by Apple Vision Pro a conventional name like "Apple VR." Instead, it stuck to its own marketing terms like Spatial Computing.

There has been some contention on what to call this headset. Some refer to it as Apple VR since users put on a headset and are shown content. Others call it Apple AR with options for full immersion thanks to the Digital Crown.

Even the term Mixed Reality (MR) doesn't quite fit. Even if it is just marketing, Spatial Computing describes the experience well.

Think of it this way — Oculus and PlayStation VR are easily classified as VR headsets since virtual reality experiences are their primary function. For Apple Vision Pro, VR is just a feature, not the entire purpose.

During the keynote and developer sessions, Apple used in-house marketing terms to refer to each experience. It calls 2D apps "windows," 3D objects "volumes," and fully immersive VR "spaces."

visionOS

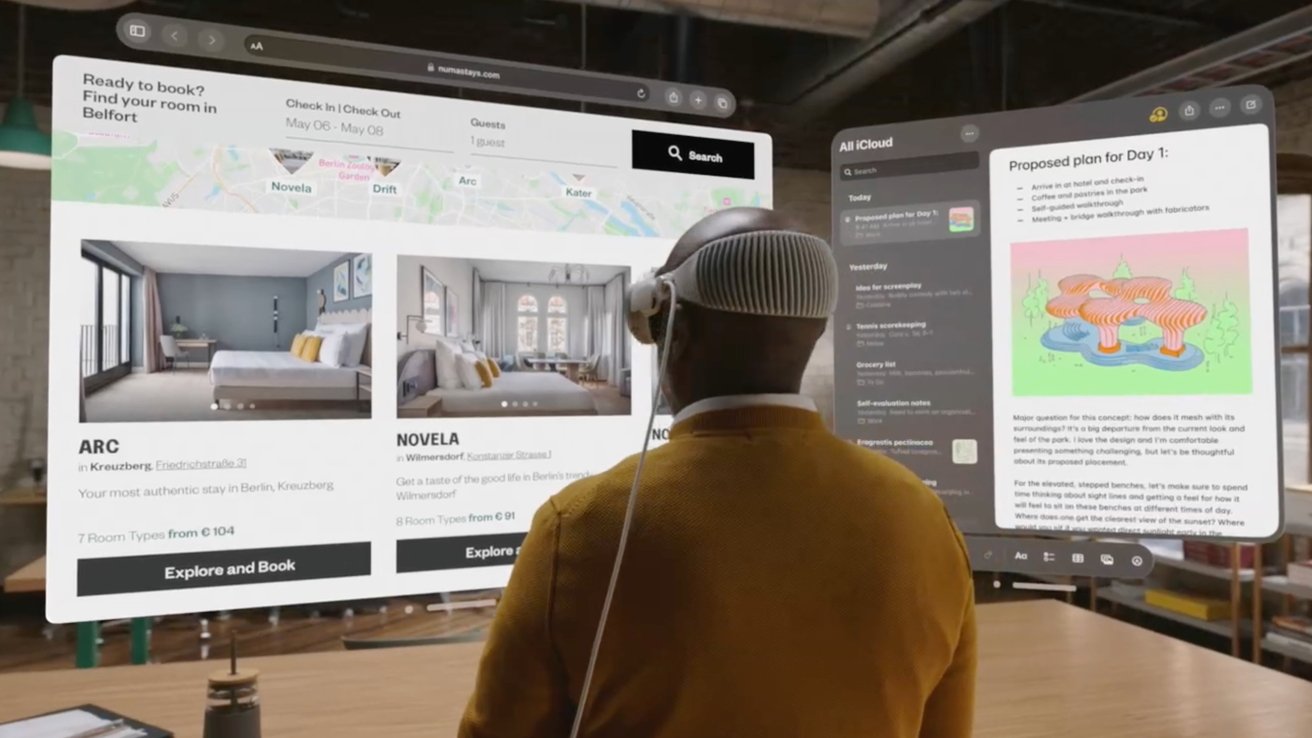

The headset runs visionOS, and apps from iPhone and iPad can operate on it with little to no developer intervention. Compatible apps shown in the 3D space are still 2D windows that constrain content within that space.

Native apps take on 3D effects, and developers can add 3D objects called ornaments around a window to enhance interactions. Menus and controls appear to pop in front of or beside the app.

Objects created in the USDZ format can be pulled into a space, but it seems developers will need to tool unique experiences for visionOS using specific frameworks. Apps previously built with ARKit will show up as a 2D window.

Full Apple VR experiences weren't the focal point of the keynote, but they do exist. Apple plans to film multiple 360-degree videos for users to become immersed in, not to mention third-party VR apps.

The visionOS SDK was provided to developers in late June 2023. It included a simulator to interact with software in a testing environment, but full on-device testing wasn't possible until hardware kits and sessions arrived in July.

Many developers opted out of including their existing iPad app in the visionOS app store at launch. The lack of access to hardware to test the apps on made it difficult to know if the apps would be usable or lead to customer complaints.

Only about 600 native applications were available at launch on February 2, 2024. That number is increasing rapidly as developers finally get their hands on the hardware.

Apple revealed visionOS 2 during WWDC 2024 and it contained a few quality-of-life updates. Users can convert any photo to 3D, new gestures make navigation easier, and developers have more APIs.

The new display feature lets users view the virtual Mac display in normal, wide, and ultra wide sizes. The screen can literally wrap around the user with a high-resolution view that takes advantage of foveated rendering.

Spatial Computing competition

Apple may have only just announced its Spatial Computing platform, but that doesn't mean it's the only one on the market. Competitors have been exploring AR and other extended-reality solutions for over a decade.

Google has abandoned Glass, and Microsoft has moved on from HoloLens, but other options still exist. The Xreal Air glasses are among the few products attempting to provide AR today, despite the limited technology in play at the consumer level.

Apple Vision Pro review

Apple Vision Pro is Apple's first spatial computer, so that makes reviewing it a significant challenge. While the device has been around for a while now, not enough has changed to warrant revisiting our initial views from the early review and one month review.

After a year, it is clear that Apple needs to push harder for more native apps and content on Apple Vision Pro.

Design

The first thing to understand about Apple Vision Pro is that there's very little on the market it can be compared to directly, if anything. It runs a spatial operating system called visionOS, it is a wearable computer meant for more than just gaming, and it doesn't have physical controllers.

Apple Vision Pro is on the heavy end of the spectrum when compared to other headsets. The aluminum and glass enclosure looks and feels more premium than products like the Oculus or PSVR that rely on plastic construction.

There are two band options, the Solo Knit Band and Dual Loop Band. Which one is more comfortable will depend entirely on the user, but many seem to prefer the Dual Loop Band.

The Light Seal, cushion, and band are all sized during the order process. Apple Vision Pro is precisely fit to the user, and incorrectly sized components can lead to discomfort.

There is an external battery pack that offers a two-hour battery life. A power cable can be attached to the battery to keep Vision Pro powered indefinitely.

Apple Vision Pro weighs between 21.2 and 22.9 ounces, depending on the paired accessories. The battery pack weighs 12.45 ounces.
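For readers who think in metric units, the weights above convert as follows. This is a small conversion sketch using the standard ounce-to-gram factor, and the helper name is made up for this example.

```python
GRAMS_PER_OUNCE = 28.349523125  # avoirdupois ounce

# Weights quoted in the article, in ounces.
HEADSET_MIN_OZ = 21.2
HEADSET_MAX_OZ = 22.9
BATTERY_OZ = 12.45


def ounces_to_grams(ounces: float) -> float:
    """Convert avoirdupois ounces to grams (hypothetical helper)."""
    return ounces * GRAMS_PER_OUNCE


print(f"Headset, lightest configuration: {ounces_to_grams(HEADSET_MIN_OZ):.0f} g")
print(f"Headset, heaviest configuration: {ounces_to_grams(HEADSET_MAX_OZ):.0f} g")
print(f"Battery pack: {ounces_to_grams(BATTERY_OZ):.0f} g")
```

That puts the headset at roughly 600 to 650 grams on the head, with the battery pack adding about 350 grams carried elsewhere.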

Display

Perhaps the most impressive part of Apple Vision Pro is the dual micro-OLED displays. They provide a 3,660 pixel by 3,200 pixel resolution, which is nearly 4K per eye.

The display can refresh between 90Hz, 96Hz, and 100Hz. Videos that play at 30fps and 24fps are able to play at their native frame rates to prevent judder.
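The reason multiple refresh rates help with judder is that each video frame can be held on screen for a whole number of refreshes. The sketch below illustrates that idea under simple assumptions: it only checks for integer multiples, it assumes whole-number frame rates, and it is not a description of how visionOS actually chooses a refresh rate.

```python
# Refresh rates quoted in the article, in Hz.
REFRESH_RATES_HZ = (90, 96, 100)


def judder_free_rates(content_fps: int, refresh_rates=REFRESH_RATES_HZ) -> list[int]:
    """Return the refresh rates that are an exact multiple of the content frame rate.

    When the refresh rate is an integer multiple of the frame rate, every
    video frame is shown for the same number of refreshes, so motion stays even.
    """
    return [hz for hz in refresh_rates if hz % content_fps == 0]


for fps in (24, 30):
    matches = judder_free_rates(fps)
    for hz in matches:
        print(f"{fps}fps video at {hz}Hz: each frame is shown for {hz // fps} refreshes")
```

By this logic, 24fps film content lines up with 96Hz and 30fps video lines up with 90Hz. The same check suggests 100Hz would suit 25fps content, though the article doesn't say that is its purpose.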

Zeiss optical inserts are available for those who require vision correction. With or without the additional inserts, expect a slight glare when viewing high-contrast content.

The external cameras and sensors work with the R1 to recreate the physical world as a virtually lag-free 3D rendering inside the headset. The resulting image is a slightly dimmer representation of the real world, but it is done well enough that the passthrough is quite convincing.

Unlike other virtual reality headset options on the market, Apple prioritized visual fidelity when using passthrough. It also provided a high-density display capable of producing incredible immersive content in a 3D space.

Features

Apple Vision Pro has a varied feature set similar to an iPad or Mac. It can perform work and productivity tasks, be an entertainment device, or a communication tool. The most obvious difference is the interface used to get those tasks done.

App windows float about in a virtual space, either a representation of the room you're in or a spatial environment. Native apps have 3D UI elements and are easier to use with look and tap gestures, while compatible apps from iPad are not optimized, but work well with a Magic Trackpad.

Watching video is immersive even if it is just a basic 2D YouTube channel. The immersion increases with 3D movies and the most incredible experiences can be found with Apple Immersive Video.

The biggest issue with Apple Vision Pro in the months since launch is a lack of immersive content. This special video type is new and Apple seems to be the only company able to produce it at the moment, and the rollout has been slow, if not non-existent.

The immersive content and 3D video can be found in the Apple TV app, Disney+, and other apps. Apple Arcade even has a selection of spatial games.

Audio playback from Apple Vision Pro works great with the dual-driver Audio Pods. However, for even better audio and bass, AirPods Pro 2 with USB-C are the way to go.

Another powerful feature of Apple Vision Pro is the Mac Virtual Display. It allows the user to bring in a 4K display from their Mac and use applications in the virtual window.

A spatial computing start

Apple Vision Pro is in its infancy and until it launches globally, there will likely be many popular apps missing from the native App Store. It is not a device for everyone, as the price alone makes clear.

That isn't to say it is a failure. It is an excellent first-generation product that is good enough and represents an exciting future for spatial computing.

Apple is expected to reveal visionOS 2.0 during WWDC in June. That update may sand off some of the rough edges in this nascent platform.

Using Apple Vision Pro (pre-release)

Apple is providing developer labs and kits to help with the pre-release development of apps for the Spatial Computing platform. This has allowed some first-hand experience beyond Apple's initial WWDC announcement to make it out to the public.

Overall, those who have interacted with the headset come away with similar ideas and feelings. This is a first-generation product that is entering a crowded space but also stands apart as a mix of AR and VR with high-end technology making things feel effortless.

Users familiar with competitors' headsets say that Apple Vision Pro has a wider and taller field of view. Certain lighting environments, like super bright rooms or dim areas, show the limitations of the video feed, but it isn't distracting.

Eye tracking and gesture controls seem to be excellent, even in pre-release demos. These systems feel well thought out and executed and are missing some of the usual growing pains new interaction paradigms tend to have.

Dedicated apps seem to work well and blend in with the environment, giving enterprise developers an excellent option for job or maintenance training. And while Apple says iPad apps can be ported with little effort, developers will need to give some attention to their apps to ensure legibility and controls translate to the platform.

Weight wasn't an issue, though after over an hour of constant use, fatigue did start to set in. Eye strain didn't seem to be an issue, given the quality of the displays.

Apple Vision Pro release date and price

Apple tends to announce products shortly before they are released, but since Apple Vision Pro is a new platform, it gave itself plenty of space. Developers were given about a year to work on their visionOS experiences, and developer kits are available with stringent secrecy protocols via a request application.

Estimates place the first year of shipments between 400,000 and 1 million units. The high price may prove prohibitive, but ambitious supply chain numbers indicate 10 million shipped in three years.

Apple Vision Pro became available to pre-order on January 19, 2024, starting at $3,499 with 256GB of storage, and shipped on February 2, 2024. It is bundled with two head straps, light shields, and the battery. The optical inserts are available online-only at $99 for reader-strength lenses and $149 for prescription-strength lenses.

Wesley Hilliard

Andrew Orr

William Gallagher

Marko Zivkovic

Malcolm Owen

Mike Wuerthele