Apple announced Apple Vision Pro during WWDC 2023 and an operating system to go with it — visionOS. It is Apple's first platform built for spatial computing.

Instead of building an isolating VR headset focused on transporting users to other worlds or a set of limited AR glasses that overlays apps on the real world, Apple chose a mixture of both. Primarily, visionOS is a mixed reality operating system that can adapt to the user's needs, be it complete isolation or social-friendly augmented computing.

The Home View is the center of the operating system, serving as an app launcher and environment picker. Floating icons and app windows reflect the light in the room and cast shadows.

Apple Vision Pro is Apple's first product to run visionOS, but it won't be the last. Rumors suggest it will eventually power a set of AR glasses dubbed Apple Glass.

visionOS 3

There aren't any rumors about what Apple could do with visionOS 3 at WWDC on June 9. It may see some slight design and interaction changes, but the update will likely focus on making Apple's apps native.

Apple Intelligence will likely be a significant part of the presentation since the contextual features were delayed. Apple Vision Pro only got AI features as of visionOS 2.4.

Every other Apple operating system is rumored to be getting a significant redesign that brings it closer to visionOS. While changes might be limited for platforms like tvOS 19 and watchOS 12 due to how those platforms work, iOS 19, iPadOS 19, and macOS 16 could get redesigns as extensive as iOS 7.

The redesigned elements are expected to have glassy surfaces that reflect light as the iPhone is moved around. Other changes include new styles for buttons and menus, plus a possible change to Apple's classic squircle icons on the Home Screen.

visionOS 2

visionOS 2 was revealed during WWDC 2024. It contains several new quality-of-life improvements like new navigation gestures and a better Safari video player.

Apple revealed Apple Intelligence as well, but it didn't come to Apple Vision Pro at launch. It arrived as part of visionOS 2.4 with every feature available, from Writing Tools to Notification Summaries.

Developers have more APIs for creating spatial experiences and 3D apps. Users can convert 2D photos to spatial 3D photos with stereoscopic effects, and the Home View can be reorganized.

The new gestures help address complaints from the first OS version. Users can look at the back of their hand for a button that opens the Home View, or flip their hand over to see a status bar that opens Control Center.

visionOS: going spatial

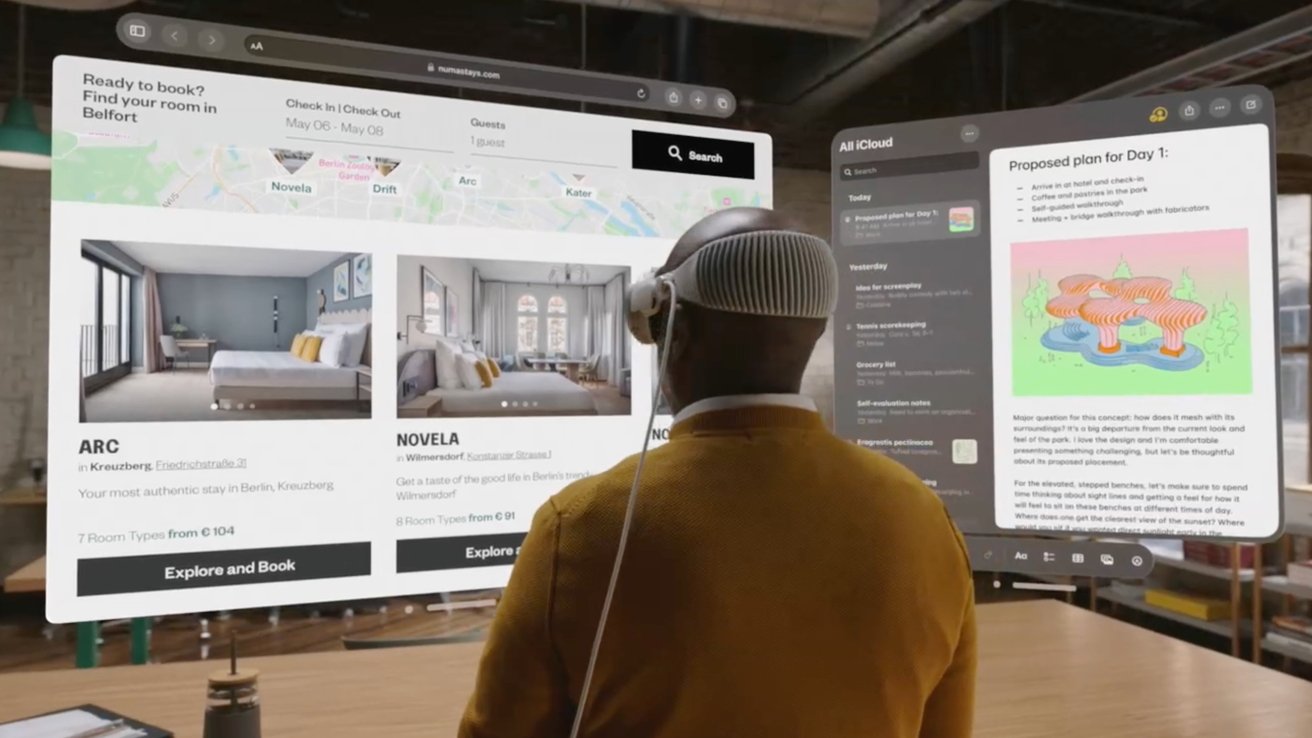

visionOS provides users with an infinite canvas to place apps in a 3D space. Apps can appear as 2D windows, include 3D objects called volumes, or expand to fill a space with an immersive view.

Design

Existing apps like Safari show up as 2D windows floating in the air, but with expanding menus and an address bar that floats outside the window. iPad and iPhone apps are constrained to their window boundary, similar to viewing the app on a display.

The Home View looks like a mix of tvOS and watchOS. Round icons arranged in a grid have a 2.5D effect that appears when the user looks at or interacts with them.

Cameras capture the room around the user and pass that through to the internal displays. With Apple Vision Pro on, the user is able to see a near-perfect representation of the real world with depth.

Objects and windows appear to have a physical presence with placement anchors, shadows, and transparency. ARKit plays a part in developing experiences, but 3D scenes have to be built with SwiftUI and RealityKit.

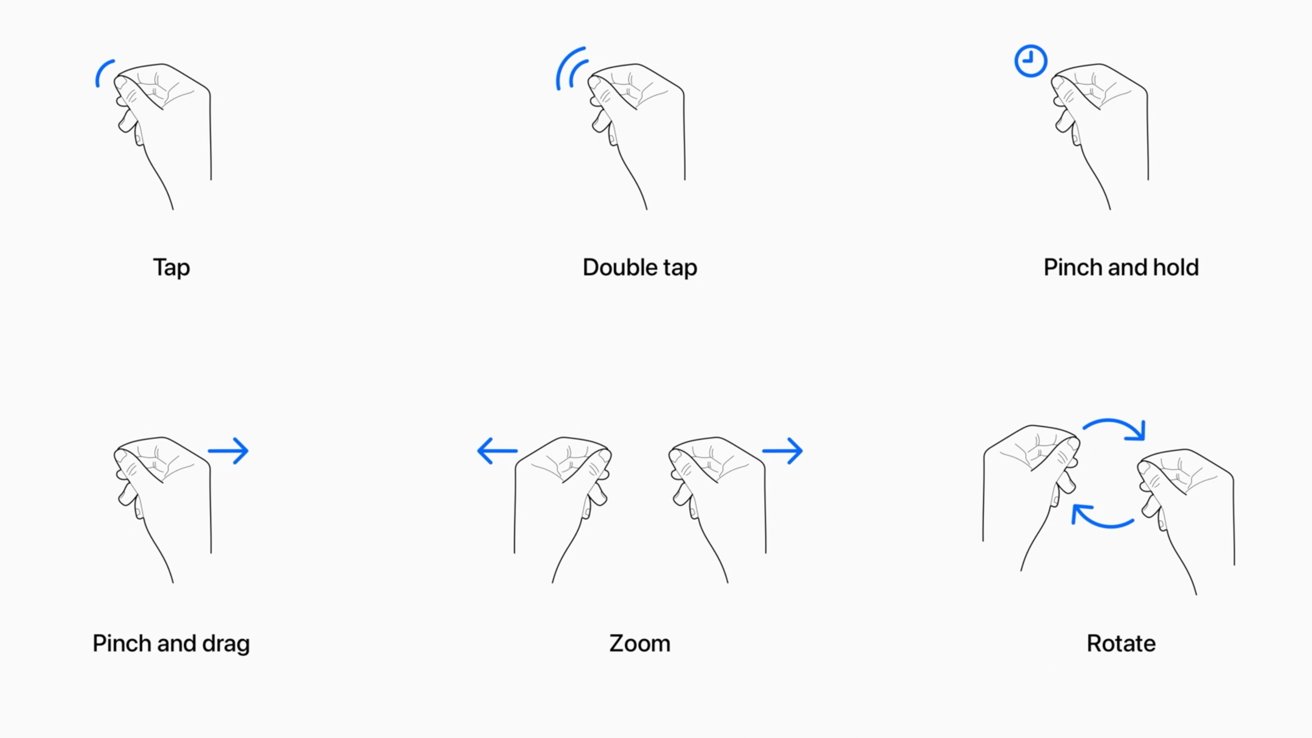

Gestures and control

Hand gestures, eye tracking, and voice controls enable software manipulation without additional hardware. There is a virtual keyboard, but users can utilize Bluetooth keyboards, the Magic Trackpad, or Universal Control from a Mac for input.

Eye tracking allows users to look at an object they wish to interact with. Selecting or grabbing objects is accomplished with a finger-pinching motion.

Look at a text field, pinch, then speak or type to fill it in. A setting to activate speech to text when looking at the microphone icon is enabled by default.

Objects will understand where the user is and what their hands are doing for further interaction. For example, placing a hand out might allow a virtual butterfly to land on a finger.

Force quitting apps is possible by holding down the Digital Crown and Top Button for a few seconds. Or, if it seems like tracking is off, recalibrate by pressing the Top Button four times in a row.

Apple seemed to prepare users for Double Tap on Apple Vision Pro by introducing a similar gesture for Apple Watch Series 9 and Apple Watch Ultra 2. When users perform a pinching motion with their thumb and another finger twice, it activates the active button on the Apple Watch.

Double Tap on Apple Watch is possible thanks to advanced algorithms and the S9 SiP processing multiple sensor signals. Apple Vision Pro is able to interpret the gesture using cameras, but Apple Watch might add another element to that control feature in a future update.

FaceTime

Even though the user will have their face obscured by a headset, FaceTime is still possible on Apple Vision Pro. You'll see other participants through floating windows while you'll be presented via a Persona — a digital representation of yourself.

A user's Persona is created by scanning their face with the Apple Vision Pro cameras. The 3D model looks like the user but has an element of the uncanny valley about it since it is fully computer generated.

Up to nine floating windows can be viewed during a FaceTime call. The usual limit of 32 participants is still there, but they won't all be visible.

Spatial Video

Owners of an iPhone 15 Pro or iPhone 15 Pro Max running iOS 17.2 or later can record Spatial Video that can be viewed on Apple Vision Pro. It is a 3D video recorded at 1080p and 30 frames per second.

Spatial Video can also be recorded while wearing the Apple Vision Pro. However, that may prove a bit awkward, especially in social environments.

While the resolution and frame rate aren't ideal, they shouldn't create poor experiences on the headset. Future iPhones should be able to record higher quality 3D videos, but what is captured today is sufficient for viewing within Apple Vision Pro.

The 3D effect is made possible by using the Main Camera and Ultra Wide Camera to capture optically separated video. When each video is played on a separate display in the headset, the user's eyes see a 3D video, as long as it was captured correctly.

The Apple TV app also offers 3D movies. Apple says over 150 3D titles were available at launch alongside a handful of 8K fully immersive videos.

Security

Apple Vision Pro has a new kind of biometric security — Optic ID. It scans the user's iris to authenticate logins, purchases, and more.

Optic ID works anywhere a person would use Touch ID or Face ID. Like those other biometric systems, iris data is encrypted and stored in the Secure Enclave so that it never leaves the device.

Apple also prevents apps from gaining valuable data from the device's cameras and sensors. While eye tracking and video passthrough occur during app use, they happen in a separate layer that can't be accessed for third-party data collection.

Like on iPhone or iPad, when it's time to authenticate something using Optic ID, the user confirms with a double-press of the Top Button. This prevents accidental authorization just by looking at a payment field.

Development

Apple has paved a clear path for developers to follow when creating apps and content for visionOS. However, developers were constrained at launch, with access only through limited in-person sessions or a simulator tool in Xcode.

There were only about 600 native apps available on Vision Pro at launch. With the hardware now available to customers and developers alike, more apps should reach the platform as developers get time with the device.

SwiftUI is the bedrock of coding for spatial computing. It has 3D-capable tools with support for depth, gestures, effects, and scenes.

RealityKit provides developers with the ability to generate 3D content, animations, and visual effects. It is able to adjust to physical lighting conditions and cast shadows.

ARKit can help an app understand a person's surroundings with things like Plane Estimation and Scene Reconstruction. This gives apps the ability to understand where a physical wall or floor is.
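As a rough illustration of the plane detection described above, the following Swift sketch asks ARKit on visionOS for horizontal and vertical planes and prints how each one is classified. The type name `PlaneWatcher` is hypothetical and not from Apple. The sketch also assumes the app has an immersive space open and that the user has granted world-sensing permission, since ARKit only delivers this data under those conditions.

```swift
import ARKit

// Hypothetical helper for illustration; the name PlaneWatcher is an assumption.
final class PlaneWatcher {
    private let session = ARKitSession()
    // Ask for both floors/tables (horizontal) and walls (vertical).
    private let planes = PlaneDetectionProvider(alignments: [.horizontal, .vertical])

    func run() async {
        // Plane detection is unavailable in some environments, such as the simulator.
        guard PlaneDetectionProvider.isSupported else {
            print("Plane detection is not supported here.")
            return
        }
        do {
            try await session.run([planes])
            // Each update reports a plane being added, updated, or removed.
            for await update in planes.anchorUpdates {
                let anchor = update.anchor
                print("\(update.event): \(anchor.classification), alignment \(anchor.alignment)")
            }
        } catch {
            print("ARKit session failed: \(error)")
        }
    }
}
```

Calling `await PlaneWatcher().run()` from a task started inside an open immersive space would begin logging detected surfaces such as walls, floors, and tables.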

However, apps built with ARKit are not automatically rendered in 3D. In fact, if an AR app built for iPhone is run in visionOS, it'll appear as a 2D plane showing content as if it were on an iPhone.

Developers will have to start over with RealityKit for 3D content. So no, you won't be catching Pokémon in your living room with full AR experiences just yet, at least not until Nintendo and Niantic develop an app specifically for that.

Note that Apple has avoided using industry-wide terms for its spatial computing platform. The 2D apps are called "windows," the 3D objects are called "volumes," and full environments are called "spaces" in place of terms like AR objects or VR experiences.
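To make the three terms concrete, here is a minimal sketch of how a visionOS app might declare one of each in SwiftUI, with RealityKit supplying the 3D content. The app name, scene identifiers, sizes, and positions are all illustrative assumptions. The code needs a visionOS target in Xcode to build.

```swift
import SwiftUI
import RealityKit

@main
struct SpatialSketchApp: App {   // hypothetical app name
    var body: some Scene {
        // A "window": ordinary 2D SwiftUI content floating in the room.
        WindowGroup(id: "main") {
            Text("Hello, visionOS")
                .padding()
        }

        // A "volume": a bounded 3D container, sized here in meters.
        WindowGroup(id: "globe") {
            SphereView(position: .zero)
        }
        .windowStyle(.volumetric)
        .defaultSize(width: 0.4, height: 0.4, depth: 0.4, in: .meters)

        // A "space": content placed in the user's surroundings.
        ImmersiveSpace(id: "surround") {
            // Roughly eye height and one meter ahead (illustrative values).
            SphereView(position: [0, 1.2, -1])
        }
    }
}

// Renders a simple blue sphere with RealityKit.
struct SphereView: View {
    let position: SIMD3<Float>

    var body: some View {
        RealityView { content in
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )
            sphere.position = position
            content.add(sphere)
        }
    }
}
```

Only the first scene opens at launch. The volume and the space would be opened from a view using the `openWindow` and `openImmersiveSpace` environment actions with the identifiers above.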

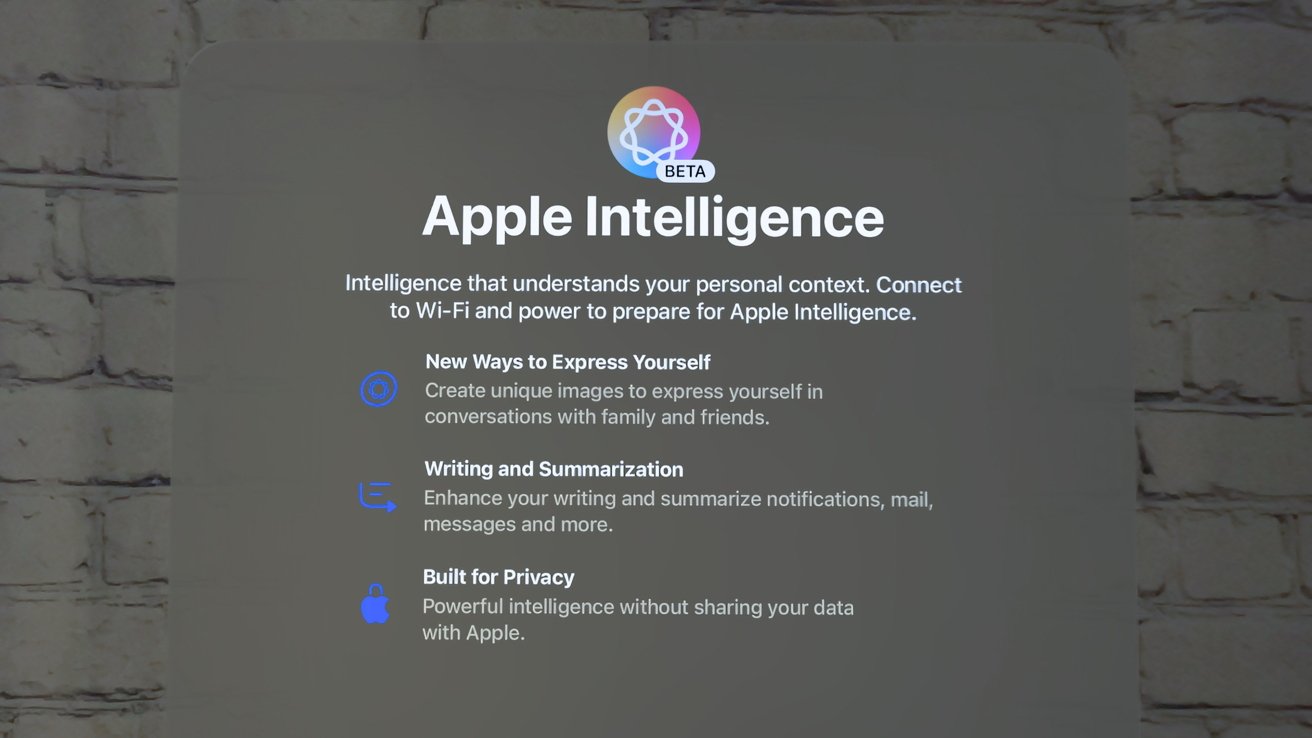

Apple Intelligence

Apple Vision Pro got access to Apple Intelligence with visionOS 2.4. Every public feature available on iPhone, iPad, and Mac made its way to the headset in a single release.

Access Writing Tools anywhere text input is available, use Image Playground to make images, make new emoji with Genmoji, summarize messages, and get priority notifications. The only feature that seemingly isn't available as of the beta run is access to ChatGPT via Siri.

Apple's AI models run on device and never train with user data. If something needs to get sent to Apple's Private Cloud Compute servers, it gets the same privacy and security protections as an on-device request.

A new app intent system that will enable Apple Intelligence to be more personal and contextual has been delayed until later in 2025. It will likely not emerge until a visionOS 3 release.

visionOS release and its future

Spatial computing is new territory for Apple, and it will take time for the products and software to mature. Apple Vision Pro is just the start in 2024, with a standard Apple Vision model expected in 2026 at a lower price and ultimately AR glasses.

Apple released Apple Vision Pro on February 2, 2024, alongside the operating system visionOS.

Malcolm Owen

Marko Zivkovic

Andrew O'Hara

Wesley Hilliard

Amber Neely
