Apple on Tuesday won a patent for an augmented reality (AR) system that can identify objects in a live video stream and present information about those objects through a computer-generated information layer overlaid on the real-world image.

Published by the U.S. Patent and Trademark Office, Apple's U.S. Patent No. 8,400,548 for "Synchronized, interactive augmented reality displays for multifunction devices" describes an AR system that draws on various iOS device features, including a multitouch screen, camera and internet connectivity, to deliver advanced AR functionality.

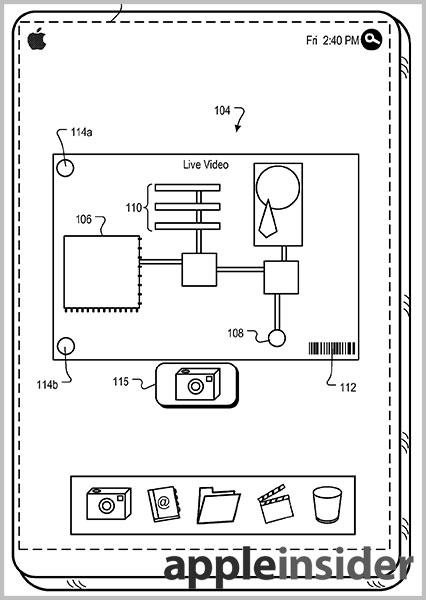

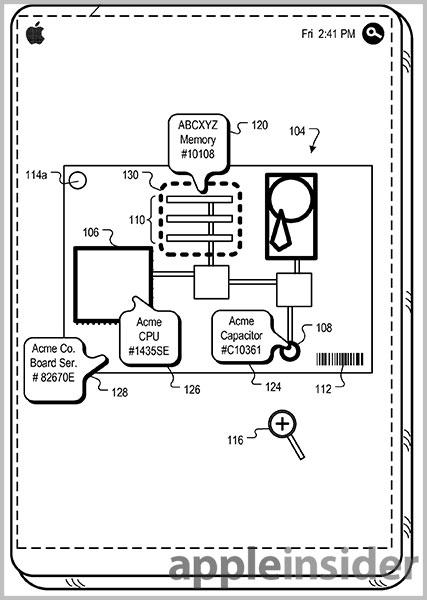

As with other AR systems, the '548 patent displays real-world images underneath a computer-generated layer of information. In the invention's example, a user holds a portable device over a circuit board. The live video shows various components, such as the processor, memory cards and capacitors, along with a bar code and markers.

In one embodiment, the live video is overlaid with a combined information layer, which can take the form of dashed or colored lines, annotations and other visual cues processed and generated by the device's on-board CPU. Annotations can be text, images or Web links, and provide real-time information about the corresponding objects. To identify objects, Apple's invention calls on existing recognition techniques, including edge detection, Scale-Invariant Feature Transform (SIFT), template matching, explicit and implicit 3D object models, 3D cues and Internet data, among many others. Recognition can be performed on the device or over the Web.

Unique to Apple's system is the ability for users to interact with the generated information. For example, if the detection system fails to identify an object correctly, the user can enter the correct annotation using on-screen controls. In some embodiments, users can send the live view data to another device over a wireless network. Annotations, such as circles or text, can be added to the image, and the two collaborators can talk with one another via voice communications.

Perhaps most interesting about the '548 patent is a split-screen view, in which the live and computer-generated views are shown in two separate windows. The computer-generated window can perform the usual recognition tasks described above, with manual entry available, or it can build an entirely new set of data.

In the example given, a user views downtown San Francisco in the live view window while the computer-generated image asset draws a real-time 3D composite of the scene. In this case, the generated asset can be used to compute a travel route with manually entered markers, which is then sent to a second user. The recipient can overlay the generated data on their own camera view and tap the included points of interest, called markers, to launch communication apps.

Taking the idea a step further, the split-screen AR data can be synchronized between two devices, meaning the real-time and computer-generated views of the first device are displayed on the second, and vice versa. This could be useful when two users are trying to find each other in an unfamiliar place, or when one user wants to give another basic directions.

Illustration of split-screen synchronization.

In most embodiments, embedded sensors, such as motion sensors and GPS, can be leveraged to improve accuracy and ease of use.

Other examples of AR use include medical diagnosis and treatment, in which a doctor can share a live view with a patient, complete with X-ray or other vital information.

Apple's '548 patent was first filed in 2010 and credits Brett Bilbrey, Nicholas V. King and Aleksandar Pance as its inventors.

Mikey Campbell

5 Comments

Nearly identical to Google Goggles, but with a balloon popup and a few minor UIs added???

Obviously, seeing as those you wear and this you… can't possibly wear without the assistance of at least one bungee cord.

TS, he's not referring to Google Glass. This is what he's talking about, which is pretty darn similar to what Apple was given a patent for. Certainly not inventive, but perhaps different enough to qualify for patent issuance. At least the USPTO examiner thought so.

http://www.google.com/mobile/goggles/#text

Goggles, Glass…

Next they'll make Google Glasses and Google Spectacles. Google Plexiglass and Google Giggles (unrelated) soon to follow…

You won't see Google Giggles until they release the Google Oogle (x-ray glasses). This product will be a favorite of the TSA gropers and teenaged boys.