The U.S. Patent and Trademark Office on Tuesday granted Apple a patent for a camera system that uses three separate sensors, one for luminance and two for chrominance, to generate images with both higher resolution and better color accuracy.

Apple's U.S. Patent No. 8,497,897 for "Image capture using luminance and chrominance sensors" describes a unique multi-sensor camera system that can be used in portable devices like the iPhone.

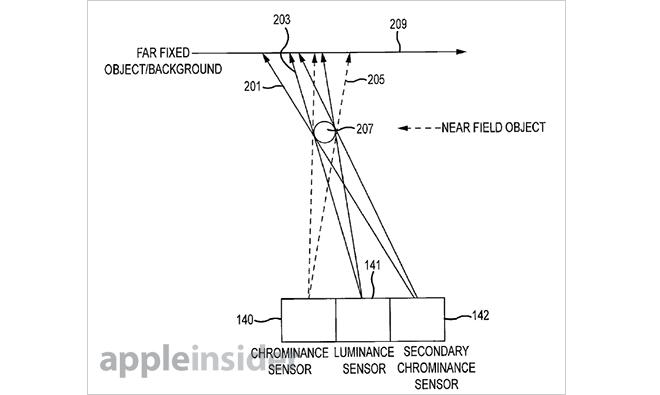

The main thrust of the patent is to combine three separate images generated by one luminance sensor disposed between two chrominance sensors. Each sensor has a "lens train," or lens assembly, in front of it that directs light toward the sensor surface. The document notes that the sensors can be disposed on a single circuit board, or separated.

Important to the system's functionality is sensor layout. In most embodiments, the luminance sensor is flanked on two sides by the chrominance sensors. This positioning allows the camera to compare information sourced from the three generated images. For example, an image processing module can take raw data from the three sensors, comprising luminance, color, and other data, to form a composite color picture. The resulting photo would be of higher quality than one from a system using a single unified sensor.
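As a rough illustration of how one luminance image and two chrominance images could be merged into a color picture, here is a minimal sketch. The patent does not name a color space or a merging rule; the JFIF-style YCbCr-to-RGB conversion, the simple averaging of the two chrominance samples, and the names `ycbcr_to_rgb` and `composite` are all assumptions made for this example. It also assumes the three images are already aligned pixel for pixel.

```python
def clamp(value):
    """Round to the nearest integer and clip to the 8-bit range 0..255."""
    return max(0, min(255, int(round(value))))


def ycbcr_to_rgb(y, cb, cr):
    """Convert one full-range YCbCr sample to RGB (JFIF-style constants, assumed)."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return (clamp(r), clamp(g), clamp(b))


def composite(luma, chroma_a, chroma_b):
    """Build an RGB image from one luminance image and two chrominance images.

    luma:      rows of Y values
    chroma_a:  rows of (Cb, Cr) tuples from the first chrominance sensor
    chroma_b:  rows of (Cb, Cr) tuples from the second chrominance sensor
    All three inputs are assumed to be aligned and the same size.
    """
    image = []
    for y_row, a_row, b_row in zip(luma, chroma_a, chroma_b):
        row = []
        for y, (cb_a, cr_a), (cb_b, cr_b) in zip(y_row, a_row, b_row):
            # Hypothetical merging rule: average the two color samples.
            cb = (cb_a + cb_b) / 2
            cr = (cr_a + cr_b) / 2
            row.append(ycbcr_to_rgb(y, cb, cr))
        image.append(row)
    return image
```

In this sketch the luminance sensor alone sets brightness detail, while the two chrominance sensors only contribute color, which mirrors the division of labor the patent describes.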

To execute an effective comparison of the two chrominance sensor images, a stereo map is created so that differences, or redundancies, can be measured. Depending on the situation and system setup (filters, pixel count, etc.), the stereo map is processed and combined with data from the luminance sensor to create an accurate scene representation.
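To make the idea of a stereo map concrete, the sketch below estimates per-pixel disparity along a single scanline by block matching with a sum of absolute differences. This is a generic textbook technique chosen for illustration, not the method claimed in the patent; the function name `disparity_row` and the `window` and `max_shift` parameters are hypothetical.

```python
def disparity_row(left, right, window=1, max_shift=4):
    """Estimate disparity for each pixel of one scanline.

    For every position x in `left`, try shifts d = 0..max_shift and keep the
    one whose neighborhood in `right` (centered at x - d) has the smallest
    sum of absolute differences. Positions whose window falls outside either
    row for every shift keep the default disparity of 0.
    """
    n = len(left)
    result = []
    for x in range(n):
        best_shift, best_cost = 0, float("inf")
        for d in range(max_shift + 1):
            cost = 0
            valid = True
            for k in range(-window, window + 1):
                xl, xr = x + k, x + k - d
                if not (0 <= xl < n and 0 <= xr < n):
                    valid = False
                    break
                cost += abs(left[xl] - right[xr])
            # Strict comparison: on ties the smaller shift wins.
            if valid and cost < best_cost:
                best_shift, best_cost = d, cost
        result.append(best_shift)
    return result


# A small feature (9, 5, 7) appears two pixels further left in the second view.
left_view = [0, 0, 0, 9, 5, 7, 0, 0, 0, 0]
right_view = [0, 9, 5, 7, 0, 0, 0, 0, 0, 0]
shifts = disparity_row(left_view, right_view)
```

Larger disparities correspond to nearer objects, which is what lets an image processor tell where the two views disagree.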

Source: USPTO

The stereo map also solves a "blind spot" issue that arises when using three sensors with three lens trains. The patent offers the example of an object in the foreground obscuring an object in the background (as seen in the first illustration). Depending on the scene, color information may be non-existent for one sensor, which would negatively affect a photo's resolution.

To overcome this inherent flaw, one embodiment proposes that the two chrominance sensors be offset so that their blind regions do not overlap. If a nearby object creates a blind region for the first sensor, the offset allows the image processor to replace the compromised image data with information from the second sensor.
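The replacement step can be pictured with a short sketch. It assumes, purely for illustration, that each chrominance sensor delivers a row of `(Cb, Cr)` samples in which a blind-region pixel is marked `None`; the patent does not specify such a representation, and `merge_chroma` is a hypothetical helper.

```python
def merge_chroma(first, second):
    """Merge two aligned rows of chrominance samples.

    Each sample is a (Cb, Cr) tuple, or None where that sensor's view is
    blocked. Where both sensors see the scene the samples are averaged;
    where only one does, its sample is used on its own.
    """
    merged = []
    for a, b in zip(first, second):
        if a is not None and b is not None:
            merged.append(((a[0] + b[0]) / 2, (a[1] + b[1]) / 2))
        elif a is not None:
            merged.append(a)
        else:
            # Either the second sensor fills the gap, or both are blind (None).
            merged.append(b)
    return merged


row = merge_chroma(
    [(100, 120), None, None],
    [(110, 130), (90, 140), None],
)
```

Because the offset keeps the two blind regions from overlapping, the case where both samples are missing should be rare in the arrangement the patent describes.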

Further, the image processor can use the stereo disparity map created from data generated by the two chrominance sensor images to compensate for distortion.

Other embodiments call for varied resolutions or lens configurations for the chrominance and luminance sensors, including larger apertures, different filters, or modified image data collection. These features could enhance low-light picture taking, for example, by compensating for lack of luminance with information provided by a modified chrominance sensor. Here, as with the above embodiments, the image processor is required to compile data from all three sensors.

While Apple is unlikely to implement the three-sensor camera tech anytime soon, a future iPhone could theoretically carry such a platform.

Apple's luminance and chrominance sensor patent was first filed in 2010 and credits David S. Gere as its inventor.

Mikey Campbell


21 Comments

Fun patent. It would be possible to have for example 12Mpix x3 setup for 36mpix camera. I doubt however that Apple will use 3 lenses. It would cost too much. (even if it just cost 1 dollar. Apple sells 120 million iPhones per year. That is almost 300 million in extra cost)

@shompa - no need to worry: - Samsung will have a phone using it before Apple; - Apple will sue; - Nokia will sue Apple because they're doing something "similar" in Lumia, first; - Sony will jump at the chance to say they thought of it first; - Engadget, Verge, Ars and countless other sites will claim it as "obvious"; - Fans of Android will claim to have seen the same sensor on Star-Trek in 1971, so "prior art"; - Any and all lawsuits will drag out for 5 years; - USPTO will invalidate Apple's original patent; Summary: not worth the time or ink to register the patent. End. Of. Story. /s

Is this like Photoshop's LAB mode?

[quote name="ThePixelDoc" url="/t/158776/apple-patents-three-sensor-three-lens-iphone-camera-for-enhanced-color-photos#post_2370545"]@shompa - no need to worry: - Samsung will have a phone using it before Apple; - Apple will sue; - Nokia will sue Apple because they're doing something "similar" in Lumia, first; - Sony will jump at the chance to say they thought of it first; - Engadget, Verge, Ars and countless other sites will claim it as "obvious"; - Fans of Android will claim to have seen the same sensor on Star-Trek in 1971, so "prior art"; - Any and all lawsuits will drag out for 5 years; - USPTO will invalidate Apple's original patent; Summary: not worth the time or ink to register the patent. End. Of. Story. /s[/quote] Best post of the day :D

[quote name="ThePixelDoc" url="/t/158776/apple-patents-three-sensor-three-lens-iphone-camera-for-enhanced-color-photos#post_2370545"]@shompa - no need to worry: - Samsung will have a phone using it before Apple; - Apple will sue; - Nokia will sue Apple because they're doing something "similar" in Lumia, first; - Sony will jump at the chance to say they thought of it first; - Engadget, Verge, Ars and countless other sites will claim it as "obvious"; - Fans of Android will claim to have seen the same sensor on Star-Trek in 1971, so "prior art"; - Any and all lawsuits will drag out for 5 years; - USPTO will invalidate Apple's original patent; Summary: not worth the time or ink to register the patent. End. Of. Story. /s[/quote] Apple's patent filings are Samsung's R&D.