The U.S. Patent and Trademark Office on Thursday published an Apple patent describing a device with built-in pressure sensors that work in concert with touchscreen input to provide enhanced UI navigation.

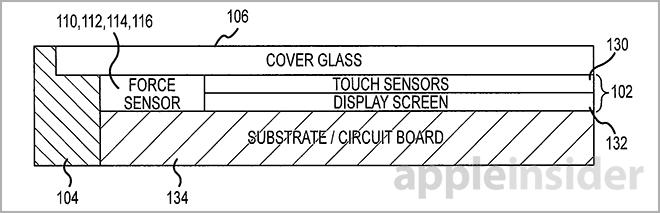

Apple's "Gesture and touch input detection through force sensing" patent application details a device that uses the usual multitouch display seen in the iPhone and iPad, but adds at least three force sensors underneath the screen's surface. By deploying the pressure-sensitive components at the corners of the device, or at other known locations, the sensor readings can be translated into a secondary mode of input.

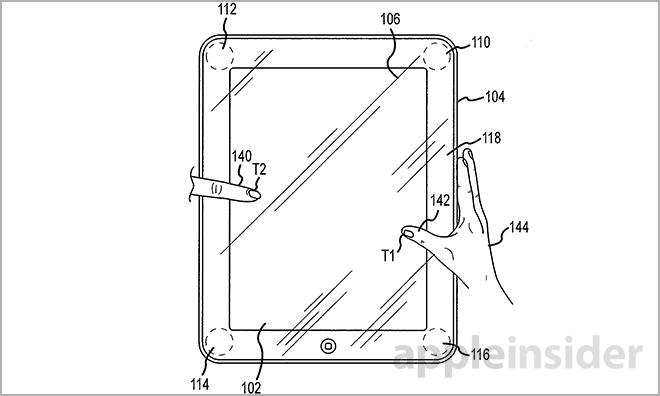

The filing notes that current forms of touch control, while accurate, may not be sufficient to detect certain multitouch gestures. For example, if a user were to invoke a gesture from the edge of a device's screen, as in a left or right swipe, the input may not be logged as intended.

Ideal embodiments of the invention include at least three force sensors, but the deployment of four or more is typical in such systems. The sensors must be operatively attached to the touch surface, such as a display, though not necessarily in areas seen by the user. An example would be the masked-out bezel area on an iPhone's display.

In addition, force sensors do not need to be co-located with capacitive touch regions as they act independently of said system. Instead, selective sensor distribution is used to determine where a user is pressing during a given gesture.

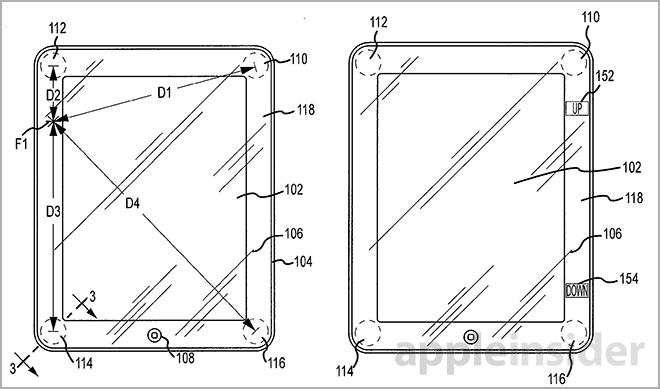

When an input force is detected, each sensor registers a different pressure value, and these values can be combined to calculate a "force centroid," or force origin point. For example, when a user exerts force near the top right corner of a device, the sensor disposed adjacent to that corner will register a certain force value. Likewise, sensors located in other areas will register different values, likely with a lower amplitude.
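As a rough illustration of the idea, the centroid can be treated as a force-weighted average of the sensor positions. The sketch below is not taken from the filing: the four-corner sensor layout, the panel dimensions, the readings, and the `force_centroid` name are all assumptions made for the example.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def force_centroid(positions: Sequence[Point], forces: Sequence[float]) -> Point:
    """Return the force-weighted average of the sensor positions."""
    total = sum(forces)
    if total <= 0:
        raise ValueError("no force detected")
    x = sum(f * px for f, (px, _) in zip(forces, positions)) / total
    y = sum(f * py for f, (_, py) in zip(forces, positions)) / total
    return (x, y)


# Hypothetical layout: one sensor at each corner of a 100 x 150 panel,
# with the origin at the top left and y growing downward.
SENSORS = [(0.0, 0.0), (100.0, 0.0), (0.0, 150.0), (100.0, 150.0)]

# Hypothetical readings for a press near the top right corner:
# the sensor at (100, 0) registers the largest value.
READINGS = [1.0, 6.0, 0.5, 2.5]

centroid = force_centroid(SENSORS, READINGS)
```

With these made-up readings the centroid lands toward the top right of the panel, pulled there by the largest sensor value.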

By analyzing the force centroid, one or more touch inputs and/or force inputs can be resolved from a single cohesive mechanism. In one example, the centroid can be used in conjunction with a touch input to determine that a swipe gesture originated offscreen. Another embodiment can use the data to filter out accidental touches.
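One way to picture the offscreen swipe case is to compare where force was first felt with where the touchscreen first reported contact. The following is a minimal sketch under assumed conditions, not the method claimed in the filing: the rectangle format, the `edge_margin` threshold, and the function names are hypothetical.

```python
from typing import Tuple

Point = Tuple[float, float]
Rect = Tuple[float, float, float, float]  # left, top, right, bottom


def inside(rect: Rect, p: Point) -> bool:
    """True when point p lies within the rectangle, edges included."""
    left, top, right, bottom = rect
    return left <= p[0] <= right and top <= p[1] <= bottom


def swipe_started_offscreen(active_area: Rect, first_centroid: Point,
                            first_touch: Point, edge_margin: float = 5.0) -> bool:
    """Guess whether a swipe began on the bezel.

    Assumption: the swipe began offscreen if the first force centroid fell
    outside the active touch area while the first touch the screen reported
    was close to one of its edges.
    """
    left, top, right, bottom = active_area
    distance_to_edge = min(first_touch[0] - left, right - first_touch[0],
                           first_touch[1] - top, bottom - first_touch[1])
    return distance_to_edge <= edge_margin and not inside(active_area, first_centroid)


# Hypothetical active area inset 10 units from the edge of a 100 x 150 panel.
ACTIVE = (10.0, 10.0, 90.0, 140.0)

from_bezel = swipe_started_offscreen(ACTIVE, (4.0, 70.0), (11.0, 70.0))
mid_screen = swipe_started_offscreen(ACTIVE, (50.0, 70.0), (50.0, 70.0))
```

Here `from_bezel` describes force first felt on the left bezel followed by a touch at the screen's left edge, while `mid_screen` describes an ordinary touch in the middle of the display.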

The system can act in the same way as current palm rejection technology, but on a more granular level. An example provided illustrates a user resting their thumb on one portion of a display while interacting with the UI via another finger. Without force sensor tech, the GUI would recognize the motion as a multitouch event rather than reject the inadvertent thumb touch.
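To illustrate how a centroid might help separate a resting thumb from a deliberate press, the sketch below estimates how the total force is shared between two reported touches. It assumes the centroid lies roughly on the line between the two touch points and that the intentional touch carries most of the force; the threshold and all names are hypothetical rather than drawn from the filing.

```python
from typing import Tuple

Point = Tuple[float, float]


def force_shares(p1: Point, p2: Point, centroid: Point) -> Tuple[float, float]:
    """Estimate the fraction of total force applied at p1 and at p2.

    The centroid is projected onto the segment p1 -> p2. The closer it sits
    to one touch, the larger that touch's share of the force.
    """
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        raise ValueError("touch points coincide")
    t = ((centroid[0] - p1[0]) * dx + (centroid[1] - p1[1]) * dy) / length_sq
    t = min(1.0, max(0.0, t))  # clamp in case the centroid overshoots an end
    return (1.0 - t, t)


# Hypothetical scene: thumb resting low on the display, finger pressing higher up.
THUMB = (10.0, 140.0)
FINGER = (60.0, 40.0)
CENTROID = (55.0, 50.0)

thumb_share, finger_share = force_shares(THUMB, FINGER, CENTROID)

# Assumed rule: ignore any touch carrying less than 20 percent of the force.
REJECT_BELOW = 0.2
thumb_rejected = thumb_share < REJECT_BELOW
```

In this made-up scene the centroid sits close to the finger, so the thumb's small share of the force falls below the assumed threshold and the touch would be discarded.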

In some embodiments, the force sensors are positioned outside of the active touch area. This may allow for a secondary mode of input that correlates to onscreen user interface elements like buttons or arrows. In such cases, required input for a UI graphic is offloaded beyond the border of a touchscreen's active surface, thereby freeing up much needed display space.

The remainder of the filing deals with centroid calculation and further refinement of control software.

In November, Apple was granted a patent for a similar system in which force sensors were disposed beneath an iPhone's surface glass. That property, however, focused on input based on varying degrees of pressure rather than selective location.

Apple's force sensor patent application was first filed in 2012 and credits Nima Parivar and Wayne C. Westerman as its inventors.

Mikey Campbell

7 Comments

Is this really going to be that accurate with sensors only around the bezel in a display the size of the iPad Air? Seems rather a crude method, just throwing pressure sensors in there, and not in a uniform way across the whole display. I would have hoped for something cleverer like measuring the relative surface area of the pressure point, or detecting blood flow, or some other smart metric.

I imagine if implemented they would do just that. This is just a single patent filing concentrating on one aspect/method rather than a guide to their overall implementation ;)

Exactly, most people tend to apply pressure to the bezel in different ways when holding the device. I'm sure Apple has thought of that, I hope.

Yes, gestures from the border are great, but the hardware has to be improved to better detect them for sure.

I predicted this long ago. Bye bye home button. Now the screen can grow without a larger footprint.