An Apple patent published on Tuesday details a miniaturized iPhone camera system that employs a light-splitting cube to parse incoming rays into three color components, each of which is captured by a separate sensor.

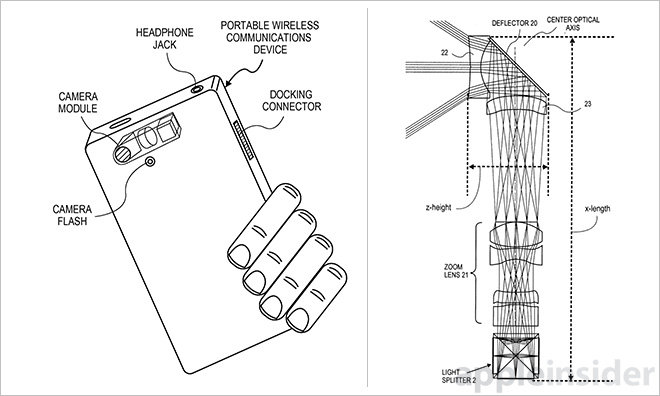

As granted by the U.S. Patent and Trademark Office, Apple's U.S. Patent No. 8,988,564 for a "Digital camera with light splitter" examines the possibilities of embedding a three-sensor prism-based camera module within the chassis of a thin wireless device, such as an iPhone. Because each sensor records a single color at every pixel, light-splitting systems do not require color channel processing or demosaicing, thereby maximizing pixel array resolution.
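The resolution point can be illustrated with simple counting. The sketch below is not taken from the patent: it assumes a conventional RGGB Bayer mosaic for the single-sensor case, and the function names and the 8-megapixel dimensions are hypothetical, chosen only for illustration.

```python
def bayer_samples(width, height):
    """Per-channel sample counts for an RGGB Bayer mosaic (even dimensions assumed)."""
    total = width * height
    # Each 2x2 tile holds one red, two green and one blue photosite.
    return {"R": total // 4, "G": total // 2, "B": total // 4}


def three_sensor_samples(width, height):
    """Per-channel sample counts when each color has its own full sensor."""
    total = width * height
    return {"R": total, "G": total, "B": total}


if __name__ == "__main__":
    width, height = 3264, 2448  # illustrative 8-megapixel array, not from the patent
    mosaic = bayer_samples(width, height)
    split = three_sensor_samples(width, height)
    for channel in "RGB":
        gain = split[channel] / mosaic[channel]
        print(f"{channel}: mosaic={mosaic[channel]:,} split={split[channel]:,} ({gain:.0f}x)")
```

Under these assumptions a mosaic sensor measures red and blue at a quarter of its photosites and green at half, then interpolates the rest, while a three-sensor layout measures every channel at every pixel.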

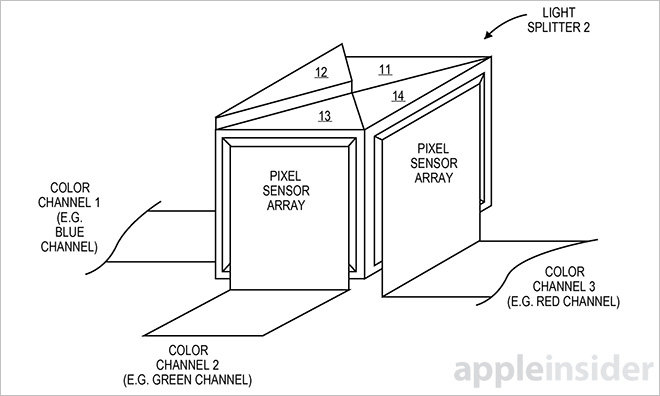

Commonly found in prosumer video cameras, and more recently in handheld camcorder models, three-sensor imaging technology splits incident light entering a camera into three wavelength bands, or colors, using a prism or prisms. Usually identified as red, green and blue, the split images are picked up by dedicated imaging sensors normally arranged on or close to the prism face.

Older three-CCD cameras relied on the tech to more accurately capture light and negate the "wobble" effect seen with a single energy-efficient CMOS chip. Modern equipment employs global shutter CMOS modules that offer better low-light performance and comparable color accuracy, opening the door to entirely new shooting possibilities.

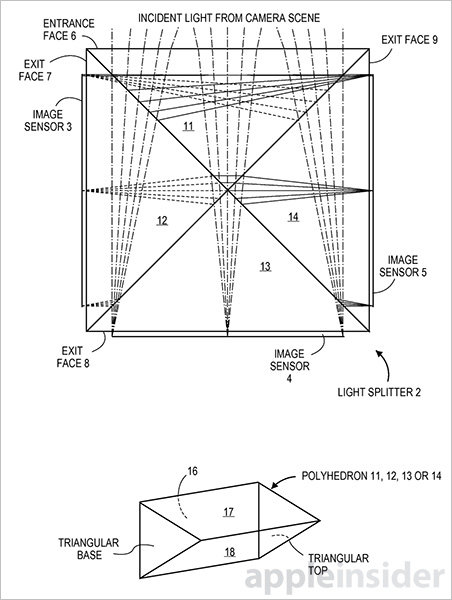

Apple's design uses light splitting techniques similar to those applied in current optics packages marketed by Canon, Panasonic, Philips and other big-name players in the camera space. For its splitter assembly, Apple uses a cube arrangement constructed using four identical polyhedrons that meet at dichroic interfaces.

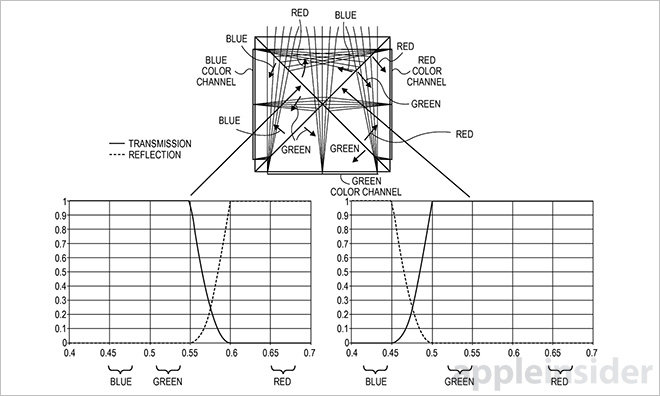

By applying an optical coating to each interface, particular wavelengths of incident light can be reflected or allowed to transmit through to an adjoining polyhedron. Adjusting dichroic filters allows Apple to parse out red, green and blue wavelengths and send them off to three sensors positioned around the cube. Aside from RGB, the patent also allows for other color sets like cyan, yellow, green and magenta (CYGM) and red, green, blue and emerald (RGBE), among others.

Light splitters also enable other desirable effects like sum and difference polarization, which achieves the same results as polarization imaging without filtering out incident light. The process can be taken a step further to enhance image data for feature extraction, useful in computer vision applications.
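As a rough illustration of the sum and difference idea, the sketch below assumes two sensors that each receive one of two orthogonal polarization components from the splitter. That arrangement, the function names and the sample values are assumptions for illustration, not details from the patent.

```python
def sum_and_difference(i_horizontal, i_vertical):
    """Combine two orthogonally polarized intensity images, pixel by pixel.

    The sum recovers total intensity (no light is discarded by a filter);
    the difference carries the polarization signal.
    """
    total = [h + v for h, v in zip(i_horizontal, i_vertical)]
    difference = [h - v for h, v in zip(i_horizontal, i_vertical)]
    return total, difference


def polarization_contrast(total, difference, eps=1e-9):
    """Normalized difference per pixel; 0.0 where there is effectively no light."""
    return [d / t if t > eps else 0.0 for t, d in zip(total, difference)]


if __name__ == "__main__":
    # Hypothetical three-pixel row: strongly polarized, unpolarized, dark.
    horizontal = [3.0, 1.0, 0.0]
    vertical = [1.0, 1.0, 0.0]
    total, difference = sum_and_difference(horizontal, vertical)
    print(total, difference, polarization_contrast(total, difference))
```

The normalized difference is one simple feature that a computer vision pipeline could extract from such data. Other combinations are possible.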

Infrared imaging can also benefit from a multi-sensor setup, as the cube can be tuned to suppress visible wavelength components, or vice versa.

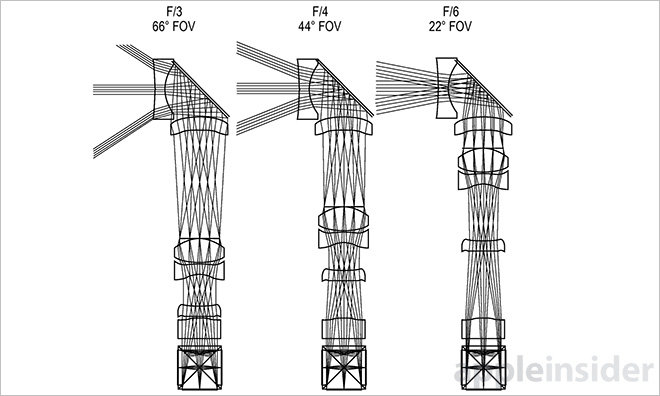

Tuesday's patent is an extension of a previously published Apple invention that uses mirrors and optics to achieve optical image stabilization without eating up valuable space. In such embodiments a foldable, or mobile, mirror redirects incoming light, bouncing it toward a rectangular tube that terminates with a cuboid light splitter. In between the mirror and sensor package is a series of lenses that can be operably moved for focus and zooming. Motors vary the mirror's angle to offset hand shake recorded by onboard sensors.
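The mirror-based stabilization described above follows from the law of reflection: tilting a flat mirror by some angle turns the reflected ray by twice that angle. The sketch below is a simplified single-axis, two-dimensional model, not Apple's control scheme. The function names and the 45-degree fold geometry are assumptions.

```python
import math


def reflected_angle(ray_angle, normal_angle):
    """Direction angle (radians) of a 2D ray after reflecting off a flat mirror."""
    dx, dy = math.cos(ray_angle), math.sin(ray_angle)
    nx, ny = math.cos(normal_angle), math.sin(normal_angle)
    dot = dx * nx + dy * ny
    # Standard reflection: r = d - 2 (d . n) n
    rx, ry = dx - 2 * dot * nx, dy - 2 * dot * ny
    return math.atan2(ry, rx)


def mirror_correction(shake_angle):
    """Mirror tilt (relative to the body) that cancels a body rotation.

    A mirror tilt turns the reflected ray by twice the tilt, so half the
    shake angle, applied in the opposite sense, is enough.
    """
    return -shake_angle / 2.0


if __name__ == "__main__":
    normal = math.radians(135)   # hypothetical fold mirror: +x ray leaves along +y
    shake = math.radians(0.5)    # hypothetical hand shake reported by a gyroscope
    # In the camera's frame, a body rotation of +shake makes the scene ray appear at -shake.
    uncorrected = reflected_angle(-shake, normal)
    corrected = reflected_angle(-shake, normal + mirror_correction(shake))
    print(math.degrees(uncorrected), math.degrees(corrected))
```

In this model the uncorrected ray leaves the mirror half a degree off axis, while the corrected ray returns to 90 degrees, toward the lens tube.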

It is unclear if Apple intends to implement a light splitter in a future product, though the company is working hard to keep the iPhone at the fore of ultra-mobile photography. For example, the latest iPhone 6 Plus includes an OIS system that produces sharp images even in low-light situations.

Apple's cube light splitter camera system patent was first filed in 2011 and credits Steven Webster and Ning Y. Chan as its inventors.

Mikey Campbell


42 Comments

Just make the damn thing, Apple! Seriously, I'd be all over a new iPhone that is a seriously good photography tool. To that end I would hope that they don't miniaturize it to the point that any optical benefit goes out the window. Hopefully they can hit 12 megapixels while upping light gathering efficiency significantly. That is, 12 megapixels that are measurably of higher quality than what we get now.

Very, very interesting. Now implement it in a product and take my money!

The problem with this system is that it would mean less light hits the sensor array due to the mirror and additional glass in the way. This means noisier images and slower shutter speeds, let alone the fact that there are more mechanical parts to go wrong. I wouldn't hold your breath for this to be realistically an option for a while. Sensor tech would need to improve substantially to obviate the lack of light.

You forgot that they can put in three times the sensor area and they don't need the colour filters on the sensor dots anymore or to interpolate the colours.

The Dark Side of the Moon tech