A patent application filed with the U.S. Patent and Trademark Office on Thursday reveals Apple is continuing to explore light field camera applications, in this case imagining how users would manipulate image data to change focus or depth of field in a photo editing app.

Published under the wordy title "Method and UI for Z depth image segmentation," Apple's application covers the software side of light field imaging, describing an application that edits data produced by highly specialized imaging sensors. At its most basic level, the filing imagines steps a user could take to focus, and later refocus, an image captured by a light field camera.

Apple starts off by outlining hardware capable of producing the imaging data necessary for advanced refocusing computations. A generic "light field camera" is described as a camera that captures not only light, but also information about the direction from which light rays enter the system. This rich data set can be used to produce a variety of images, each with a slightly different perspective.

Such specialized imaging sensors do exist in the real world, perhaps most visibly in equipment made by consumer camera maker Lytro. Alternatively, the document could be referring to a plenoptic camera that uses a microlens array to direct light onto a traditional CMOS chip, achieving similar results with less fuss. Apple coincidentally owns IP covering such implementations.

One of the main features of a light field camera is its ability to recalculate depth of field, the range of distances over which objects appear acceptably sharp. In a conventional camera, that range is a function of aperture size and focusing distance.

A large aperture results in a shallow depth of field, meaning only objects that fall within a tight depth range are in focus, while small apertures offer a wider depth of field. In a highly simplified example, objects between two and four feet away from a camera system set to an aperture of f/2 at a specific focusing distance may come out pin-sharp in a photo. Conversely, the same camera system set to an aperture of f/16 or smaller would reproduce objects in sharp focus from two feet to infinity.
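To put rough numbers on the aperture relationship, the sketch below uses the standard thin-lens approximations for hyperfocal distance and near/far focus limits. It is not taken from Apple's filing. The 50mm focal length, the 0.03mm circle of confusion and the function names are all assumptions chosen for illustration.

```python
import math


def hyperfocal_mm(focal_mm, f_number, coc_mm=0.03):
    """Hyperfocal distance H = f^2 / (N * c) + f, in millimeters.

    coc_mm is the circle of confusion; 0.03 mm is an assumed,
    commonly quoted full-frame value, not a figure from the article.
    """
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def depth_of_field_mm(focal_mm, f_number, focus_mm, coc_mm=0.03):
    """Return (near_limit, far_limit) of acceptable sharpness in millimeters."""
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = focus_mm * (h - focal_mm) / (h + focus_mm - 2 * focal_mm)
    # Focusing at or beyond the hyperfocal distance keeps everything
    # out to infinity acceptably sharp.
    if focus_mm >= h:
        far = math.inf
    else:
        far = focus_mm * (h - focal_mm) / (h - focus_mm)
    return near, far


if __name__ == "__main__":
    # Hypothetical 50mm lens focused at one meter, wide open vs. stopped down.
    for n in (2, 16):
        near, far = depth_of_field_mm(50, n, 1000)
        print(f"f/{n}: sharp from {near:.0f} mm to {far:.0f} mm")
```

With these assumed values, the f/16 range comes out several times deeper than the f/2 range, which is the behavior described above. A light field system recomputes this kind of range in software after capture instead of fixing it at the moment of exposure.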

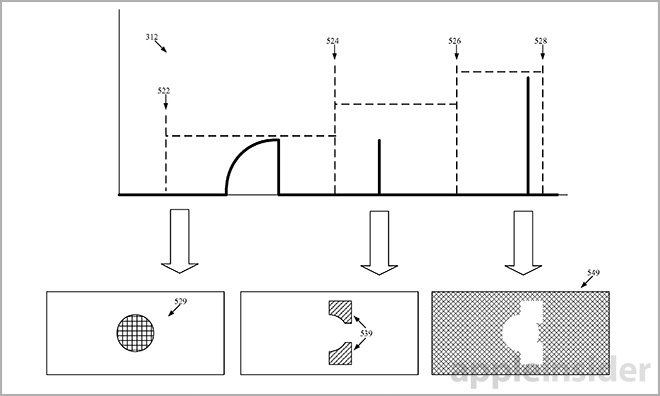

In a light field system, data related to light ray direction is processed to determine the depth of an object or objects in frame. Apple's invention uses this depth information to parse an image into layers, which can then be altered or even removed by an editing application.

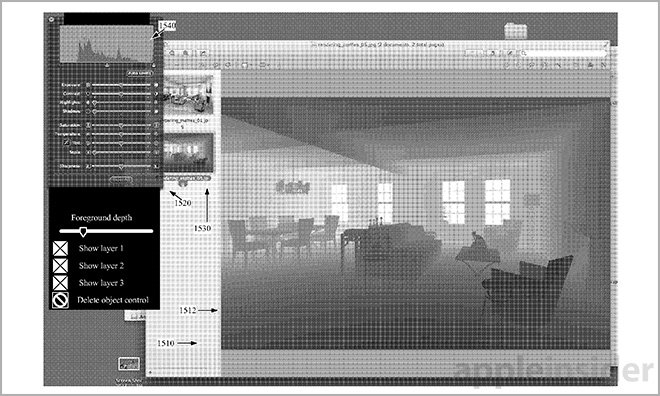

In one embodiment, Apple's system ingests light field image data, processes it and generates a histogram displaying depth versus number of imaging pixels. Analyzing the histogram, the system can determine peaks and valleys from which image layers are formed.
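The histogram step can be sketched in a few lines. The example below is only an illustration and not the method claimed in the filing: it bins per-pixel depth values, then treats any run of bins above a threshold as a peak (one layer) and anything at or below the threshold as a valley. The function names, the bin count and the thresholding shortcut are all assumptions.

```python
def depth_histogram(depths, num_bins, max_depth):
    """Count how many pixels fall into each depth bin."""
    counts = [0] * num_bins
    for d in depths:
        # Clamp so a pixel exactly at max_depth lands in the last bin.
        idx = min(int(d * num_bins / max_depth), num_bins - 1)
        counts[idx] += 1
    return counts


def segment_layers(counts, valley_threshold=0):
    """Group consecutive bins above the threshold into layers.

    Returns a list of (first_bin, last_bin) tuples, nearest layer first.
    Bins at or below valley_threshold are treated as valleys that
    separate one layer from the next.
    """
    layers = []
    start = None
    for i, count in enumerate(counts):
        if count > valley_threshold and start is None:
            start = i  # a new peak begins
        elif count <= valley_threshold and start is not None:
            layers.append((start, i - 1))  # the peak ended at the previous bin
            start = None
    if start is not None:
        layers.append((start, len(counts) - 1))
    return layers


if __name__ == "__main__":
    # Hypothetical per-pixel depths in meters: three clusters of objects.
    depths = [1.0, 1.1, 1.2, 1.1, 5.0, 5.2, 5.1, 9.5, 9.6]
    counts = depth_histogram(depths, num_bins=10, max_depth=10.0)
    print(counts)
    print(segment_layers(counts))
```

A production system would likely smooth the histogram before looking for valleys, since real depth data is noisy. The fixed threshold here stands in for that step.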

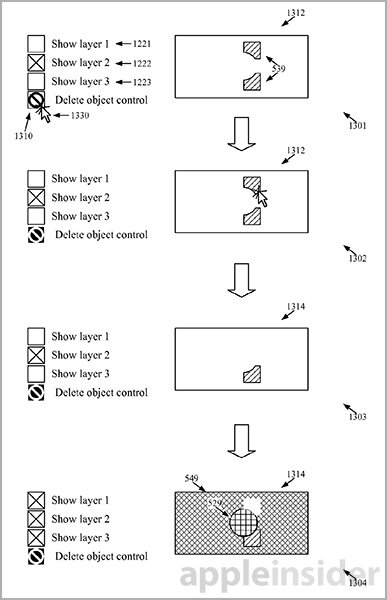

Each layer corresponds to a certain depth in the light field image. Applying a UI tool, such as a slider bar, users can instruct the system to show foreground layers while obscuring background layers. Manipulating the depth slider causes certain layers to pop into and out of focus.
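As a rough illustration of the slider idea, the sketch below assumes each pixel has already been assigned a layer index (0 for the nearest layer) and hides every pixel whose layer lies behind the slider position. The data layout and function names are hypothetical, and simply blanking pixels is a stand-in for whatever blurring or dimming an actual editor would apply.

```python
def visible_mask(layer_map, slider):
    """Return a 2D list of booleans: True where a pixel's layer is shown.

    layer_map holds one layer index per pixel, with 0 as the nearest layer.
    slider is the index of the deepest layer that should stay visible.
    """
    return [[layer <= slider for layer in row] for row in layer_map]


def apply_mask(image, mask, fill=0):
    """Replace hidden pixels with a fill value, leaving visible ones untouched."""
    return [
        [pixel if keep else fill for pixel, keep in zip(image_row, mask_row)]
        for image_row, mask_row in zip(image, mask)
    ]


if __name__ == "__main__":
    # Hypothetical 2x3 grayscale image and its per-pixel layer indices.
    image = [[200, 180, 90], [210, 95, 40]]
    layer_map = [[0, 0, 1], [0, 1, 2]]

    # Slider at 0 shows only the foreground; moving it to 1 reveals the middle layer.
    for slider in (0, 1):
        print(slider, apply_mask(image, visible_mask(layer_map, slider)))
```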

Alternative methods include selecting an object for focus within an image by clicking or tapping on it directly. With light field data, layers assigned to these objects can be reproduced in various states of focus, or calculated focusing distance, in relation to other layers (foreground and background). In some scenarios, layers can be deleted altogether.

Apple's image editing program also incorporates the usual assortment of photo adjustment settings like contrast, brightness and color correction. Bundled into one app, users can manipulate photo properties alongside depth of field, focus, layer selection and other advanced features specific to light field imaging.

Put into the context of recent events, today's filing is especially interesting. On Tuesday, Apple confirmed, albeit through its usual non-answer comment, the purchase of Israel-based LinX Computational Imaging Ltd., a firm specializing in "multi-aperture imaging technology." Some estimate the deal to be worth roughly $20 million.

What Apple plans to do with LinX technology is unclear, but it wouldn't be a stretch to connect the IP with a light field camera system. In the near term, however, Apple is more likely to apply its newly acquired multi-lens camera solutions to capturing traditional photos at high resolutions using small form-factor components.

Apple's light field imaging software patent application was first filed in October 2013 and credits Andrew E. Bryant and Daniel Pettigrew as its inventors. According to what is believed to be Pettigrew's LinkedIn profile, he has since left his post as Image Processing Specialist at Apple for the same position at Google.

Mikey Campbell


15 Comments

Very cool report. The Lytro technology has the potential to change electronic imaging forever. tl;dr: you can focus a picture **after** you take it. That would work well on a phone, tablet or watch (and other unrealized future products).

Yes! This is what I was hoping for. This is the future of Photography IMHO. Post editing RAW images will be a whole new ball of wax. This is very big people!

p.s. I hope Apple is granted this patent and has a slew more in the works. Google and Scamsung will be hard at work and willing to spend years in court to sell a rip off no doubt, all the while we will have years of Gatorguy posting prior art and spurious articles showing Google invented this already.

This is really huge.

FWIW, Google could just buy Lytro, although I don't see how that would align with ad sales.

"Someone" is trolling me pretty hard today. At least the third attempt in the last several hours but I don't plan to play along so no need to continue diverting thread discussions with **egregious trolling.** http://arstechnica.com/staff/2011/09/announcing-increased-moderation-of-trolls-in-discussion-threads/

Interesting patent, but the connection with the LinX technology is not obvious, since LinX does not use light field techniques.