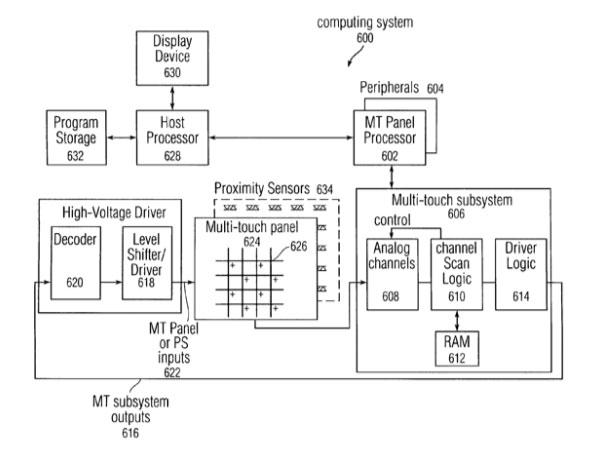

More specifically, the electronics maker believes the combination of these two different types of sensors — multi-touch and proximity — could be used to detect the presence of one or more fingers, body parts or other objects hovering above a touch-sensitive surface.

The detection of fingers, palms or other objects hovering near the touch panel is desirable, Apple says, because it can enable the computing system to perform certain functions without necessitating actual contact with the touch panel, such as turning the entire touch panel or portions of the touch panel on or off, turning the entire display screen or portions of the display screen on or off, powering down one or more subsystems in the computing system, enabling only certain features, dimming or brightening the display screen, and so forth.

"Additionally, merely by placing a finger, hand or other object near a touch panel, virtual buttons on the display screen can be highlighted without actually triggering the 'pushing' of those buttons to alert the user that a virtual button is about to be pushed should the user actually make contact with the touch panel," the filing says. "Furthermore, the combination of touch panel and proximity (hovering) sensor input devices can enable the computing system to perform additional functions not previously available with only a touch panel."

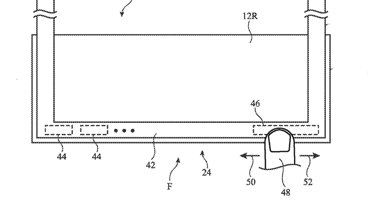

The proximity sensors could be composed of IR transmitters for transmitting IR radiation, and IR receivers for receiving IR radiation reflected by a finger or another object in proximity to the panel. To detect the location of touch events at different positions relative to the panel, multiple IR receivers can be placed along the edges of the touch-screen's blackened display bezel, like those on the latest iMacs and MacBooks, the company says.

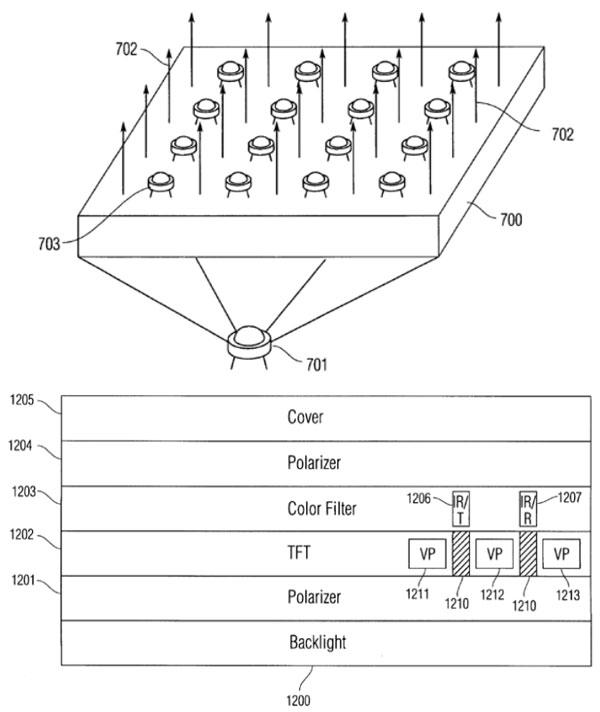

However, the July 2008 filing primarily focuses on an "integrated photodiode matrix," or proximity panel that includes a two-dimensional grid of multiple IR transmitters and a grid of multiple IR receivers.

For example, a grid of IR receivers can be placed on the panel behind a multi-touch screen, allowing each IR receiver to serve as a "proximity pixel" indicating the presence or absence of an object in its vicinity and, in some cases, the distance between the receiver and the object. Data received from multiple receivers in a panel could then be processed to determine the positioning of one or more objects above the panel.
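The filing does not spell out how readings from the receiver grid would be combined, so the following is only an illustrative sketch of one plausible approach: treating each "proximity pixel" as a reflected-IR intensity and taking an intensity-weighted centroid. The function name `locate_object`, the 0-to-1 intensity scale and the `threshold` value are all assumptions made for this example, not details from Apple's application.

```python
def locate_object(readings, threshold=0.2):
    """Estimate the (row, col) position of a single hovering object.

    readings:  2D list of reflected-IR intensities, one per receiver
               (hypothetical 0.0-1.0 scale, higher means closer).
    threshold: hypothetical cutoff below which a receiver is ignored.

    Returns the intensity-weighted centroid of all receivers at or above
    the threshold, or None when no receiver detects anything.
    """
    total = 0.0
    row_sum = 0.0
    col_sum = 0.0
    for r, row in enumerate(readings):
        for c, value in enumerate(row):
            if value >= threshold:
                total += value
                row_sum += r * value
                col_sum += c * value
    if total == 0.0:
        return None
    return (row_sum / total, col_sum / total)


# A finger hovering between the first two receivers of the top row.
grid = [
    [0.5, 0.5, 0.0],
    [0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0],
]
position = locate_object(grid)
```

A single centroid only handles one object at a time; tracking several fingers at once, as the filing envisions, would require first grouping neighboring active receivers into separate clusters.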

"The transmitters and receivers can be positioned in a single layer, or on different layers. In some embodiments, the proximity panel is provided in combination with a display," Apple explained. "The display can be, for example, a liquid crystal display (LCD) or an organic light emitting diode display (OLED display). Other types of displays can also be used. The IR transmitters and receivers can be positioned at the same layer as the electronic elements of the display (e.g., the LEDs of an OLED display or the pixel cells of an LCD display). Alternatively, the IR transmitters and receivers can be placed at different layers."

The 30-page filing is credited to Apple employees Steve Hotelling, Brian Lynch, and Jeffrey Bernstein.

Sam Oliver

10 Comments

Um...nevermind...the joke is too easy

I know that Apple does not follow through with many of these patents, but I am personally convinced that this is why they have moved to glass displays across their product line.

Large touch screens right now are a bit gimmicky and I think it will take time for people to seriously consider them as a means of input for desktop devices. But if anyone can make it catch on, it'll be Apple and not HP.

I know that Apple does not follow through with many of these patents, but I am personally convinced that this is why they have moved to glass displays across their product line.

No doubt. This would be cool for the MacBook lines of laptops for the glass glidepads. HP's touch sensitive monitors have limited use but Apple's ideas are more practical in using the glidepad. Hopefully this will make it into next year's systems.