A new batch of Apple patent applications published on Thursday by the U.S. Patent and Trademark Office shows Apple is still working on virtual and augmented reality technology, with filings covering a multi-resolution system for creating VR content, headset concepts, and a 3D document editing system.

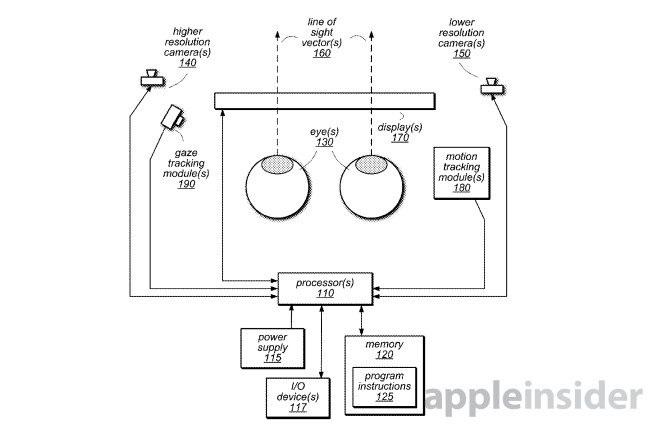

The "Predictive, Foveated Virtual Reality System," filed on September 21, 2017, is intended as a way to produce VR and AR content, and to show it to the user, with minimal latency. The large amount of data required to show high-quality imagery in VR can cause lag, which may cause users to suffer from headaches, nausea, and eyestrain. Apple adds that the usual solution of upgrading the equipment would be costly.

Apple's solution is to reduce the amount of data being piped through to the user's VR headset in a selective way. At the time of rendering, the proposed system will output at two different resolutions, with one high-quality image showing detail accompanied by a lower-quality version.

The filing also suggests this could be employed with a multi-camera setup, using cameras configured to produce high- and low-resolution imagery.

In theory, the higher-resolution image would be shown in areas the user is focused on, monitored by gaze-tracking modules. The rest of the picture is made up of the lower-resolution image, working on the principle that a user's peripheral vision is unable to pick up on fine details, aided by the user's attention being focused on the high-resolution areas.
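The compositing idea can be illustrated with a minimal Python sketch. It is not taken from the filing: the function names, the block-averaging downsample, and the circular gaze region are all assumptions made for illustration, and a real renderer would produce the two resolutions directly rather than deriving one from the other.

```python
from math import hypot

def downsample(image, factor):
    """Simulate a low-resolution render by averaging factor x factor blocks."""
    height, width = len(image), len(image[0])
    low = []
    for by in range(0, height, factor):
        row = []
        for bx in range(0, width, factor):
            block = [image[y][x]
                     for y in range(by, min(by + factor, height))
                     for x in range(bx, min(bx + factor, width))]
            row.append(sum(block) / len(block))
        low.append(row)
    return low

def composite_foveated(high, low, factor, gaze, radius):
    """Use high-res pixels within `radius` of the gaze point, low-res elsewhere.

    `gaze` is an (x, y) pixel position; the circular region is an assumption.
    """
    gx, gy = gaze
    out = []
    for y, high_row in enumerate(high):
        out_row = []
        for x, pixel in enumerate(high_row):
            if hypot(x - gx, y - gy) <= radius:
                out_row.append(pixel)                        # full detail
            else:
                out_row.append(low[y // factor][x // factor])  # coarse block
        out.append(out_row)
    return out

# Hypothetical 8x8 grayscale frame, with the user looking near the top-left.
frame = [[float(x + 8 * y) for x in range(8)] for y in range(8)]
low = downsample(frame, 4)
result = composite_foveated(frame, low, 4, gaze=(2, 2), radius=1.5)
```

In this sketch only the pixels near the gaze point carry full detail, so the low-resolution layer needs one value per 4x4 block rather than sixteen.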

The "Predictive" element involves the system analyzing the user's head and eye movements to work out what areas of the picture will need to be in higher resolution.
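One simple way to picture the prediction step is linear extrapolation from recent gaze samples. The sketch below is an assumption for illustration only; the filing is not described as using this method, and `predict_gaze`, the sample format, and the millisecond units are hypothetical.

```python
def predict_gaze(samples, lookahead):
    """Extrapolate the gaze position `lookahead` time units past the last sample.

    `samples` is a list of (time, x, y) tuples, oldest first. The velocity is
    estimated from the two most recent samples only.
    """
    if len(samples) < 2:
        raise ValueError("need at least two gaze samples")
    (t0, x0, y0), (t1, x1, y1) = samples[-2], samples[-1]
    dt = t1 - t0
    if dt <= 0:
        raise ValueError("samples must have increasing timestamps")
    vx = (x1 - x0) / dt
    vy = (y1 - y0) / dt
    return (x1 + vx * lookahead, y1 + vy * lookahead)

# Hypothetical samples: time in milliseconds, position in pixels.
history = [(0, 100.0, 50.0), (10, 104.0, 50.0)]
predicted_x, predicted_y = predict_gaze(history, lookahead=20)
```

A renderer could then center the high-resolution region on the predicted point rather than the last measured one, which is where the latency saving would come from.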

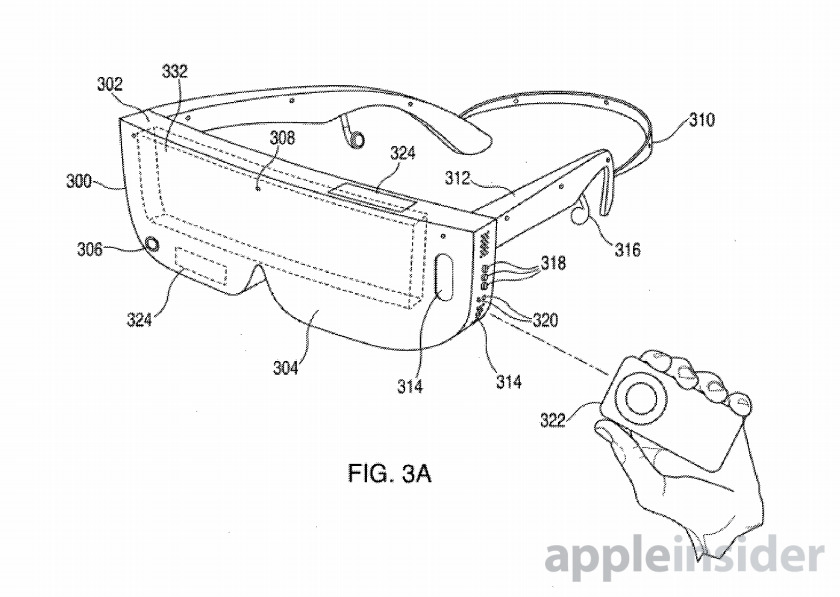

Filed on November 15 last year, the "Head-Mounted Display Apparatus for Retaining a Portable Electronic Device with Display" is a headset that users can slot a smartphone into. Images illustrating the concept depict shade-style glasses that are thick enough to accept an iPhone, slid in from one side.

The application retreads ground already covered by earlier Apple filings, including one that was granted to Apple in 2016, and even references a related visor-based patent from 2008. The repeated filings are certainly a sign that Apple is still interested enough in the concept to work on it, but aside from rumors, the firm has yet to progress to producing such a device.

Inside the mounting section shown in today's filing is a Lightning connector that lets the iPhone communicate with the headset's components, with the screen lining up with the user's eyes. The headset includes space for a small camera to show the view in front of the user, potentially for AR applications. The eye section also includes speakers and buttons on the side, with earbuds on the arms to provide audio and a separate remote providing extra controls.

The application explains the headset has an advantage over wired versions that connect to computers, in that it does not need to be tethered to other hardware to work, using the iPhone to render images and to display them.

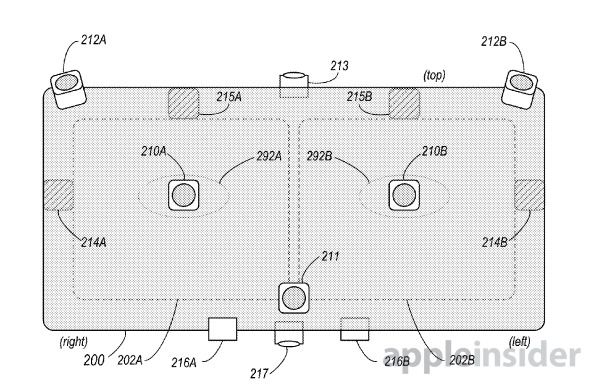

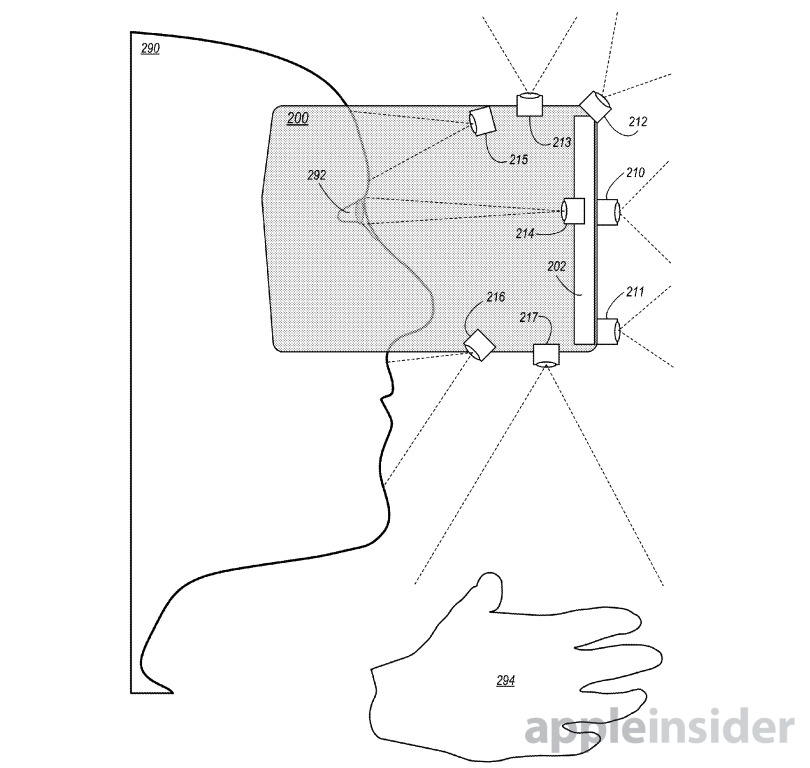

The connected "Display System Having World and User Sensors," filed on September 22, 2016, describes a mixed reality system using a head-mounted display, capable of providing 3D virtual views of the environment augmented with virtual content. While it looks like a typical VR headset in the mould of the Oculus Rift, Apple's version apparently relies a lot more on sensors than its potential rival.

On the outside are sensors used to collect information about the user's environment, including multiple live video views, depth information, and lighting data. At the same time, other sensors will detect the user's head movement, gaze, expressions, and hand gestures, which can be interpreted as user inputs for the system.

By capturing video feeds of what each eye would see if unobstructed by the headset, the system is able to create a form of augmented reality image that combines virtual objects with the live feed, which is then shown to the user. Such a system would be useful for both AR and VR purposes, though unlike the previously mentioned patent application, it would most likely need to be connected to a host to function.
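Combining a virtual layer with a live camera frame is commonly done with alpha blending, and a minimal sketch of that general technique follows. The filing is not described at this level of detail, so the function names, the RGB tuple format, and the use of `None` for "no virtual content" are all assumptions.

```python
def blend_pixel(live, virtual, alpha):
    """Blend one RGB pixel: alpha=1.0 shows only virtual, 0.0 only live."""
    return tuple(round(alpha * v + (1 - alpha) * l)
                 for l, v in zip(live, virtual))

def composite(live_frame, virtual_frame):
    """Overlay virtual content on a live frame of the same size.

    Each virtual entry is either None (no virtual content at that pixel)
    or an (rgb, alpha) pair.
    """
    out = []
    for live_row, virtual_row in zip(live_frame, virtual_frame):
        row = []
        for live, overlay in zip(live_row, virtual_row):
            if overlay is None:
                row.append(live)  # pass the camera pixel through untouched
            else:
                rgb, alpha = overlay
                row.append(blend_pixel(live, rgb, alpha))
        out.append(row)
    return out

# Hypothetical 1x2 frame for one eye: the second pixel has a half-transparent overlay.
live_left = [[(100, 100, 100), (100, 100, 100)]]
virtual_left = [[None, ((200, 200, 200), 0.5)]]
shown_left = composite(live_left, virtual_left)
```

In a headset of the kind described, the same step would run once per eye, each with its own camera feed and its own view of the virtual object.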

Originally filed on September 20, 2016, the "3D Document Editing System" defines the way a document could be edited in 3D space, using a VR or AR device, a keyboard, and a gesture recognition system. Apple writes that by using a 3D-based system instead of the traditional 2D software used today, users will be able to make native 3D documents, including text effects that take advantage of the extra dimension.

In its basic form, the system is fairly similar to a normal 2D-based text editor, except the screen is suspended in the air in front of the user. As well as using the X and Y axes when writing text or adding other data, gestures can be used to move areas of the document forward on the Z axis, bringing them closer to the user than the rest of the document.

This can be used to make some elements more prominent to the viewer than others, to draw attention to that section. For example, web links could be brought forward on the document to make it easier for a reader to access while using gestures or a controller.
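As a rough illustration of the idea, a document element could carry a depth value alongside its usual position. The sketch below is hypothetical: the `Element` type, its fields, and the convention that a larger `z` means closer to the reader are assumptions, not details from the application.

```python
from typing import NamedTuple

class Element(NamedTuple):
    text: str
    x: float
    y: float
    z: float = 0.0        # assumed convention: larger z is closer to the reader
    is_link: bool = False

def bring_forward(elements, predicate, depth):
    """Return a new list with matching elements moved `depth` units closer."""
    return [e._replace(z=e.z + depth) if predicate(e) else e
            for e in elements]

# Hypothetical document: one paragraph and one web link on the same plane.
document = [
    Element("Apple files many patents.", x=0.0, y=0.0),
    Element("uspto.gov", x=0.0, y=1.0, is_link=True),
]
raised = bring_forward(document, lambda e: e.is_link, depth=0.2)
```

Here only the link moves toward the reader, while the body text stays on the original plane.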

Apple regularly applies for patents, filing ideas with the USPTO tens or hundreds of times a week. The listings detailed today are only a handful of the 128 patent applications published in a single day.

In many cases, Apple files the idea but does not commercialize the concept. As a result, there is no guarantee the ideas in these patent applications will make an appearance in a future product or service.

Malcolm Owen

