Future iPhones and iPads could work without the user touching the display, with Apple exploring the possibility of using the proximity of fingers or the Apple Pencil to the screen to enable selections and gestures in situations where touching the device isn't desirable.

Touchscreens are, as the name suggests, operated by touching the display, and are simple to use for the vast majority of smartphone and tablet users. There are times when touching the screen is best avoided, such as when the user is cooking and has dirty hands. The increased functionality of digital assistants like Siri may help mitigate this problem, but voice control simply cannot compete with interacting with the device directly using fingers.

There is also the possibility of creating enhanced gestures that do not rely on constant contact with the display. The ability to hover fingers above the screen in concert with touches could result in entirely new types of gestures and controls.

However, while hover-based interfaces have been around for a while in a limited fashion, they don't work seamlessly with traditional touch interfaces. Learning two different types of interface controls through effectively the same medium may confuse some users.

Granted to Apple by the US Patent and Trademark Office on Tuesday, the patent for "Devices, methods, and user interfaces for interacting with user interface objects via proximity-based and contact-based inputs" aims to solve issues in both of those areas.

In Apple's solution, one or more sensors detect the proximity of an input object, such as a stylus or a finger, above a touch-sensitive surface, as well as the intensity of its contact with the display.

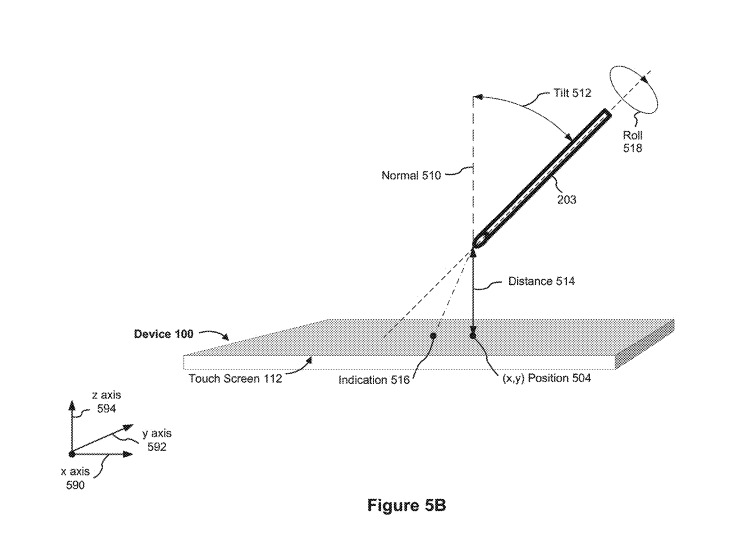

What the patent suggests could be read by detecting the stylus, and what it would interpret for positioning

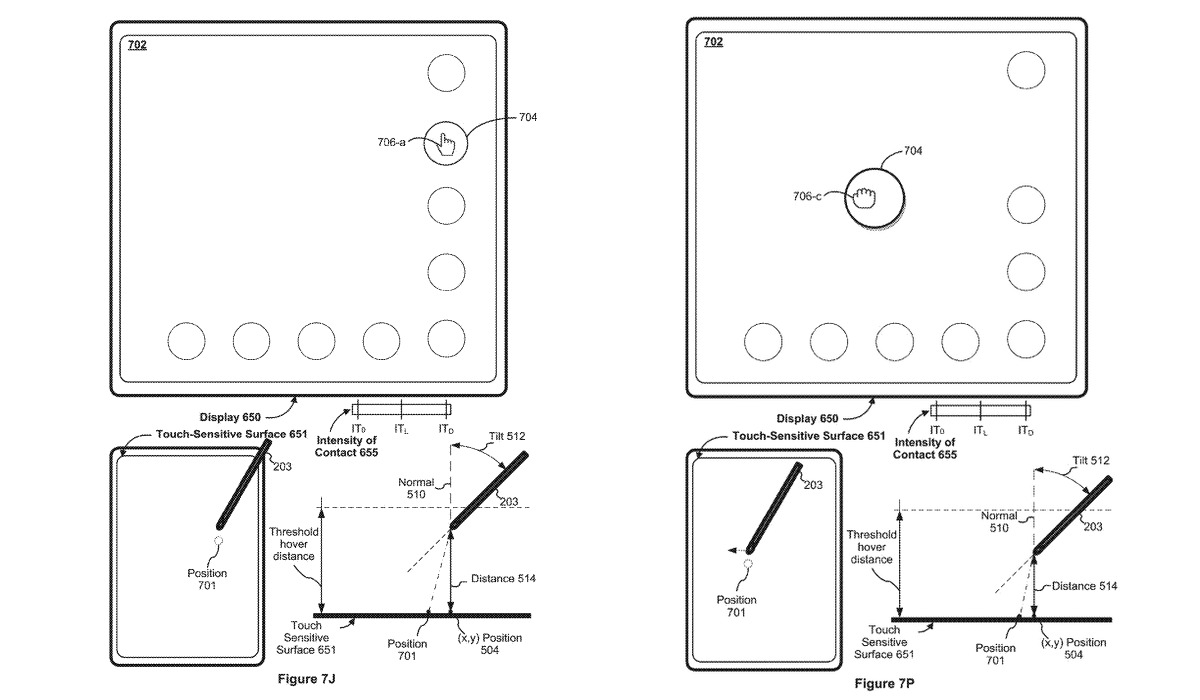

Detecting a hovering object isn't new, but Apple's patent leans more towards how a user interface could respond to such an object than towards simply detecting its presence. This includes how far the stylus or finger is from the display, the angle of the stylus, its position, and when it contacts the display with a specific intensity or pressure.

Depending on the current state and previous actions taken, the interface can react and present different options to the user.

For example, the interface may only take a user's actions into account when the stylus is within a certain range of the display. Entering that range could prompt icons to appear in the region of the screen the stylus is hovering over, ready for the user's selection. Pressing one of these newly added interface elements with the stylus could then trigger other actions.

The intensity or pressure of a press could also be interpreted as different actions, with harder presses counting as a separate selection or altering the options further, much like 3D Touch on some iPhone models. The duration of a press can also be measured and used for input, with long presses triggering different actions from a short tap.
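To make the combination of hover range, press intensity, and press duration more concrete, here is a minimal Python sketch of how a single stylus reading might be mapped to an action. The class, enum, and function names and all three thresholds are hypothetical and chosen purely for illustration; the patent does not publish values, and this is not Apple's implementation or a UIKit API.

```python
from dataclasses import dataclass
from enum import Enum

# Hypothetical thresholds for illustration only; the patent gives no such values.
HOVER_RANGE_MM = 10.0        # stylus closer than this counts as hovering
DEEP_PRESS_INTENSITY = 0.6   # normalized contact intensity, 0.0 to 1.0
LONG_PRESS_SECONDS = 0.5     # contact held at least this long is a long press


class Action(Enum):
    IGNORE = "ignore"
    SHOW_ICONS = "show_icons"
    TAP = "tap"
    LONG_PRESS = "long_press"
    DEEP_PRESS = "deep_press"


@dataclass
class StylusSample:
    distance_mm: float  # 0.0 means the tip is touching the surface
    intensity: float    # normalized contact intensity, 0.0 while hovering
    duration_s: float   # how long the current contact has lasted


def classify(sample: StylusSample) -> Action:
    """Map one hypothetical stylus reading to an interface action."""
    if sample.distance_mm > HOVER_RANGE_MM:
        # Out of range: the interface ignores the stylus entirely.
        return Action.IGNORE
    if sample.distance_mm > 0.0:
        # Hovering within range: reveal icons beneath the stylus.
        return Action.SHOW_ICONS
    # In contact: a harder press takes priority over a longer one.
    if sample.intensity >= DEEP_PRESS_INTENSITY:
        return Action.DEEP_PRESS
    if sample.duration_s >= LONG_PRESS_SECONDS:
        return Action.LONG_PRESS
    return Action.TAP
```

For instance, `classify(StylusSample(5.0, 0.0, 0.0))` returns `Action.SHOW_ICONS`, while a light contact held for a second returns `Action.LONG_PRESS`. Checking intensity before duration is one design choice among several; a real system could just as plausibly weigh them differently.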

The patent also suggests that interface objects could move around to track the hovering position of the stylus or finger, with contact with the screen again used for selection. By continuously monitoring the hovering position, the system could let users perform more complex actions as gestures.

For example, a user could use a grabbing motion with their fingers to cut text or images from a document, then, with their fingers still bunched together, move their whole hand to another part of the document. Throughout, an on-screen cursor would move to show where the item could be deposited, based on where the hand is hovering. The user could then reverse the motion to release the cut content and paste it where the cursor is located, or do the same by pulling the stylus out of proximity range.
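The grab, move, and release sequence behaves like a small state machine. The Python sketch below models it under simplifying assumptions: the document is a list of content blocks, and the hover position has already been converted to an insertion index. The class and method names are hypothetical, invented for this illustration; they are not drawn from the patent or any Apple API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HoverCutAndPaste:
    items: List[str]              # hypothetical document model: one entry per block
    held: Optional[str] = None    # content currently "in hand"
    cursor: Optional[int] = None  # insertion point shown while hovering

    def grab(self, index: int) -> None:
        """Pinch over an item: cut it and start tracking the hand."""
        if self.held is None:
            self.held = self.items.pop(index)
            self.cursor = index

    def hover_move(self, index: int) -> None:
        """Hand moves while still pinched: the cursor follows the hover position."""
        if self.held is not None:
            # Clamp so the cursor always points at a valid insertion slot.
            self.cursor = max(0, min(index, len(self.items)))

    def release(self) -> None:
        """Reverse the pinch: paste the held content at the cursor."""
        if self.held is not None and self.cursor is not None:
            self.items.insert(self.cursor, self.held)
            self.held = None
            self.cursor = None

    # Assumption: leaving proximity range pastes exactly like releasing.
    leave_range = release
```

Usage: starting from `["a", "b", "c", "d"]`, calling `grab(0)`, `hover_move(2)`, then `release()` leaves `["b", "c", "a", "d"]`. Treating `leave_range` as an alias for `release` follows the article's description; an actual interface might instead cancel the move in that case.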

Apple also suggests similar hovering mechanics could be used to vary a visual characteristic of the position indicator, to indicate range to the user, or to alter elements of the interface itself.

The publication of a patent is not a guarantee that Apple will bring the concepts it describes to future products or services, but it does show areas of interest for the company's research and development efforts.

This is not the first time Apple has looked into hover-based interactions. Among others, a patent from 2016 suggested the use of in-line proximity sensors near keyboards and trackpads, while one from 2010 specifically related to a touchscreen proximity sensor.

In a 2019 patent, Apple also looked into how force-sensing gloves could enhance input, such as by detecting gestures and how physical objects are gripped. Continuing the theme, another 2019 patent application proposed finger-worn devices that lightly squeeze the sides of the finger when the user presses, giving tactile feedback when typing on a touchscreen.

Malcolm Owen


5 Comments

Oh lord no. I type and stop to think, type and stop to think. The LAST thing I want is my fingers accidentally triggering something because I held them within a half an inch of the screen for a few seconds.

EDIT: I just reread the article and it's talking about the Apple Pencil gaining proximity gestures. Not that that would make any difference. I draw, and think, and draw and think. It would be the same problem. Something getting triggered accidentally while the tip is close to the surface.

Anyone recall the passage from Hitchhiker's Guide about a gesture sensitive interface? "For years radios had been operated by means of pressing buttons and turning dials; then as the technology became more sophisticated the controls were made touch-sensitive--you merely had to brush the panels with your fingers; now all you had to do was wave your hand in the general direction of the components and hope. It saved a lot of muscular expenditure, of course, but meant that you had to sit infuriatingly still if you wanted to keep listening to the same program."

Good on Apple to include features that have been around the Android world for quite a while...see for instance the Galaxy Note Pro which had hovering stylus support for aeons... https://www.youtube.com/watch?v=43MfWGGz8UE

Similar to the amazing announcement of now being able to pair a mouse on iPad...as has also been possible on Android for aeons (and not just on tablets, but phones) https://www.youtube.com/watch?v=unln6OQjq0g

Or "desktop-class" browser with full keyboard support...oh, why bother ;)