Apple's acquisition of Xnor.ai suggests that the machine learning tools developed by the company may appear natively on iPhones and iPads in the future, with processing on-device instead of in the cloud.

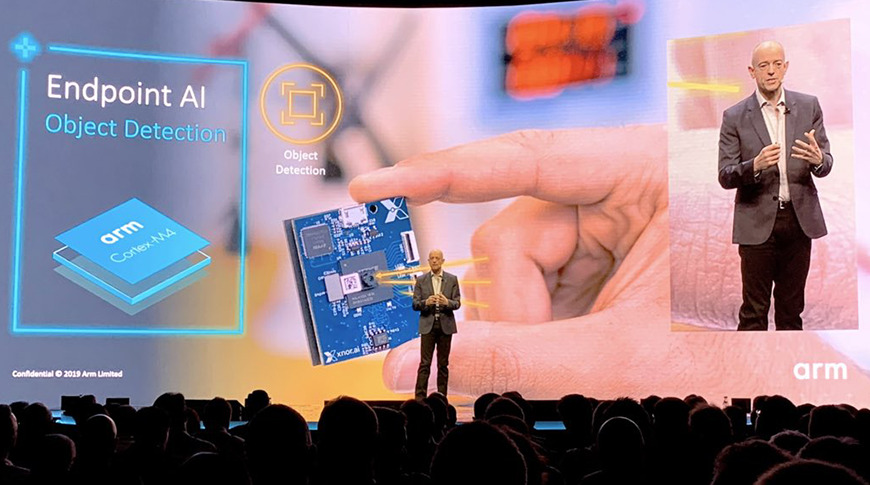

The company in question is Seattle-based Xnor.ai, a three-year-old startup that specializes in low-power, edge-based artificial intelligence. Its technology can run on hardware ranging from smartphones, IoT devices, cameras, and drones to embedded low-power mobile CPUs.

Xnor.ai's tagline is, or was, "AI Everywhere, for everyone," a goal the company pursued with machine learning and image recognition tools that run on-device rather than relying on processing in the cloud.

Edge-based computing seeks to move data storage and basic computational tasks out of the cloud and back onto the device. The goal is to improve response times and save bandwidth. Edge-based AI would allow for faster apps, since requests don't need a round trip to a remote server, and would send significantly less data over the network. Keeping data off the cloud also makes for a more secure system, because less of it is transmitted or stored on third-party servers.

Xnor.ai has also created a self-service platform that makes it easier for software developers to incorporate AI-centric code and data libraries into their apps.

According to an anonymous source who spoke to GeekWire, Apple paid $200 million for the company. Apple paid a similar $200 million for another Seattle-based AI startup, Turi, in 2016.

Forbes had ranked Xnor.ai 44th on its list of America's most promising AI companies, praising the company for developing a standalone AI chip that could run for years on solar power or a coin-sized battery.

Amber Neely


9 Comments

A thorough description of edge computing here: https://en.m.wikipedia.org/wiki/Edge_computing

This is an area they cannot afford not to be in, and other players have already made it clear they are there too.

A wise direction to take IMO, although I would imagine it is to flesh out other work already in progress.

Latency and privacy are the two main reasons to have some things handled locally on the device. Latency is especially relevant for 'AI' on things like cars.

From the image, it looks like it uses ARM too.