Apple is researching how to blend virtual objects more seamlessly into the real world with "Apple Glass," and how to correctly display AR at the periphery of a wearer's view.

Following many previous reports regarding the image quality of "Apple Glass," the company continues to research just what — and when — a wearer will see Apple AR. Three newly-revealed patent applications all concentrate on the issues of mixing virtual objects with real ones.

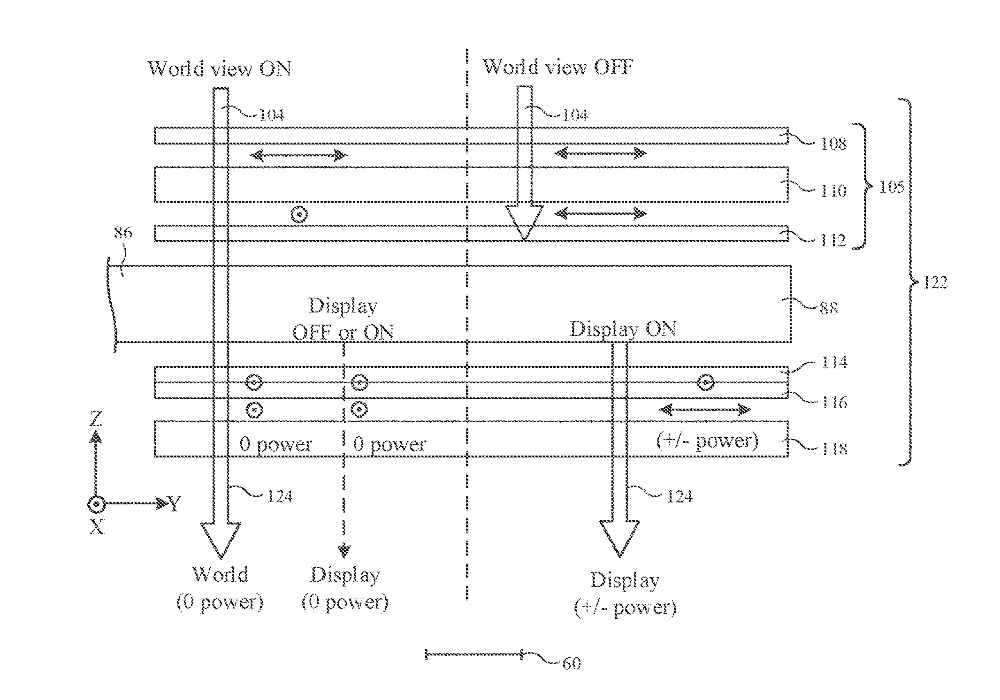

"Display System With Time Interleaving," is concerned with the image quality, and the speed, of mixing AR, VR, and the real world.

"Challenges can arise in providing satisfactory optical systems for merging real-world and display content," says Apple. "If care is not taken, issues may arise with optical quality and other performance characteristics."

Although the application does not use the word "speed," it is aimed at making sure there is no lag between a virtual object and a real one. One way Apple proposes to do this is to have "Apple Glass" show its virtual images in time with the real environment.

"The optical system may use time interleaving techniques and/or polarization effects to merge real-world and display images," continues Apple. "Switchable devices such as polarization switches and tunable lenses may be controlled in synchronization with frames of display images."

To synchronize like this, "Apple Glass" could have sensors that include "three-dimensional sensors," LiDAR, or "three-dimensional radio-frequency sensors." They would be tied in to further sensors that register where a user's eyes are focused.

"[For example,] a gaze tracking system [could be] based on an image sensor and, if desired, a light source that emits one or more beams of light that are tracked using the image sensor after reflecting from a user's eyes," says Apple.

A second newly-revealed patent application, "Display Systems With Geometrical Phase Lenses," is close to identical to the first, and is credited to the same three inventors. It has the same overall aim, but it concentrates less on the timing aspects of synchronization, and more on the use of what Apple describes as "geometrical phase lenses."

These are like a regular lens — or an array of them — except that they are polarized to allow only certain light to pass. A lens can be made up of multiple layers of different polarizations, and Apple says this means the optical system can be "adjusted in synchronization with alternating image frames".

The goal is for "Apple Glass" to "place display images at different respective focal plane distances" from the wearer's eyes.
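As a rough illustration of the time-interleaving idea, the sketch below assigns each display frame to a lens state in round-robin order, so alternating frames land on different focal planes. It is not taken from the patent applications: the `LensState` type, the function names, the two focal distances, and the 120Hz refresh rate are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LensState:
    """Hypothetical state of a switchable lens (names and values are assumed)."""
    name: str
    focal_distance_m: float


def interleave_schedule(states: List[LensState], num_frames: int) -> List[LensState]:
    """Assign each display frame a lens state in round-robin order."""
    if not states:
        raise ValueError("at least one lens state is required")
    return [states[i % len(states)] for i in range(num_frames)]


def per_plane_rate(display_hz: float, num_planes: int) -> float:
    """Effective refresh rate of each focal plane when frames are shared equally."""
    if num_planes <= 0:
        raise ValueError("num_planes must be positive")
    return display_hz / num_planes


# Assumed example values: two focal planes on a 120Hz display.
near = LensState("near", 0.5)
far = LensState("far", 3.0)
schedule = interleave_schedule([near, far], 4)
rate = per_plane_rate(120.0, len({s.name for s in schedule}))
```

Under these assumptions the frames alternate near, far, near, far, and each focal plane is refreshed at half the display rate. That trade-off is one reason the timing between the display and any switchable lens would matter.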

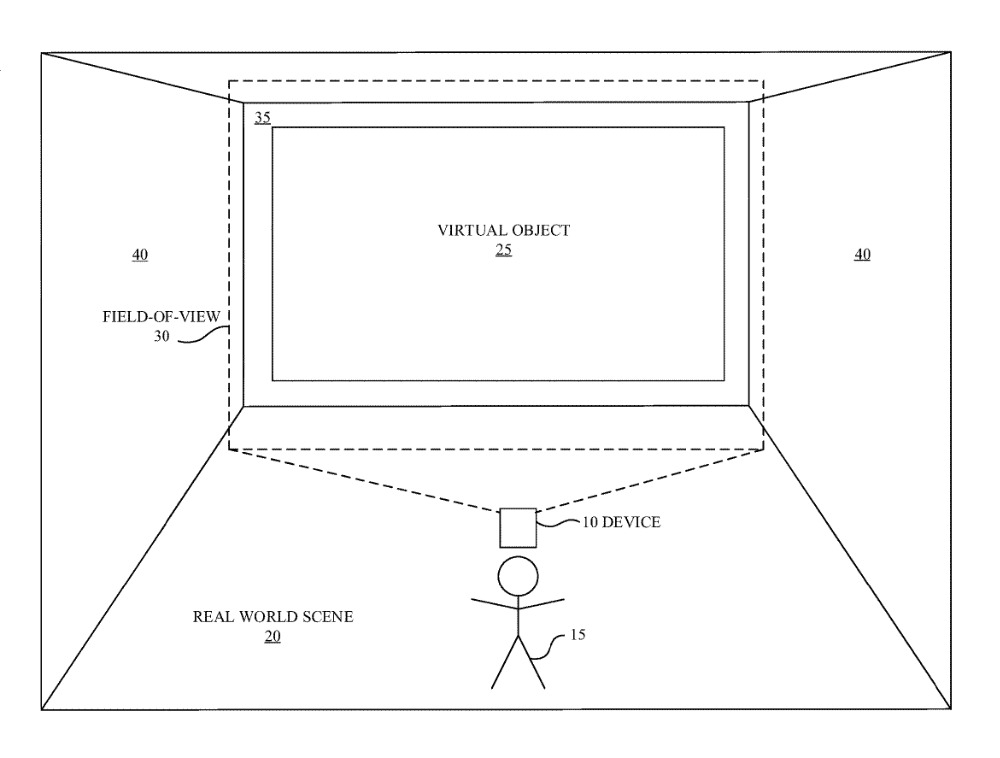

A third newly-revealed patent application takes this issue of different focal distances, and applies it to how you see virtual objects in the periphery of your vision.

"Virtual content may be difficult to view at peripheral portions of a display," [says Apple in "Small Field of View Display Mitigation Using Transitional Visuals."](<http://appft.uspto.gov/netacgi/nph-Parser?Sect1=PTO2&Sect2=HITOFF&u=%2Fnetahtml%2FPTO%2Fsearch-adv.html&r=46&p=1&f=G&l=50&d=PG01&S1=(apple.AANM.+AND+20210218.PD.)&OS=aanm/apple+and+pd/2/18/2021&RS=(AANM/apple+AND+PD/20210218)>)

"For example, the virtual content may be clipped or cut-off as the virtual content approaches a peripheral edge of the display," it continues. "[The] limited dynamic range of the transparent display may make seeing or understanding the virtual content difficult at the limits of the optical see-through display's field of view."

Apple's proposal is to fade virtual objects in and out as they enter or leave the user's field of view (FOV). "Apple Glass" will have to be "aware of the FOV of the device," which means both knowing where the bezels are, and which way a user has turned their head.

By knowing what should be displayed where, the device can prevent objects being "clipped," or cut off.

"For example, by presenting 'transition-in' and 'transition-out' behaviors as the visuals approach the FOV limit," continues Apple, "(e.g., scaling in/out, fading in/out, app logo to application launch, application sleep to wake, polygon flipping, materialization/dematerialization, etc.)."

Virtual objects on the periphery of a user's vision could fade in or out, rather than be unnaturally cut off.

"[So] the visuals never reach the peripheral edges of the FOV and the edges of the FOV are hidden from the user," says the patent application.
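One simple way to picture the fading behavior is as an opacity value that drops smoothly to zero as an object nears the edge of the display's field of view. The sketch below is only an illustration under stated assumptions: the function name, the angles, the width of the fade band, and the choice of a smoothstep curve are not from the patent application.

```python
def edge_fade_alpha(angle_deg: float, half_fov_deg: float, fade_band_deg: float) -> float:
    """Opacity in [0, 1] for content at an angular offset from the view center.

    Content is fully opaque in the middle of the view, fades across a band
    just inside the FOV limit, and is fully transparent at or beyond the limit.
    All parameters are hypothetical; the curve is a standard smoothstep.
    """
    if half_fov_deg <= 0 or not (0 < fade_band_deg <= half_fov_deg):
        raise ValueError("expected 0 < fade_band_deg <= half_fov_deg")
    distance_to_edge = half_fov_deg - abs(angle_deg)
    t = max(0.0, min(1.0, distance_to_edge / fade_band_deg))
    return t * t * (3.0 - 2.0 * t)  # smoothstep easing


# Assumed example: a 40-degree-wide view with a 5-degree fade band.
center_alpha = edge_fade_alpha(0.0, 20.0, 5.0)
mid_band_alpha = edge_fade_alpha(17.5, 20.0, 5.0)
edge_alpha = edge_fade_alpha(20.0, 20.0, 5.0)
```

With these assumed numbers, an object at the center is fully visible, one halfway through the fade band is half transparent, and one at the edge has disappeared before it could be clipped.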

The sole credited inventor on this patent application is Luis R. Deliz Centeno, whose previous related work includes research into gaze-tracking in "Apple Glass."

William Gallagher


3 Comments

To tune the focus so that it could deal with prescriptions would be awesome.

Put on Apple Glass, now see Apple Car.

This kind of thing is coming.

https://mobile.twitter.com/domhofdesign/status/1359587098043568133

Well, I hope Apple Glass can do this. You don't even need to have a laptop. It could just be an empty table, and you can use a virtual keyboard, virtual this or that, and the computing core could be your phone, a Mac mini, and maybe even the Glass itself.