Apple's research team working on Apple Intelligence has released two new small but high-performing language models, developed as part of an effort to improve the datasets used to train AI models.

Apple's Machine Learning team is taking part in the open-source DataComp for Language Models project alongside others in the industry. The two models Apple has recently produced are competitive with, and in some cases beat, other leading open models such as Llama 3 and Gemma.

DataComp for Language Models (DCLM) is a testbed for improving the data used to train language models like the ones behind ChatGPT. It provides a standard framework, including a fixed model architecture, training parameters, and evaluations, so that researchers can compare different ways of filtering datasets to produce higher-quality training data.

I am really excited to introduce DataComp for Language Models (DCLM), our new testbed for controlled dataset experiments aimed at improving language models. 1/x pic.twitter.com/uNe5mUJJxb

— Vaishaal Shankar (@Vaishaal) June 18, 2024

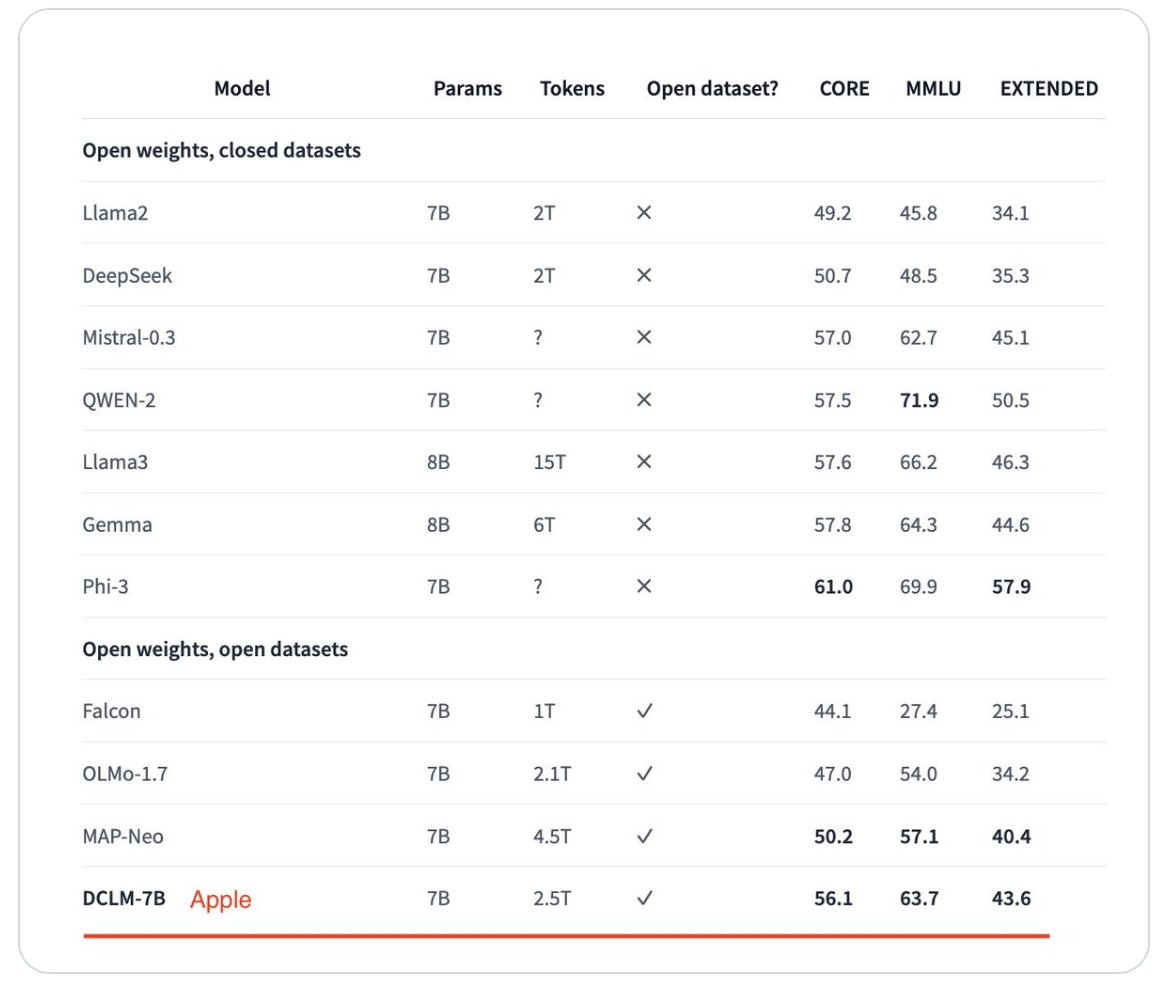

Apple's submission to the project includes two models: a larger one with seven billion parameters and a smaller one with 1.4 billion parameters. Apple's team said the larger model outperformed the previous top open-data model, MAP-Neo, by 6.6 percentage points on the MMLU benchmark.

More remarkably, the Apple team's DataComp-LM model required 40 percent less computing power to train than MAP-Neo. It was the best-performing model among those trained on open datasets, and competitive against those trained on private datasets.

Apple has made its models fully open: the dataset, model weights, and training code are all available for other researchers to work with. Both the larger and smaller models scored well enough on the Massive Multitask Language Understanding (MMLU) benchmark to be competitive against commercial models.

In debuting both Apple Intelligence and Private Cloud Compute at its WWDC conference in June, the company silenced critics who had claimed that Apple was behind the industry on artificial intelligence applications in its devices. Research papers from the Machine Learning team published before and after that event proved that the company is in fact an AI industry leader.

The models Apple's team has released are not intended for use in any future Apple products. They are community research projects meant to demonstrate more effective ways of curating the small and large datasets used to train AI models.

Apple's Machine Learning team has previously shared research with the wider AI community. The datasets, research notes, and other assets can all be found on Hugging Face (HuggingFace.co), a platform dedicated to expanding the AI community.

