Apple had to achieve what Craig Federighi says were breakthroughs so that Apple Intelligence could use large language models in the cloud while still protecting users' privacy. Here's what Apple did.

During the "It's Glowtime" event, Apple talked about Private Cloud Compute as the way that user privacy is protected. Apple aims for as much of Apple Intelligence as possible to work entirely on device, but there are also user requests that will require sending data to cloud services like ChatGPT.

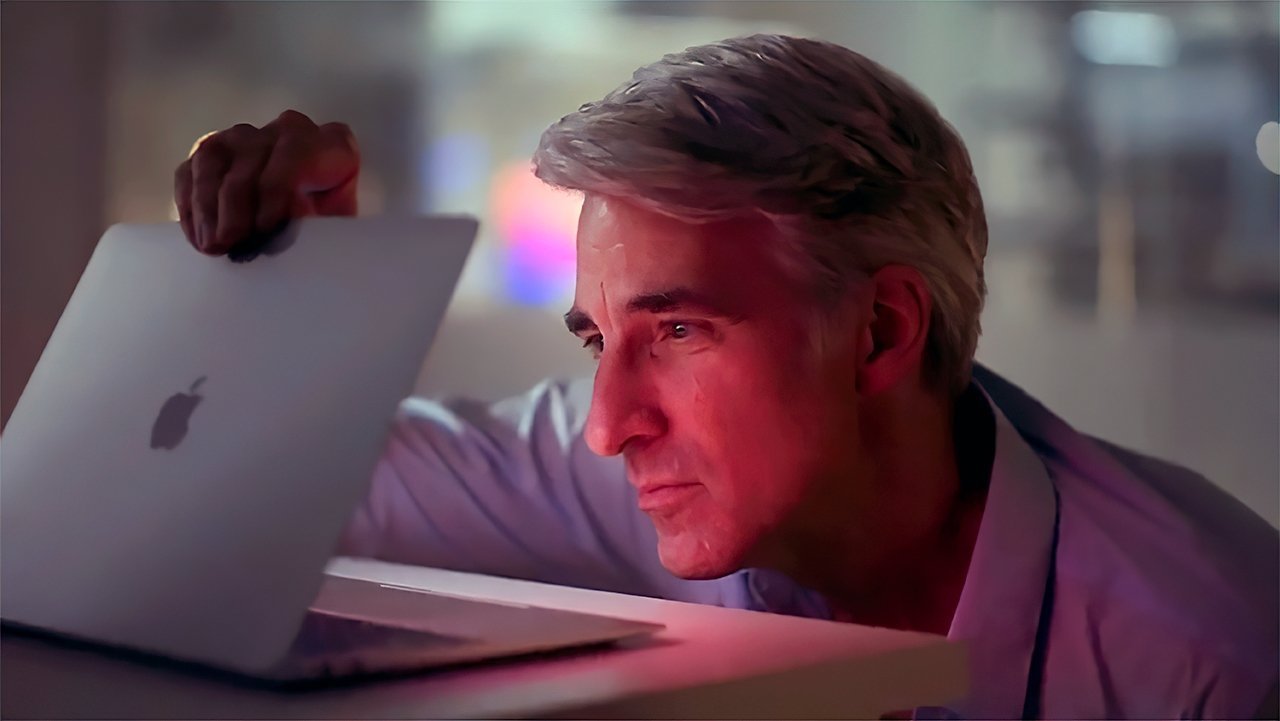

"We set out from the beginning with a goal of how can we extend the kinds of privacy guarantees that we've established with processing on-device with iPhone to the cloud— that was the mission statement," Apple's senior vice president of software engineering, Craig Federighi, said in an interview with Wired. "It took breakthroughs on every level to pull this together, but what we've done is achieve our goal."

Users will always be prompted to approve that an Apple Intelligence request is going to use ChatGPT; it won't ever happen in the background. But when such a request is approved, it's likely that ChatGPT will need access to data that is ordinarily held encrypted on device.

For instance, any request that asks Apple Intelligence to look through photos or emails will mean exposing that data to ChatGPT.

"What was really unique about the problem of doing large language model inference in the cloud was that the data had to at some level be readable by the server so it could perform the inference," said Federighi. "And yet, we needed to make sure that that processing was hermetically sealed inside of a privacy bubble with your phone."

"So we had to do something new there," he continued. "The technique of end-to-end encryption — where the server knows nothing — wasn't possible here, so we had to come up with another solution to achieve a similar level of security."

The solution, which Apple calls Private Cloud Compute, required developing new servers that an iPhone can be certain are trustworthy. It also involved changing how those servers handle the data so that, for instance, it isn't retained once a request has been dealt with.

"Building Apple Silicon servers in the data center when we didn't have any before, building a custom OS to run in the data center was huge," says Federighi. "[Creating] the trust model where your device will refuse to issue a request to a server unless the signature of all the software the server is running has been published to a transparency log was certainly one of the most unique elements of the solution - and totally critical to the trust model."

" I think this sets a new standard for processing in the cloud in the industry," he continues. And following all of the development work, he says that the "rollout of Private Cloud Compute has been delightfully uneventful."

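The trust model Federighi describes, where a device refuses to send a request unless every piece of the server's software appears in a published transparency log, can be pictured with a short Python sketch. This is an illustration only, not Apple's implementation: the names `measurement`, `Attestation`, `send_request`, and `UntrustedServerError` are hypothetical, a plain SHA-256 digest stands in for a real software signature, and the log is modeled as a simple set.

```python
import hashlib
from dataclasses import dataclass


def measurement(software_image: bytes) -> str:
    """Hypothetical stand-in for a software signature: a SHA-256 digest."""
    return hashlib.sha256(software_image).hexdigest()


@dataclass(frozen=True)
class Attestation:
    """What a server presents in this sketch: one digest per component it runs."""
    component_digests: tuple


class UntrustedServerError(Exception):
    """Raised when the device declines to talk to a server."""


def send_request(payload: str, attestation: Attestation, transparency_log: set) -> str:
    """Device-side check: only send data if every component is publicly logged."""
    # An empty attestation proves nothing, so treat it as untrusted.
    if not attestation.component_digests:
        raise UntrustedServerError("server presented no software measurements")

    # Collect any component whose digest was never published to the log.
    missing = [d for d in attestation.component_digests if d not in transparency_log]
    if missing:
        raise UntrustedServerError(f"{len(missing)} component(s) not in transparency log")

    # Assumption: the real transport and encryption are out of scope here.
    return f"sent: {payload}"


# Example: the log lists two published releases (made-up contents).
published_log = {measurement(b"os-1.0"), measurement(b"model-server-1.0")}

trusted = Attestation((measurement(b"os-1.0"), measurement(b"model-server-1.0")))
print(send_request("summarize my notes", trusted, published_log))

tampered = Attestation((measurement(b"os-1.0"), measurement(b"unpublished-build")))
try:
    send_request("summarize my notes", tampered, published_log)
except UntrustedServerError as err:
    print("refused:", err)
```

In this sketch, a server running even one unpublished component gets no data at all, because the check happens on the device before anything is sent. A real system would also need hardware-backed proof that the server is actually running what it claims, which this example does not model.
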
Apple Intelligence won't launch in Europe

The rollout may have been uneventful on the technical side, but Apple Intelligence has had a bigger problem in Europe. Apple has said that Apple Intelligence will not be available in the EU because, in its view, the territory's Digital Markets Act is unclear.

During the "It's Glowtime" presentation, Federighi did commit to Apple localizing Apple Intelligence in France during 2025. In the new interview, he goes further.

"Our goal is to bring ideally everything we can to provide the best capabilities to our customers everywhere we can," he said. "But we do have to comply with regulations, and there is uncertainty in certain environments we're trying to sort out so we can bring these features to our customers as soon as possible."

"So, we're trying," he concludes.

William Gallagher


6 Comments

Translation: EU=FAFO, no AI4U.

F the EU. Their crazy, undefinable laws. They will get features from Apple, Microsoft and Google maybe never. Good luck with that plan.

Regulation can never keep up with technology. A cold dead hand on innovation.