Recent advances in AI and the release of ChatGPT have sparked new interest in AI as a tool. Agentized LLMs are the latest attempt to build highly specialized AIs while keeping them from going rogue.

AI is all the rage today, with people and organizations rushing to adopt AI for greater efficiency and profit. But one nagging concern still lingers in the AI world, and it has only grown more worrisome over time: alignment.

AI alignment refers to the process of designing and implementing AI systems so that they conform to human goals, values, and desired outcomes. In other words, alignment is concerned with making sure AI doesn't go rogue.

This is a nascent field in AI, and researchers and developers are only now starting to recognize its importance. Fears of AI getting out of control and potentially harming or destroying humanity are behind the drive for better AI alignment.

Breaking up AI tasks with composition

One way to achieve AI alignment while preserving accuracy is composition, a concept borrowed from software engineering in which a program is built by assembling existing components into an app or suite.

In practice, the term alignment usually comes up when training Large Language Models (LLMs) on a specific knowledge domain, and when retraining those models periodically as they begin to drift off course.

The idea behind composition in AI is to break a model's work into subtasks, each focused on one thing. The overall software checks in with each subtask periodically to make sure it is performing its function, and only its function.

Focusing each subtask on a single job, and keeping every subtask and model aligned with the desired goals, can make the overall AI system more reliable and accurate.
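To make the composition idea concrete, here is a minimal Python sketch under loudly stated assumptions: the `SubtaskAgent` class, its tag-based scope check, and the `orchestrate` function are all hypothetical, and the lambdas stand in for real model calls, which the article does not specify. Each agent handles one narrow job, and the orchestrator checks every output against that agent's declared scope before accepting it.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SubtaskAgent:
    """A narrowly focused component; `run` is a stub standing in for a model call."""
    name: str
    allowed_topics: set[str]    # the only topic tags this agent may produce
    run: Callable[[str], str]

    def in_scope(self, output: str) -> bool:
        # Crude scope check (assumption for this sketch): outputs are expected to
        # begin with a "topic:" tag, and that tag must be one of the allowed topics.
        topic = output.split(":", 1)[0].strip().lower()
        return topic in self.allowed_topics


def orchestrate(task: str, agents: list[SubtaskAgent]) -> dict[str, str]:
    """Run every subtask agent and accept only outputs that stay within its scope."""
    results: dict[str, str] = {}
    for agent in agents:
        output = agent.run(task)
        if agent.in_scope(output):
            results[agent.name] = output
        else:
            # Off-scope output is rejected instead of being passed downstream.
            results[agent.name] = "REJECTED: out of scope"
    return results


if __name__ == "__main__":
    summarizer = SubtaskAgent("summarizer", {"summary"}, lambda t: "summary: " + t[:20])
    # This agent drifts off its assigned job, so the orchestrator rejects its output.
    tagger = SubtaskAgent("tagger", {"tags"}, lambda t: "weather: sunny today")
    print(orchestrate("Quarterly sales rose 5 percent", [summarizer, tagger]))
```

A real system would need a far better scope check than a topic tag, but the shape is the point: each component is small, and the orchestrator, not the component, decides whether its output is acceptable.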

Reflection, or reflexion

One way to help AI models stay on target is to let them use reflection, in which a model or task periodically checks itself to ensure that what it is pursuing is aligned only with its goal. If a model or task begins to wander off-topic, the surrounding software can readjust it to keep it focused.
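A reflection loop can be sketched in a few lines of Python. This is an illustration under assumptions, not any specific published method: `reflect_and_revise` and `fake_model` are hypothetical names, the "model" is a stub, and the self-check is a simple keyword test. The loop generates a draft, checks it against the goal, and feeds the failed check back as guidance for the next attempt.

```python
from typing import Callable, Optional


def reflect_and_revise(
    goal_keywords: set[str],
    generate: Callable[[Optional[str]], str],
    max_rounds: int = 3,
) -> tuple[str, int]:
    """Generate a draft, check it against the goal, and retry with feedback.

    `generate` is a hypothetical stand-in for a model call: it receives the
    previous round's feedback (None on the first round) and returns a draft.
    `goal_keywords` are assumed to be lowercase.
    """
    feedback: Optional[str] = None
    draft = ""
    for round_no in range(1, max_rounds + 1):
        draft = generate(feedback)
        # Self-check: does the draft still address every goal keyword?
        missing = goal_keywords - set(draft.lower().split())
        if not missing:
            return draft, round_no
        # Reflection: turn the failed check into guidance for the next attempt.
        feedback = "Stay on topic and address: " + ", ".join(sorted(missing))
    # Still off target after max_rounds; the caller decides what to do next.
    return draft, max_rounds


if __name__ == "__main__":
    def fake_model(feedback: Optional[str]) -> str:
        # Wanders off-topic at first, then corrects course once it gets feedback.
        if feedback is None:
            return "Here is a recipe for pancakes"
        return "Battery life tips for the iPhone"

    print(reflect_and_revise({"battery", "iphone"}, fake_model))
```

The `max_rounds` cap is a deliberate choice: a model that never gets back on topic should be handed off to the surrounding software rather than retried forever.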

Task-driven autonomous agents

Since alignment aims for accuracy and enforced boundaries, and composition is a good way to achieve both, a natural next step is to build a system of agents, each of which becomes a domain expert on a particular subject.

Agentized LLMs and other general-purpose AI agents are already in development, some have already been released, and an entire AI agent ecosystem is springing up around them.

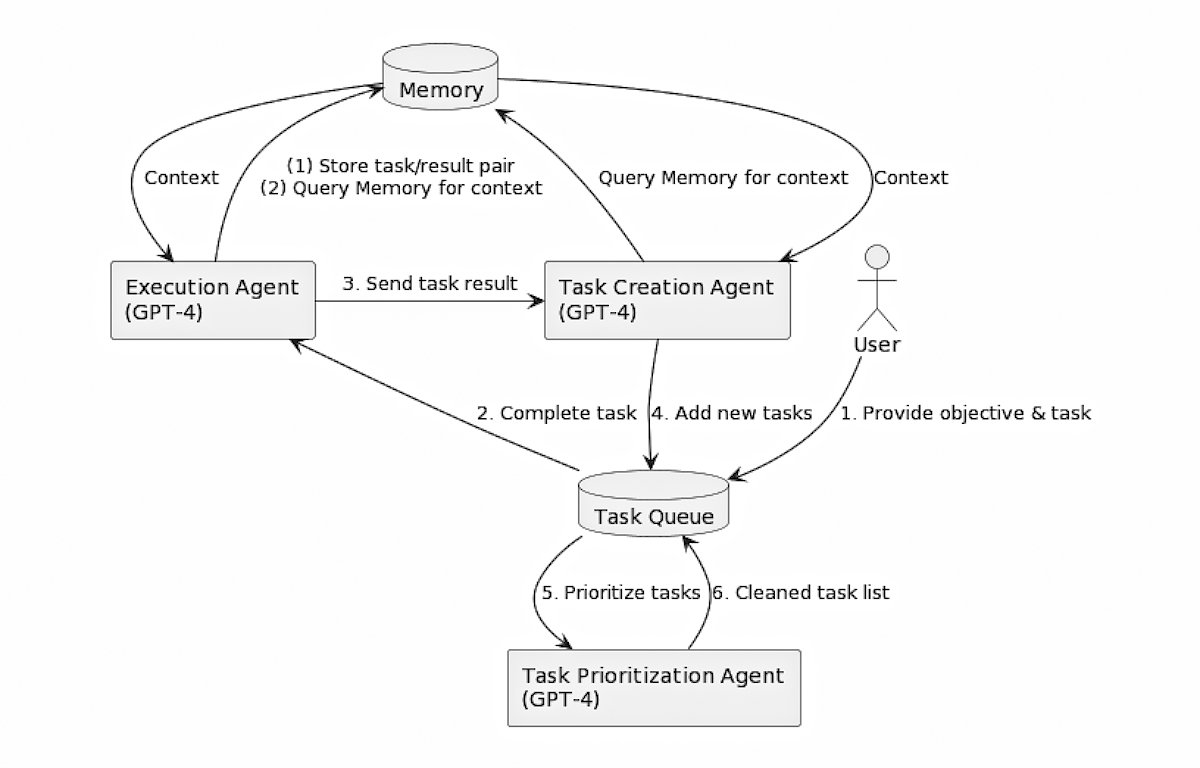

AI researcher Yohei Nakajima has published a paper on his blog titled "Task-driven Autonomous Agent Utilizing GPT-4, Pinecone, and LangChain for Diverse Applications".

LangChain is an open-source framework that helps developers build agentized LLM applications by composing reusable components.

Nakajima also has a blog post titled "Rise of the Autonomous Agent". His paper includes diagrams showing one possible way agentized LLM systems might work.
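The core loop of a task-driven agent can be sketched in Python. This sketch is only loosely modeled on the execute, create-new-tasks, reprioritize cycle in Nakajima's design; it is not his code. The GPT-4 calls are replaced by caller-supplied functions, a plain list stands in for the Pinecone vector database, and the names `run_task_loop`, `execute`, `create_tasks`, and `prioritize` are hypothetical.

```python
from collections import deque
from typing import Callable


def run_task_loop(
    objective: str,
    first_task: str,
    execute: Callable[[str, str, list[str]], str],
    create_tasks: Callable[[str, str, str], list[str]],
    prioritize: Callable[[list[str], str], list[str]],
    max_iterations: int = 5,
) -> list[tuple[str, str]]:
    """Execute a task, create follow-up tasks from its result, reprioritize, repeat.

    execute(objective, task, memory)      -> result text
    create_tasks(objective, task, result) -> new task descriptions
    prioritize(tasks, objective)          -> the same tasks, reordered
    """
    queue = deque([first_task])
    memory: list[str] = []  # plain list standing in for a vector database
    completed: list[tuple[str, str]] = []
    for _ in range(max_iterations):
        if not queue:
            break  # no tasks left to run
        task = queue.popleft()
        result = execute(objective, task, memory)
        memory.append(result)  # keep results as context for later tasks
        completed.append((task, result))
        done = {t for t, _ in completed}
        # Skip follow-up tasks that are already finished or already queued.
        new_tasks = [t for t in create_tasks(objective, task, result)
                     if t not in done and t not in queue]
        queue = deque(prioritize(list(queue) + new_tasks, objective))
    return completed


if __name__ == "__main__":
    plan = {"Research topic": ["Draft outline"], "Draft outline": ["Write summary"]}
    log = run_task_loop(
        objective="Write a short report",
        first_task="Research topic",
        execute=lambda obj, task, mem: "done: " + task,
        create_tasks=lambda obj, task, res: plan.get(task, []),
        prioritize=lambda tasks, obj: sorted(tasks),
    )
    for task, result in log:
        print(task, "->", result)
```

The `max_iterations` cap matters here: a task-creation step that keeps producing new work would otherwise loop indefinitely, which is exactly the kind of unbounded behavior alignment work tries to rule out.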

AI agent operating systems

e2b.dev has released E2B, which it describes as an "Operating System for AI Agents". The company has also published a list of "Awesome AI Agents" on GitHub, along with a repository of awesome SDKs for AI agents.

In the future, we may see AI systems that can be altered simply by swapping in different agents and LLMs until the desired, aligned outcome is achieved. AI agent operating systems may emerge to handle these tasks for us.
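The "swap components until it works" idea can be illustrated with a small Python sketch. Everything here is hypothetical: `find_working_config` is not part of any agent operating system, the planner and writer lambdas stand in for real agents or LLMs, and `accept` is an assumed check for the desired outcome. The function simply tries each pairing of components in turn and returns the first one whose output passes.

```python
from itertools import product
from typing import Callable, Optional


def find_working_config(
    planners: dict[str, Callable[[str], str]],
    writers: dict[str, Callable[[str], str]],
    task: str,
    accept: Callable[[str], bool],
) -> Optional[tuple[str, str, str]]:
    """Try each (planner, writer) pairing until the output passes `accept`."""
    for (p_name, plan), (w_name, write) in product(planners.items(), writers.items()):
        # Compose the two components: the writer works from the planner's output.
        output = write(plan(task))
        if accept(output):
            return p_name, w_name, output
    return None  # no combination produced an acceptable result


if __name__ == "__main__":
    planners = {"planner-a": lambda t: t.upper(), "planner-b": lambda t: t}
    writers = {"writer-x": lambda p: p + "!", "writer-y": lambda p: "Report: " + p}
    print(find_working_config(planners, writers, "sales are up",
                              lambda o: o.startswith("Report: sales")))
```

Exhaustive search only makes sense for a handful of components; the sketch is meant to show that when components are interchangeable, changing behavior becomes a matter of configuration rather than retraining.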

Additional resources

In addition to the resources mentioned above, check out the AI Tool Hub, in particular "Introduction to AI Alignment: Making AI Work for Humanity", as well as "The Importance of AI Alignment, explained in 5 points" at the AI Alignment Forum.

There's also a good introductory paper on AI alignment titled "Understanding AI Alignment Research: A Systematic Analysis" by Jan H. Kirchner, Logan Smith, Jacques Thibodeau, et al.

Another interesting web-based AI agent company to check out is Cognosys.

We'll have to wait and see what the future holds for AI and alignment, but work is already well underway to mitigate some of the risks AI may bring.

Chip Loder

