Known as head-tracking, the technology has existed and been implemented for some time. But a new patent application filed by Apple this week suggests the Mac maker could employ the method to give users greater interactivity with their computers.

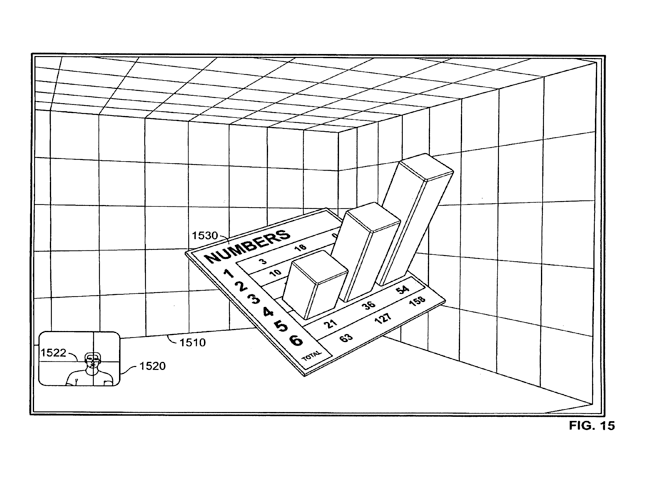

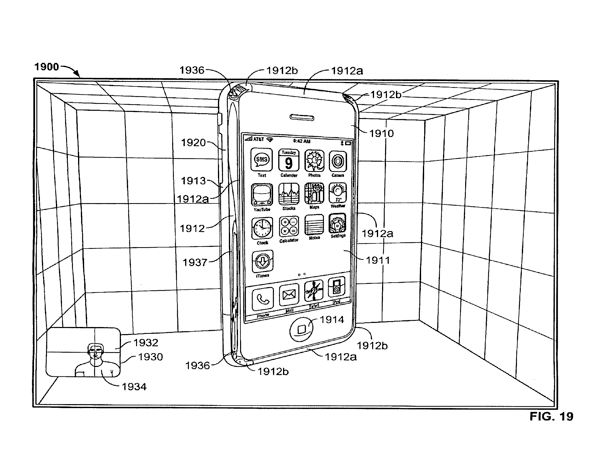

Through the use of a camera, the system could detect a user's position and adjust the image on screen accordingly. In addition, the system could take the camera's image of the user and overlay it onto an on-screen object, giving the impression of a "reflection" and creating a more immersive experience.

Apple noted that the conventional methods of interacting with 3D objects on screen, by using a mouse and keyboard, are not intuitive for most users. In addition, on-screen objects often lack realism because they do not interact with the living environment.

Apple's newly described technology would address that through the use of a camera or appropriate "sensing mechanism."

"Using the detected position of the user, the electronic device may use any suitable approach to transform the perspective of three-dimensional objects displayed on the display," the application reads. "For example, the electronic device may use a parallax transform by which three-dimensional objects displayed on the screen may be modified to give the user the impression of viewing the object from a different perspective."
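The application does not spell out the math behind a parallax transform, so the following is only a minimal sketch of one common approach, not Apple's method. It treats the display as a window: each 3D point is drawn where the line from the viewer's eye to that point crosses the screen plane. The function name `project_to_screen` and the coordinate conventions (screen at depth 0, scene depth increasing behind the screen, eye distance measured in front of it) are assumptions made for illustration.

```python
def project_to_screen(point, eye):
    """Project a 3D point onto the screen plane as seen from the eye.

    Assumed conventions (hypothetical, not from the patent application):
      point = (x, y, depth), depth >= 0 measured behind the screen
      eye   = (x, y, distance), distance > 0 measured in front of the screen
    Returns the (x, y) screen position where the point should be drawn.
    """
    px, py, depth = point
    ex, ey, distance = eye
    if distance <= 0:
        raise ValueError("the eye must be in front of the screen")
    if depth < 0:
        raise ValueError("this sketch only handles points behind the screen")

    # Fraction of the way from the eye to the point at which the
    # connecting line crosses the screen plane.
    t = distance / (distance + depth)
    return (ex + (px - ex) * t, ey + (py - ey) * t)


# A point on the screen surface stays put when the viewer moves right,
# while a point deeper in the scene shifts in the same direction.
on_surface = project_to_screen((0.0, 0.0, 0.0), (10.0, 0.0, 50.0))
deep_point = project_to_screen((0.0, 0.0, 50.0), (10.0, 0.0, 50.0))
print(on_surface, deep_point)
```

In this sketch, feeding the camera's estimate of the head position in as `eye` on every frame is what would make near and far objects slide against each other as the user moves.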

The technology would be capable of defining the visual properties of different surfaces and determining how well each would reflect light. Using this, images of the user and their environment could be recreated on the screen with effects added.

"Using this approach, surfaces with low reflectivity (e.g., plastic surfaces) may not reflect the environment, but may reflect light, while surfaces with high reflectivity (e.g. polished metal or chrome) may reflect both the environment (e.g. the user's face as detected by the camera) and light," the application states. "To further enhance the user's experience, the detected environment may be reflected differently along curved surfaces of a displayed object (e.g. as if the user were actually moving around the displayed object and seeing his reflection based on his position and the portion of the object reflecting the image."

It's a new level of virtual reality, meshing the real world with a nonexistent one, but giving the visual perception that an object is real. Apple is not alone in exploring this technology: Microsoft's "Project Natal" is an unreleased video game accessory that aims to use full-body tracking through the use of cameras to allow a user's physical actions to be translated into Xbox 360 games.

Programmer Johnny Chung Lee also gained attention in 2007 when he created a homemade head-tracking device using the infrared remote controller for the Nintendo Wii. The Carnegie Mellon University student created a simple interactive display that adjusted its picture based on the relative position of the user. A video of his technology has been viewed more than 7.6 million times on YouTube.

This week's patent application isn't the first time Apple has investigated different kinds of 3D technology. In 2008, the company applied for a patent on new display hardware that would employ autostereoscopy to produce 3D images viewable without head gear or glasses. Also in 2008, Apple revealed details on a possible 3D user interface for Mac OS X. The company has shown interest in a 3D gaming controller for the Apple TV as well.

A different kind of virtual reality, known as augmented reality, has proven popular in various applications for the iPhone. Enabled by the release of iPhone OS 3.1, the display of markers and content over live pictures from the iPhone camera relies on the autofocusing camera of the iPhone 3GS and its built-in compass. With them, the phone is capable of recognizing the direction it is facing and getting a detailed look at a subject to tag it with information.

Forecasting media player battery life

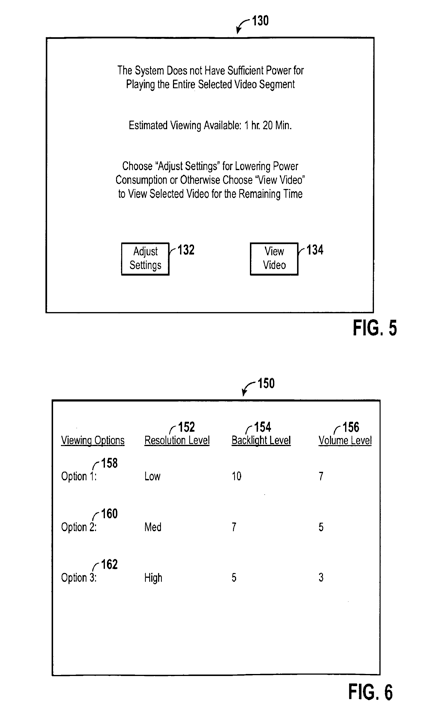

In another patent application, Apple has described a system that would calculate the amount of power needed to play content like a video, and determine whether there is enough left in the battery to complete the task. The system could then adjust its playback settings, either automatically or through user input, to reduce the amount of power needed to play the video in its entirety.

Apple said that with current technology, a user might begin playing a video without knowing that there is not enough power left in the battery to complete it. But those issues can usually be addressed from the beginning by adjusting picture resolution, video bit-rate, backlight strength, sound volume and other factors.

The "intelligent power management method" would take all of those into consideration when trying to maximize battery life and ensure that a video will be played in its entirety. The software would employ a "priority ranking" for video settings, which can be customized by the user.
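The application is described here only at a high level, so the following is a rough sketch of how a priority-ranked adjustment could work, not the method Apple describes. It estimates the energy needed for the full video, then lowers settings one at a time, starting with the one the user ranks as least important, until the estimate fits the remaining battery. The `Setting` class, the `plan_playback` function and all power figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Setting:
    name: str
    power_mw: float          # assumed draw at the current level
    reduced_power_mw: float  # assumed draw after lowering the setting
    priority: int            # higher means the user wants it lowered last


def plan_playback(settings, base_power_mw, battery_mwh, duration_h):
    """Return the names of settings to lower so the video can finish.

    Returns an empty list if no change is needed, or None if the battery
    cannot cover the video even with every setting lowered.
    """
    draws = {s.name: s.power_mw for s in settings}
    lowered = []

    def energy_needed():
        # Energy (mWh) = total power draw (mW) x playback time (h).
        return (base_power_mw + sum(draws.values())) * duration_h

    # Lower the least important settings first.
    for setting in sorted(settings, key=lambda s: s.priority):
        if energy_needed() <= battery_mwh:
            break
        draws[setting.name] = setting.reduced_power_mw
        lowered.append(setting.name)

    return lowered if energy_needed() <= battery_mwh else None


settings = [
    Setting("backlight", 400.0, 200.0, priority=1),
    Setting("resolution", 300.0, 200.0, priority=3),
    Setting("volume", 100.0, 50.0, priority=2),
]
# A two-hour video with 1,500 mWh left in the battery.
print(plan_playback(settings, base_power_mw=200.0, battery_mwh=1500.0, duration_h=2.0))
```

A real device would presumably measure power draw rather than rely on fixed figures, and could ask the user to confirm the plan before applying it.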

Neil Hughes

22 Comments

So this is a pseudo 3D achieved by perspective and lighting but still shown in 2D. I am still hoping we get genuine 3D one day. I can imagine moving back into the Finder to locate folders on shelves. I know this is not required with Spotlight, but it would be neat all the same.

You need a corporate team like Apple with a dynamic, demanding leader like Steve to be productive and profitable enough in these times to have the wherewithal and capacity to have this kind of forward vision and prediction.

I wish Apple had something like BumpTop. If you haven't seen it yet, go check it out! It is a desktop environment with 3D objects and realistic physics. Currently it is Windows only.

See a preview by Johnny Chung Lee:

http://johnnylee.net/projects/wii/

With a WiiMote, glasses with IR LEDs and the software, you can try out head-tracking.

That's awesome.