Apple invention uses 'faceprints' to identify people, objects

In a patent filing with the U.S. Patent and Trademark Office on Thursday, Apple outlined a system that analyzes the characteristics of an image's subject and uses that data to create a "faceprint," which can then be matched against other photos to determine the person's identity.

Curiously, most of the language in Apple's application for "Auto-recognition for noteworthy objects" focuses on "famous people and/or iconic images" rather than on a user's friends or family.

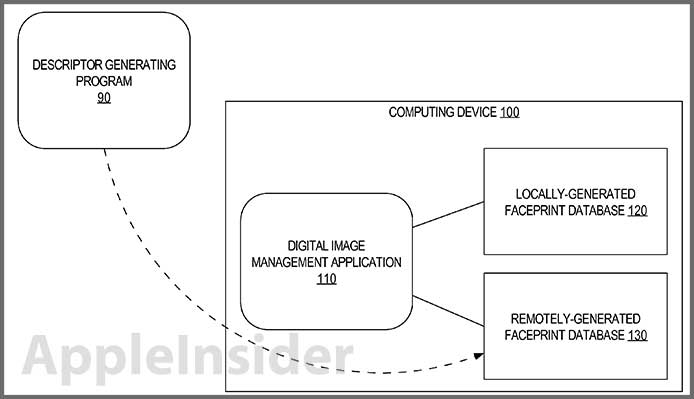

The invention calls for a digital image management application to analyze the subject of a given photo and create a "faceprint" of the person, using facial recognition to identify certain unique characteristics. The filing notes that a faceprint is "a subset of feature vectors that may be used for object recognition," which means the technique can also be applied to non-facial objects such as structures.

From the filing's background:

In order to automatically recognize a person's face that is detected in a digital image, facial detection/recognition software generates a set of features or a feature vector (referred to as a "faceprint") that indicate characteristics of the person's face. The generated faceprint is then compared to other faceprints to determine whether the generated faceprint matches (or is similar enough to) one or more of the other faceprints. If so, then the facial detection/recognition software determines that the person corresponding to the generated faceprint is likely to be the same person that corresponds to the "matched" faceprint(s).
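The filing does not say how faceprints are compared, so the following is only a minimal sketch of the idea described in the background passage, assuming each faceprint is a plain list of numbers and that "similar enough" means a cosine similarity at or above a fixed threshold. The function names and the 0.9 threshold are hypothetical choices for illustration, not Apple's method.

```python
import math
from typing import Dict, List, Sequence

# Assumption: a "faceprint" is modeled as a plain feature vector of floats.
Faceprint = Sequence[float]


def cosine_similarity(a: Faceprint, b: Faceprint) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("faceprints must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no usable features
    return dot / (norm_a * norm_b)


def find_matches(query: Faceprint, known: Dict[str, Faceprint],
                 threshold: float = 0.9) -> List[str]:
    """Return identities whose stored faceprint is 'similar enough' to the query,
    best match first. The 0.9 threshold is a hypothetical value."""
    scored = [(cosine_similarity(query, fp), name) for name, fp in known.items()]
    return [name for score, name in sorted(scored, reverse=True) if score >= threshold]


known = {"Tom Hanks": [0.9, 0.1, 0.3], "Someone Else": [0.1, 0.9, 0.2]}
print(find_matches([0.88, 0.12, 0.31], known))
```

Real systems typically use much longer vectors produced by a trained model; the three-element vectors here only keep the example readable.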

After a photo is analyzed, the management application compares its faceprint with other faceprints stored locally or remotely. If a match is found, the software tags the person with an identity, such as Tom Hanks. This stage relies on face recognition technology that assigns a reliability score to the faceprint, allowing for more accurate matches. If a score falls below a certain threshold, the system rejects the match.
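As a rough illustration of the threshold step, the sketch below picks the best-scoring candidate identity and rejects it if its reliability score is too low. It assumes scores range from 0.0 to 1.0 and uses a hypothetical cutoff of 0.8; the patent's actual scoring scheme is not described in the article.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Tag:
    identity: str
    reliability: float  # hypothetical 0.0-1.0 confidence score


def tag_photo(candidate_scores: Dict[str, float],
              threshold: float = 0.8) -> Optional[Tag]:
    """Attach the best-scoring identity, or reject the match if its score is too low."""
    if not candidate_scores:
        return None  # nothing to match against
    identity, score = max(candidate_scores.items(), key=lambda item: item[1])
    if score < threshold:
        return None  # below threshold: the match is rejected and the photo stays untagged
    return Tag(identity, score)


print(tag_photo({"Tom Hanks": 0.93, "Someone Else": 0.41}))
```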

Users can also provide feedback during this phase, confirming or rejecting a suggested identity with a green check or red "X" button, which helps refine the attached metadata.
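One plausible way to model the check-or-X feedback is shown below. It is a hypothetical sketch, assuming a confirmation marks the suggested tag as verified and a rejection removes it and remembers that identity so it is not suggested again; the field names are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class PhotoMetadata:
    suggested_identity: Optional[str] = None
    confirmed: bool = False  # set once the user presses the green check
    rejected_identities: Set[str] = field(default_factory=set)


def apply_feedback(meta: PhotoMetadata, accepted: bool) -> PhotoMetadata:
    """Green check -> accepted=True; red "X" -> accepted=False."""
    if meta.suggested_identity is None:
        return meta  # no suggestion to confirm or reject
    if accepted:
        meta.confirmed = True
    else:
        # Remember the rejection so the same identity is not suggested again.
        meta.rejected_identities.add(meta.suggested_identity)
        meta.suggested_identity = None
        meta.confirmed = False
    return meta


print(apply_feedback(PhotoMetadata("Tom Hanks"), accepted=False))
```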

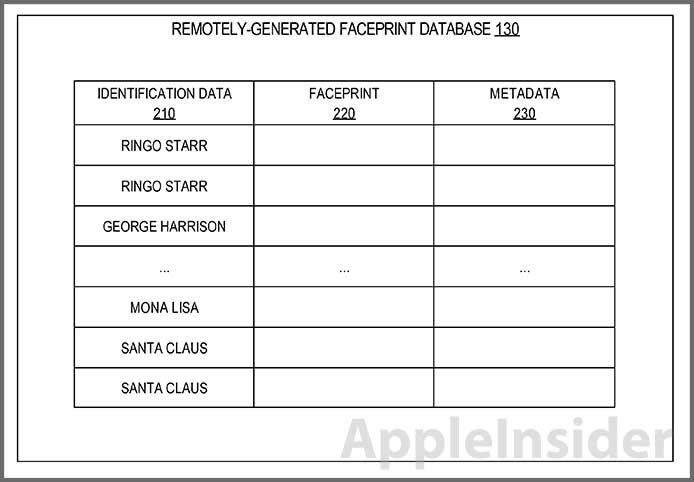

As for the database, the patent allows a device such as the iPhone to create and store faceprints locally or to pull them from an off-site cache. This remotely generated faceprint store can operate in the cloud, and can also be sent to the device or shipped with it. Images can be pre-processed and tagged with metadata to speed up facial recognition, but this step is not required for the system to function, since the remote system is integrated with the cloud.
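The local-versus-remote arrangement could look something like the sketch below: check the on-device store first, fall back to a remote source, and cache whatever comes back. The `fetch_remote` callable is a hypothetical stand-in for a cloud service, and `preload` models a faceprint bundle shipped with the device; neither is taken from the filing.

```python
from typing import Callable, Dict, List, Optional

Faceprint = List[float]


class FaceprintStore:
    """Looks up reference faceprints locally first, then falls back to a remote source.

    `fetch_remote` is a hypothetical stand-in for a cloud service that returns
    a faceprint for an identity, or None if it has none.
    """

    def __init__(self, fetch_remote: Callable[[str], Optional[Faceprint]]):
        self.local: Dict[str, Faceprint] = {}
        self.fetch_remote = fetch_remote

    def get(self, identity: str) -> Optional[Faceprint]:
        if identity in self.local:
            return self.local[identity]  # served from the on-device store
        remote = self.fetch_remote(identity)
        if remote is not None:
            self.local[identity] = remote  # cache on the device for next time
        return remote

    def preload(self, bundle: Dict[str, Faceprint]) -> None:
        """Load faceprints that were shipped with (or pushed to) the device."""
        self.local.update(bundle)
```

Caching remote results locally means repeated lookups avoid a network round trip, which is one reason a design like this might keep both stores.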

Additional features include the ability to group multiple faceprints together using metadata along with reliability scores. The patent gives the example of pictures of Paul McCartney taken over a number of decades. While his face looks markedly different from how it looked forty years ago, vetted metadata assigned to a pool of images can help sort photos of the legendary musician into a decade-by-decade retrospective.
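A simple version of the decade-by-decade grouping is sketched below, assuming each tagged photo carries a capture year from its metadata and a reliability score, and that low-confidence tags are filtered out before grouping. The 0.8 cutoff and the `TaggedPhoto` fields are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TaggedPhoto:
    filename: str
    identity: str
    year: int           # e.g. taken from capture-date metadata
    reliability: float  # hypothetical match confidence, 0.0-1.0


def decade_retrospective(photos: List[TaggedPhoto], identity: str,
                         min_reliability: float = 0.8) -> Dict[int, List[str]]:
    """Group one person's reliably tagged photos by decade, oldest decade first."""
    groups: Dict[int, List[str]] = defaultdict(list)
    for p in photos:
        if p.identity == identity and p.reliability >= min_reliability:
            groups[(p.year // 10) * 10].append(p.filename)  # 1964 -> 1960
    return dict(sorted(groups.items()))


photos = [
    TaggedPhoto("a.jpg", "Paul McCartney", 1964, 0.95),
    TaggedPhoto("b.jpg", "Paul McCartney", 1969, 0.90),
    TaggedPhoto("c.jpg", "Paul McCartney", 2012, 0.85),
    TaggedPhoto("d.jpg", "Paul McCartney", 1975, 0.30),  # too unreliable, skipped
]
print(decade_retrospective(photos, "Paul McCartney"))
# {1960: ['a.jpg', 'b.jpg'], 2010: ['c.jpg']}
```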

Going further, the metadata can be used to surface information about a subject, such as their Facebook page, Twitter feed or an iTunes Store link to their music.

While the exact purpose of the invention is unclear, the solution could extend beyond famous people and places and be used to intelligently identify subjects in a user's photo library for quick and easy tagging or file management.

Mikey Campbell

Chip Loder

Chip Loder

Andrew Orr

Andrew Orr

Marko Zivkovic

Marko Zivkovic

David Schloss

David Schloss

Malcolm Owen

Malcolm Owen

William Gallagher

William Gallagher