Apple's iPhone-based augmented reality navigation concept has 'X-ray vision' features

According to a pair of patent applications published on Thursday, Apple is investigating augmented reality systems for iOS capable of providing users with enhanced virtual overlays of their surroundings, including an "X-ray vision" mode that peels away walls.

Apple filed two applications with the U.S. Patent and Trademark Office, titled "Federated mobile device positioning" and "Registration between actual mobile device position and environmental model," both describing an advanced augmented reality solution that harnesses an iPhone's camera, onboard sensors and communications suite to offer a real-time world view overlaid with rich location data.

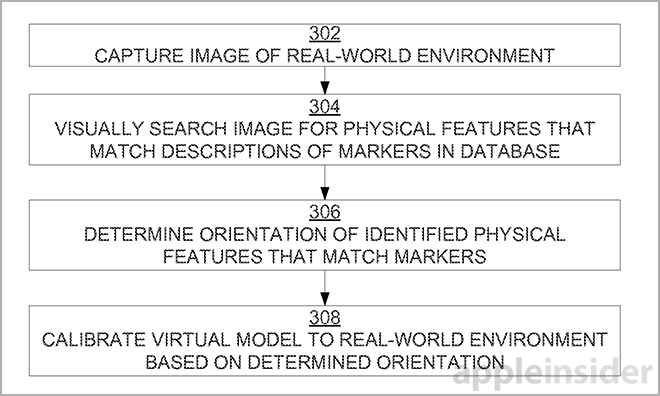

The system first uses GPS, Wi-Fi signal strength, sensor data or other information to determine a user's location. From there, the app downloads a three-dimensional model of the surrounding area, complete with wireframes and image data for nearby buildings and points of interest. Matching that digital representation to the real world is difficult with sensors alone, however.

To accurately place the model, Apple proposes the virtual frame be overlaid atop live video fed by an iPhone's camera. Users can align the 3D asset with the live feed by manipulating it onscreen through pinch-to-zoom, tap-and-drag and other gestures, providing a level of accuracy not possible through machine reckoning alone.

Alternatively, users can issue audible commands like "move left" and "move right" to match up the images. The wireframe can be "locked in" when a point or points are correctly aligned, thus calibrating the augmented view.
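As a rough illustration of the manual alignment described above, the sketch below keeps a simple 2D scale-and-offset for the wireframe and updates it from pinch, drag and voice input until the user locks it. It is not taken from the patent filings: the class name `OverlayAlignment`, its methods and the 5-pixel voice step are all hypothetical, and a real system would work with a full 3D pose rather than a flat screen transform.

```python
from dataclasses import dataclass

# Hypothetical mapping from spoken phrases to unit screen directions.
VOICE_DIRECTIONS = {"move left": (-1.0, 0.0), "move right": (1.0, 0.0)}


@dataclass
class OverlayAlignment:
    """Assumed 2D alignment state for a wireframe drawn over live video."""
    scale: float = 1.0
    offset_x: float = 0.0
    offset_y: float = 0.0
    locked: bool = False

    def pinch(self, factor: float, cx: float, cy: float) -> None:
        """Scale the overlay about the pinch centre (cx, cy), in screen pixels."""
        if self.locked or factor <= 0:
            return
        # Keep the point under the fingers fixed while the overlay scales.
        self.offset_x = cx + factor * (self.offset_x - cx)
        self.offset_y = cy + factor * (self.offset_y - cy)
        self.scale *= factor

    def drag(self, dx: float, dy: float) -> None:
        """Translate the overlay by (dx, dy) screen pixels."""
        if self.locked:
            return
        self.offset_x += dx
        self.offset_y += dy

    def voice(self, phrase: str, step_px: float = 5.0) -> None:
        """Nudge the overlay by a fixed step for a recognised phrase."""
        direction = VOICE_DIRECTIONS.get(phrase.strip().lower())
        if direction is not None:
            self.drag(direction[0] * step_px, direction[1] * step_px)

    def lock(self) -> None:
        """Freeze the alignment once the overlay matches the live view."""
        self.locked = True

    def to_screen(self, x: float, y: float) -> tuple:
        """Map a wireframe vertex (model units) to screen coordinates."""
        return (self.scale * x + self.offset_x, self.scale * y + self.offset_y)
```

Once `lock()` is called, further gestures are ignored, which mirrors the "locked in" state the filings describe.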

In yet another embodiment, the user can interact directly with the wire model by placing their hand into the live view area and "grabbing" parts of the virtual image, repositioning them with a special set of gestures. This third method requires object recognition technology to determine when and how a user's hand is interacting with the environment directly in front of the camera.

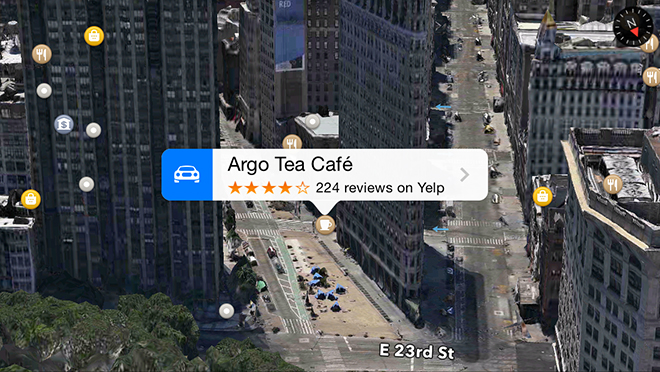

In addition to user input, the device can compensate for pitch, yaw and roll, as well as other movements, to estimate positions in space as associated with the live world view. Once a lock is made and the images are calibrated, the augmented reality program can stream useful location data to the user via onscreen overlays.
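To make the pitch, yaw and roll compensation more concrete, here is a minimal sketch of one common way to do it: build a rotation matrix from the three angles and apply its inverse to express a world-space direction in the device's frame. The Z-Y-X angle convention and the function names are assumptions chosen for illustration; the filings do not specify a particular formulation.

```python
import math


def rotation_matrix(yaw: float, pitch: float, roll: float) -> list:
    """Device-to-world rotation, assuming R = Rz(yaw) * Ry(pitch) * Rx(roll).

    Angles are in radians.
    """
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def world_to_device(vec: tuple, yaw: float, pitch: float, roll: float) -> tuple:
    """Express a world-space vector in the device frame.

    The inverse of a rotation matrix is its transpose, so this multiplies
    the vector by R transposed.
    """
    r = rotation_matrix(yaw, pitch, roll)
    return tuple(
        sum(r[row][col] * vec[row] for row in range(3)) for col in range(3)
    )
```

With the device turned a quarter turn about the vertical axis, for instance, a world direction along the y axis comes out along the device's own x axis, so the overlay can be redrawn to stay fixed to the scene as the phone moves.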

For example, an iPhone owner traveling to a new locale may be unfamiliar with their surroundings. Pulling up the enhanced reality app, they would be able to read names of nearby buildings and roads, obtain business information and, in some cases, "peel back" walls to view the interiors of select structures.

The "X-ray vision" feature was not fully detailed in either document aside from a note saying imaging assets for building interiors are to be stored on offsite servers. It can be assumed, however, that a vast database of rich data would be needed to properly coordinate the photos with their real-life counterparts.

Finally, Apple makes note of crowd-sourcing calibration data for automated wireframe positioning, as well as a marker-based method of alignment that uses unique features or patterns in real world objects to line up the virtual frame.

There is no evidence that Apple is planning to incorporate its augmented reality technology into the upcoming iOS 8 operating system expected to debut later this month. The company does hold a number of virtual reality inventions aimed at mapping, however, possibly suggesting a form of the tech could make its way to a consumer device in the near future.

Apple's augmented reality patent applications were first filed in March 2013 and credit Christopher G. Nicholas, Lukas M. Marti, Rudolph van der Merwe and John Kassebaum as inventors.

Mikey Campbell
