One month after receiving a patent reassignment from Israel-based 3D mapping R&D firm PrimeSense, Apple on Tuesday was granted another property detailing possible software implementations to go along with previously outlined motion-sensing hardware.

The U.S. Patent and Trademark Office assigned Apple U.S. Patent No. 8,933,876 for "Three dimensional user interface session control," or more plainly, a software UI for use with PrimeSense's motion-sensing hardware.

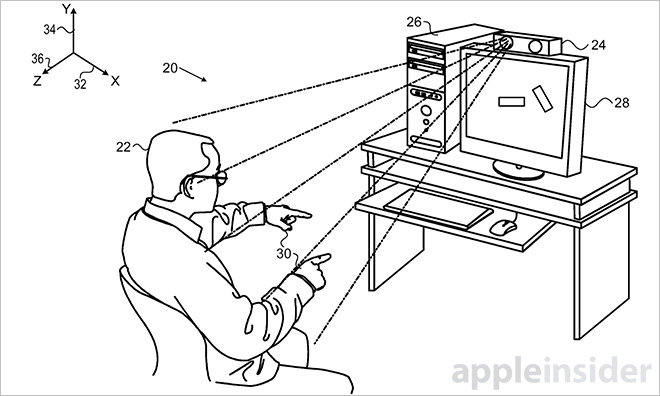

In practice, the patented system incorporates a motion and depth-sensing optical sensor, outlined in a previously reassigned patent, with proprietary software to create a three-dimensional non-tactile user interface capable of recognizing and translating user gestures into computer commands.

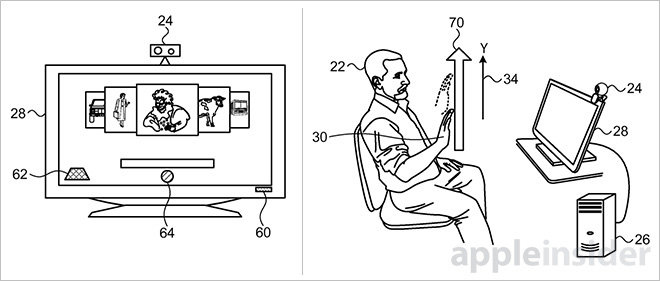

Specifically, the invention takes tracking, motion and depth input data from the camera/sensor setup and applies it to a 3D user interface. Users move their hand through multiple points, recognized in a 3D coordinate space along X, Y and Z axes, to create gestures that are then processed by a host computer as app or system commands. In one example, a user can unlock the system UI with a rising gesture made along a vertical axis.
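To make the rising-gesture example concrete, here is a minimal sketch of how a host computer might classify a series of tracked hand positions as an upward unlock motion. It is an illustration only, not the method claimed in the patent: the function name `is_rising_gesture`, the `min_rise` and `max_drift` thresholds and the use of metres are all assumptions.

```python
from typing import Sequence, Tuple

# Hypothetical representation: one (x, y, z) hand position per sensor frame,
# in metres, with Y as the vertical axis.
Point = Tuple[float, float, float]


def is_rising_gesture(
    points: Sequence[Point],
    min_rise: float = 0.20,   # assumed minimum upward travel
    max_drift: float = 0.10,  # assumed tolerance for sideways/depth wobble
) -> bool:
    """Return True if the hand only moves upward, rises by at least
    min_rise along Y, and stays within max_drift of its starting X and Z."""
    if len(points) < 2:
        return False

    x0, y0, z0 = points[0]
    prev_y = y0
    for x, y, z in points[1:]:
        if y < prev_y:
            # The hand moved downward, so this is not a rising gesture.
            return False
        if abs(x - x0) > max_drift or abs(z - z0) > max_drift:
            # Too much horizontal or depth movement for a vertical gesture.
            return False
        prev_y = y

    return points[-1][1] - y0 >= min_rise
```

A real system would likely smooth noisy sensor data and tolerate small dips, but the idea is the same: compare movement along one axis against movement along the other two.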

According to the patent, the device moves among operating states defined by three paired conditions: locked/unlocked, tracked/not tracked and active/inactive. For example, if a sensing device is locked, it may also be ignoring user input (not tracking) to avoid inadvertent unlocking commands. In this scenario, the UI would also remain in an inactive state.

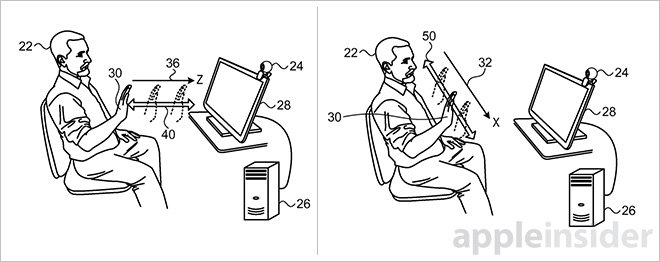

Users may alert the device to an incoming unlock command by performing a "focus gesture." These specialized movements engage the device, bringing it out of a not tracked/inactive state to locked/tracking/active. For example, a focus gesture could entail a "push" or "wave" of the hand that, when correctly recognized by the sensor, enables motion tracking and activates the UI.

Alongside provisions for focus gestures is a method of "dropping sessions," which describes a graphical and operational solution to canceling user input. For example, in a scenario invoking a soft bar (shown in the illustration above as a system dock), a user raises their hand to unlock and activate motion tracking. If the user drops their hand out of the sensing hardware's field of view, the session is likewise dropped, with the example soft bar falling offscreen to reflect an inactive UI state.
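The session flow described above (focus gesture, unlock, dropped session) can be sketched as a small state holder. This is a simplified reading of the article's description rather than the patent's actual implementation; the `Session` class and its method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """Hypothetical model of the three paired conditions in the patent."""
    locked: bool = True     # locked / unlocked
    tracking: bool = False  # tracked / not tracked
    active: bool = False    # active / inactive UI

    def on_focus_gesture(self) -> None:
        # A recognized "push" or "wave" starts tracking and activates the UI.
        # The device stays locked until an unlock gesture follows.
        self.tracking = True
        self.active = True

    def on_unlock_gesture(self) -> None:
        # Unlock gestures are ignored while the device is not tracking,
        # which guards against inadvertent unlocks.
        if self.tracking:
            self.locked = False

    def on_hand_lost(self) -> None:
        # The hand left the sensor's field of view: drop the session and
        # return to the locked / not tracked / inactive state.
        self.locked = True
        self.tracking = False
        self.active = False

    def state(self) -> tuple:
        return (self.locked, self.tracking, self.active)
```

In this sketch an unlock gesture made before a focus gesture does nothing, mirroring the article's point that a locked device may ignore input until it is deliberately engaged.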

Building on this point, Apple's patent notes that a user's gestures must be within the sensor's field of view, a seemingly obvious requirement, but one that may detract from a positive user experience if implemented incorrectly. The patent suggests an onboard light array be configured to illuminate when a user is positioned within its field of view, giving a visual cue that the system is ready for input.

Today's patent disclosure follows a December reassignment of another PrimeSense patent covering a 3D mapping and motion tracking device. The firm applied very similar infrared motion tracking technology when it helped Microsoft build the original Xbox Kinect sensor.

Apple purchased PrimeSense — and its patents — in 2013 as part of a deal worth somewhere between $345 million and $360 million. Following the acquisition, industry scuttlebutt purported Apple would likely incorporate motion-sensing hardware and software in a revised Apple TV unit, though the device has yet to be introduced.

Recent reports claim the release of Apple's next-gen set-top streamer is in limbo due to stalled talks with cable providers keen on keeping their content locked up behind onerous licensing terms.

Apple's 3D user interface patent was first filed in December 2011 and credits Micha Galor, Jonathan Pokrass and Amir Hoffnung as its inventors.

Mikey Campbell


32 Comments

And now we know why Apple hasn't released a new Thunderbolt display...

Sounds like Xbox Kinect. I just bought an LG Smart TV and the WebOS magic remote is a pretty good implementation.

I've always been curious as to who does the drawings for patents...

I've never been a fan of 3D-gestures on smartphones. It was always too clunky and pointless, but on a TV I can see myself using it a lot.

Sounds like Xbox Kinect.

Not surprising. PrimeSense made the sensors for the original Xbox 360 Kinect device.